Note: I will use FV abbreviation for first-visit and EV for every-visit.

I am reading the famous Barto Sutton Reinforcement Learning book (second edition), and after reading the following exercise, I stumbled upon a thought I can't seem to find a satisfactory answer to:

Exercise 5.5 Consider an MDP with a single nonterminal state and a single action that transitions back to the nonterminal state with probability p and transitions to the terminal state with probability 1 - p. Let the reward be +1 on all transitions, and let gamma = 1 (discount rate). Suppose you observe one episode that lasts 10 steps, with a return of 10. What are the first-visit and every-visit estimators of the value of the nonterminal state?

Now, I know the logical answer to this should be, for FV V(s) = 10 and for EV V(s) = 5.5

But my implementation finds V(s) = 1 for FV, because it considers a first-visit to the state to be the one visited in the backwards iteration implementation of the algorithm, not the actual order in which the state occurs in the given episode.

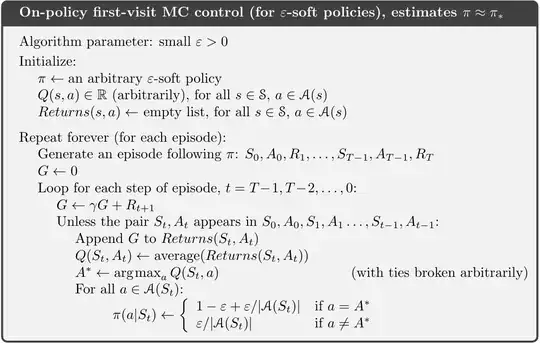

I assume the correct implementation should consider the natural order in which the state first occurs in the episode, even if we are iterating backwards for efficiency reasons (though I can't defend this assumption). I couldn't see this from the pseudocode, it is quite ambigous, since if we trace it (below, taken from the book), it seems like when interpreted literally, V(s) should = 1 in this example.

In short, my question is:

When we are doing MC FV, by FV do we mean the actual order in which the state first occurs in the episode, regardless of implementational details (i.e. forward/backward iteration).

If so, why prefer one to the other? What is the intuitive answer to this?

If I am not mistaken, I haven't seen this addressed in the book, nor anywhere else I could find, so I would be very happy if someone can give me insights into this.

Thank you.