In which types of learning tasks are linear units more useful than sigmoid activation functions in the output layer of a multi-layer neural network?

2 Answers

Sigmoid outputs are limited to the range (0, 1), so the sigmoid is a poor choice of activation function for the output layer of an MLP that must produce unbounded values, as in regression problems that forecast financial metrics such as revenue, profit or stock price.


TL;DR

Problems in which you do not want to constrain the output to the range $(0, 1)$ are better served by linear units than by sigmoid units. Regression tasks are the typical example, for instance:

- Predicting a stock price

- Predicting a person's height, etc.
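To make the range argument concrete, here is a minimal Python sketch that passes the same final-layer pre-activations through a sigmoid unit and through a linear (identity) unit. The pre-activation values, including the stock-price-like `152.37`, are made up for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid: squashes any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def linear(z: float) -> float:
    """Linear (identity) output unit: passes z through unchanged."""
    return z

# Hypothetical pre-activations from the last hidden layer.
pre_activations = [-5.0, 0.0, 5.0, 152.37]

for z in pre_activations:
    print(f"z={z:>8}: sigmoid={sigmoid(z):.4f}  linear={linear(z)}")
```

However large `z` gets, the sigmoid column never exceeds 1, so a regression target such as 152.37 is unreachable, while the linear unit can produce it directly.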

As a side note, when the output should be a continuous non-negative value, ReLU might be a better choice than a linear unit.
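A minimal sketch of that alternative, assuming a target that cannot be negative (the height-in-centimetres values below are hypothetical): ReLU clips negative pre-activations to zero but, unlike the sigmoid, leaves positive ones unbounded.

```python
def relu(z: float) -> float:
    """ReLU: max(0, z). Output is non-negative but unbounded above."""
    return max(0.0, z)

# Hypothetical pre-activations for a non-negative target such as height in cm.
for z in [-3.0, 0.0, 172.5]:
    print(f"z={z}: relu={relu(z)}")
```

One trade-off to keep in mind: ReLU's gradient is zero for negative pre-activations, so an output unit stuck in that region stops receiving updates.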

What is the Sigmoid Function?

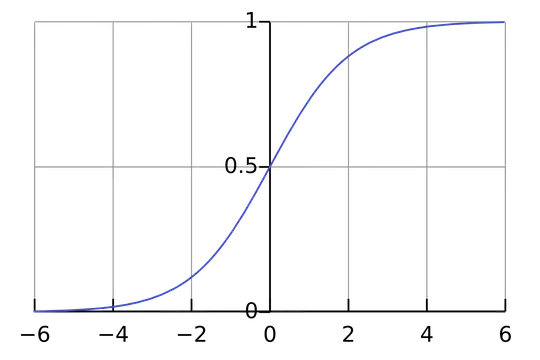

Any mathematical function whose graph has a characteristic 'S' shape is called a sigmoid.

In machine learning, "sigmoid activation function" usually means the logistic function:

$$ f(x) = \frac{1}{1 + e^{-x}} $$
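The formula translates directly into code. The sketch below is one possible Python version, not the only way to write it: the two-branch form is an assumption added here to avoid `OverflowError` from `math.exp` for large negative inputs. It also checks the standard identity $f'(x) = f(x)\,(1 - f(x))$, the differentiability property that makes the logistic function usable in gradient-based training, against a central finite difference.

```python
import math

def logistic(x: float) -> float:
    """f(x) = 1 / (1 + e^{-x}), arranged so that math.exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^{x} / (1 + e^{x}).
    ex = math.exp(x)
    return ex / (1.0 + ex)

def logistic_grad(x: float) -> float:
    """Analytic derivative: f'(x) = f(x) * (1 - f(x))."""
    fx = logistic(x)
    return fx * (1.0 - fx)

def central_difference(f, x: float, h: float = 1e-6) -> float:
    """Numerical approximation of f'(x), used only to check logistic_grad."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

print(logistic(0.0))      # 0.5
print(logistic(-1000.0))  # 0.0; the naive formula would raise OverflowError here
for x in [-4.0, 0.0, 4.0]:
    print(x, logistic_grad(x), central_difference(logistic, x))
```

The derivative peaks at $0.25$ when $x = 0$ and shrinks toward zero in both tails, which is why a saturated sigmoid output learns slowly.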

Machine Learning Use Case

Apart from numerical properties such as differentiability, which make it suitable as an activation function for neurons in a neural network, the logistic function is used in the output layer because its output is constrained to the range $(0, 1)$.

This makes it a good choice for classification tasks wherein the real number output in the range $(0, 1)$ can be interpreted as a class probability.

For example, if you are building a binary classifier that decides whether a data point belongs to a class (say, whether an image shows a cat), you can predict True when the output is $> 0.5$ and False otherwise.
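A minimal sketch of that decision rule. The function name `predict_is_cat` and the logit values are hypothetical; they stand in for whatever the network's final pre-activation produces for each image.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict_is_cat(logit: float, threshold: float = 0.5) -> bool:
    """Interpret sigmoid(logit) as P(cat) and return True above the threshold."""
    return sigmoid(logit) > threshold

# Hypothetical logits produced by the network for three images.
for logit in [2.3, -0.7, 0.0]:
    p = sigmoid(logit)
    print(f"logit={logit}: P(cat)={p:.3f} -> {predict_is_cat(logit)}")
```

Because the sigmoid is monotonic and equals 0.5 at zero, thresholding the probability at 0.5 is mathematically the same as checking whether the logit is positive.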

For regression tasks, however, you generally want the model to predict arbitrary real numbers, not values constrained to $(0, 1)$. In this case, a simple linear output layer suffices.
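To illustrate, the following sketch trains a single output neuron $\hat{y} = g(wx + b)$ by plain gradient descent on mean squared error, once with a linear $g$ and once with a sigmoid $g$. The toy data ($y = 3x + 10$), learning rate and step count are arbitrary choices for illustration, not taken from the answer above.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(activation: str, xs, ys, lr: float = 0.05, steps: int = 5000):
    """Fit y_hat = g(w * x + b) by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            z = w * x + b
            if activation == "linear":
                y_hat, dg = z, 1.0            # g(z) = z, g'(z) = 1
            else:
                y_hat = sigmoid(z)
                dg = y_hat * (1.0 - y_hat)    # g'(z) = s(z)(1 - s(z))
            err = y_hat - y
            grad_w += 2.0 * err * dg * x / n
            grad_b += 2.0 * err * dg / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical regression data: y = 3x + 10, all targets well above 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x + 10.0 for x in xs]

w_lin, b_lin = train("linear", xs, ys)
w_sig, b_sig = train("sigmoid", xs, ys)
print("linear :", [round(w_lin * x + b_lin, 3) for x in xs])
print("sigmoid:", [round(sigmoid(w_sig * x + b_sig), 3) for x in xs])
```

The linear unit recovers $w \approx 3$, $b \approx 10$, while the sigmoid unit can only push its predictions toward 1 and saturates there, far from every target.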

The following links might be helpful in choosing the right activation function for your use case:
