As we know, a classifier's output is just a set of class probabilities, usually obtained by applying softmax to the logits. Taking the $\arg\max$ to get the class the model favors most discards some information, such as the shape of the probability distribution.

A deep learning model has no reasoning capability of its own, so I formulated two measures: one for how strongly the model favors a class, and one for how much the model is asking for validation. Together they give four quadrants. Both are computed purely from the softmax output.

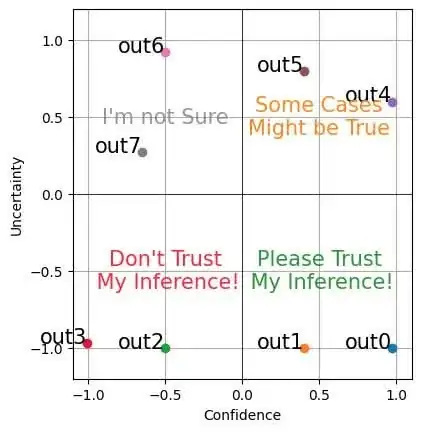

For example, here are 8 softmax outputs with 3 classes:

Out0 [0.99 0.005 0.005]: Confidence: 0.98, Uncertainty: 0.00

Out1 [0.8 0.1 0.1]: Confidence: 0.70, Uncertainty: -0.00

Out2 [0.5 0.25 0.25]: Confidence: 0.25, Uncertainty: 0.00

Out3 [0.33 0.33 0.33]: Confidence: -0.00, Uncertainty: 0.01

Out4 [0.99 0.009 0.001]: Confidence: 0.98, Uncertainty: 0.80

Out5 [0.8 0.19 0.01]: Confidence: 0.70, Uncertainty: 0.90

Out6 [0.5 0.49 0.01]: Confidence: 0.25, Uncertainty: 0.96

Out7 [0.1 0.45 0.45]: Confidence: 0.18, Uncertainty: 0.64
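
The formulas behind these two numbers are not written out above, so the following is only a sketch of one pair of formulas that comes close to the listed values, not necessarily the ones actually used. It assumes "confidence" is the top probability rescaled so that a uniform output gives 0 and a one-hot output gives 1, and that "uncertainty" is the same rescaling applied to the remaining classes after the top one is removed and the rest renormalized. The function names are hypothetical. Under this assumption Out3 gets an uncertainty of 0.00 rather than the 0.01 listed, and values that sit exactly on a rounding boundary (such as 0.985) may print differently in the last digit.

```python
def confidence(probs):
    """Top probability rescaled so uniform -> 0 and one-hot -> 1 (assumed formula)."""
    k = len(probs)
    return (max(probs) - 1.0 / k) / (1.0 - 1.0 / k)


def uncertainty(probs):
    """Same rescaling applied to the classes left after dropping the top one (assumed formula)."""
    rest = sorted(probs, reverse=True)[1:]  # drop the single largest probability
    total = sum(rest)
    if len(rest) < 2 or total == 0.0:
        return 0.0  # nothing left to compare
    return confidence([p / total for p in rest])


outputs = [
    [0.99, 0.005, 0.005],
    [0.8, 0.1, 0.1],
    [0.5, 0.25, 0.25],
    [0.33, 0.33, 0.33],
    [0.99, 0.009, 0.001],
    [0.8, 0.19, 0.01],
    [0.5, 0.49, 0.01],
    [0.1, 0.45, 0.45],
]

for i, probs in enumerate(outputs):
    print(
        f"Out{i} {probs}: "
        f"Confidence: {confidence(probs):.2f}, "
        f"Uncertainty: {uncertainty(probs):.2f}"
    )
```

Read this way, the first measure depends only on the largest probability, while the second depends only on how unevenly the leftover probability mass is split among the other classes.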

Rescaling both values from $[0,1]$ to $[-1, 1]$ for visualization gives:

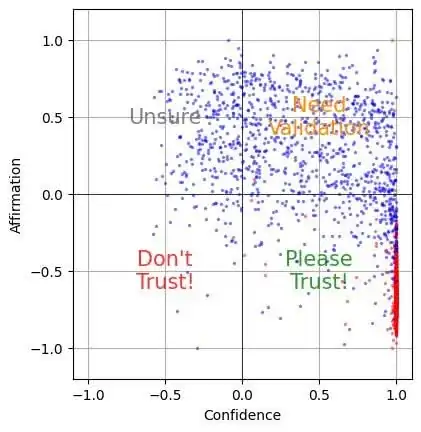
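
The rescaling used for the plot can be sketched as below, assuming it is a plain linear map from $[0,1]$ to $[-1,1]$ and that the first number goes on the x-axis and the second on the y-axis. The exact mapping is not stated, so `rescale` is a hypothetical helper, and the sample pairs are simply taken from the list of outputs.

```python
def rescale(value):
    """Map a score from [0, 1] to [-1, 1] linearly (assumed mapping)."""
    return 2.0 * value - 1.0


# (confidence, uncertainty) pairs copied from the listed examples
points = {"Out2": (0.25, 0.00), "Out5": (0.70, 0.90)}

for name, (conf, unc) in points.items():
    x, y = rescale(conf), rescale(unc)
    print(f"{name}: x={x:+.2f}, y={y:+.2f}")
```

With this mapping the midpoint 0.5 of each score lands on the axis, so the signs of x and y decide which quadrant a sample falls into.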

Then I tried it on real data: MNIST handwritten digits, with 10 classes. I focused on the digit 1, which subjectively should be easy for the model to classify. But what if I ask it about something unusual that it has never seen before, such as a version of the digit 1 rotated by 90°?

As I expected, the model misclassifies the unusual rotated version of the digit 1, which leads to low confidence and a high need for assistance.

If I visualize this with the four quadrants, it's clear that the blue points (the rotated samples) are seeking validation or assistance compared to the red points (the originals).

This could therefore be useful for explainable AI in critical inference settings such as medical diagnosis, because it does not need ground truth.

The question is: what is a better description for the x-axis and the y-axis?

As you can see, I have been inconsistent in the terms I use for the y-axis:

- Uncertainty (I don't think it fits my definition)

- Affirmation (maybe there is a better one)

- Assertion

- Assistance

- Vagueness

So, what is the best name for this? (No, this is not an opinion question; it's an objective question that intersects with philosophy.)