LLMs such as GPT-3 typically use subword tokenization; the GPT models, for example, use Byte Pair Encoding (BPE). These methods split words into smaller pieces, so the model works not only with whole words but also with word fragments (subwords) that occur frequently in the training data. The model then maps each token to an embedding.

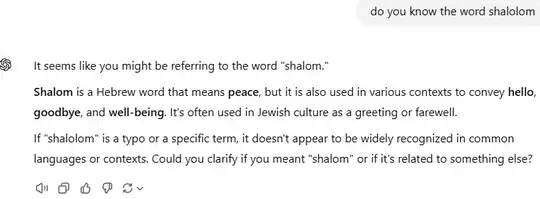
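
To make the merge idea behind BPE concrete, here is a minimal sketch in Python. It is a character-level toy, not the byte-level tokenizer that GPT models actually ship with, and the function names (`learn_bpe`, `merge_pair`, `apply_bpe`) are hypothetical names chosen for illustration. It repeatedly finds the most frequent adjacent pair of symbols in a small corpus and merges it into a new subword.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words (toy, character-level)."""
    # Each word starts as a tuple of single characters, weighted by its frequency.
    vocab = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs across the corpus.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        # Rewrite the corpus with the chosen pair merged.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Tokenize `word` by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    merges = learn_bpe(["abab", "abab"], num_merges=1)
    print(merges)
    print(apply_bpe("abc", merges))
```

Frequent character sequences end up as single tokens, while rare or unseen words fall back to smaller pieces, which is why a subword vocabulary can cover text it never saw as whole words.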

Therefore, if you type a made-up or novel word, ChatGPT may still produce a reasonable response: the underlying model has learned, probabilistically, how words are typically formed (prefixes, suffixes, and other common word parts) and how subword tokens relate to one another, and it uses this knowledge to predict the likely continuation of the text. For example, if a typo like "rel8ivity" appears in a prompt at inference time, the tokenizer might split it into pieces such as "rel", "8", and "ivity" (the exact split depends on the tokenizer's vocabulary). The model can still associate these tokens with "relativity", most likely through "rel" and "ivity", because similar subword sequences appear throughout its enormous training corpus. This allows LLMs to see through minor typos and still recover the most probable intended meaning.
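
The "rel8ivity" example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `TOY_VOCAB` is a made-up vocabulary, and `segment` is a hypothetical helper that uses greedy longest-match segmentation (closer in spirit to WordPiece than to BPE merge replay), so the splits it produces are not what any real GPT tokenizer is guaranteed to return.

```python
# Hypothetical toy vocabulary; a real tokenizer has tens of thousands of entries.
TOY_VOCAB = {"rel", "at", "ivity", "re", "ity"}


def segment(word, vocab):
    """Split `word` greedily into the longest known pieces, left to right.

    Any character that starts no known piece becomes a single-character token.
    """
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first; a single character is the fallback.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens


if __name__ == "__main__":
    clean = segment("relativity", TOY_VOCAB)
    typo = segment("rel8ivity", TOY_VOCAB)
    print(clean)                    # ['rel', 'at', 'ivity']
    print(typo)                     # ['rel', '8', 'ivity']
    print(set(clean) & set(typo))   # the tokens both spellings share
```

In this toy setup the typo and the correct spelling share the "rel" and "ivity" tokens, which gives a rough intuition for why the model's learned associations can carry over to the misspelled form.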