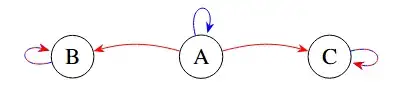

Consider the chainworld above with two actions, move (in red) and stay (in blue). Moving in A is stochastic: the agent moves to B with probability $p$ and to C with probability $1-p$. Moving or staying in B and C is irrelevant.

Clearly, there exists an optimal policy, and it depends on the rewards in A, B, and C, the probability $p$, and the discount factor $\gamma$.

However, can the policy performance be defined? Consider the policy that does "move" in A: the induced Markov chain has two stationary state distributions $\mu_\pi$ (the agent stays forever in B or in C). Is the performance of this policy, $J^\pi = \sum_s \mu_\pi(s) V^\pi(s)$, defined? We know that it is defined when the MDP is ergodic and $\mu_\pi(s)$ exists and is unique, but what about this scenario? The problem I see is that there are two $\mu_\pi(s)$, but $J^\pi$ does not account for that. Intuitively, I would simply weigh the sum by the probability that the agent ends up in each stationary distribution, i.e., $J^\pi = \sum_{\mu_\pi} p(\mu_\pi) \sum_s \mu_\pi(s) V^\pi(s)$. In the example above, $p(\mu_\pi)$ would just be $p$ for the distribution concentrated on B and $1-p$ for the one concentrated on C, but in more complex MDPs it may depend on multiple probabilities.
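To make the weighted sum concrete, here is a minimal sketch for the "move" policy in this chainworld. Several things are assumptions for illustration only: the rewards depend only on the state, the numbers for `r`, `p`, and `gamma` are arbitrary, the agent starts in A, and the helper names (`evaluate_policy`, `limiting_distribution`, `dot`) are hypothetical. It compares the proposed $J^\pi$ with the value obtained from the limiting state distribution reached from A.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))

def evaluate_policy(P, r, gamma, sweeps=2000):
    """Iterative policy evaluation: V <- r + gamma * P V, repeated `sweeps` times."""
    n = len(r)
    V = [0.0] * n
    for _ in range(sweeps):
        V = [r[s] + gamma * dot(P[s], V) for s in range(n)]
    return V

def limiting_distribution(P, start, steps=100):
    """Push a start distribution through the chain: d <- d P, repeated `steps` times."""
    n = len(start)
    d = list(start)
    for _ in range(steps):
        d = [sum(d[s] * P[s][t] for s in range(n)) for t in range(n)]
    return d

# Assumed example values (not from the question).
p, gamma = 0.3, 0.9
r = [0.0, 1.0, 2.0]           # assumed state rewards for A, B, C

# Markov chain induced by "move" in A; B and C are absorbing.
P = [
    [0.0, p, 1.0 - p],        # A -> B with prob. p, A -> C with prob. 1 - p
    [0.0, 1.0, 0.0],          # B -> B
    [0.0, 0.0, 1.0],          # C -> C
]

V = evaluate_policy(P, r, gamma)

# The two stationary distributions considered in the question.
mu_B = [0.0, 1.0, 0.0]
mu_C = [0.0, 0.0, 1.0]

# Proposed performance: weigh each stationary distribution by the
# probability that the agent ends up in it.
J_weighted = p * dot(mu_B, V) + (1.0 - p) * dot(mu_C, V)

# Same quantity via the limiting distribution when starting in A.
mu_from_A = limiting_distribution(P, [1.0, 0.0, 0.0])
J_limit = dot(mu_from_A, V)

print(J_weighted, J_limit)
```

Under these assumptions the two numbers agree, because the limiting distribution from A is exactly the $p$ / $1-p$ mixture of the two stationary distributions. With a different start state the mixture weights, and hence the result, would change.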

I haven't found anything like that in the RL literature, and I was wondering why.