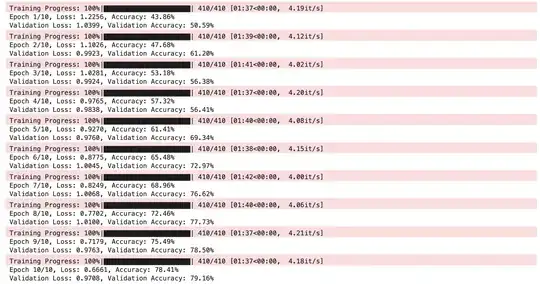

I'm training a deep learning model in PyTorch for a classification problem, and I’ve noticed that the validation accuracy is consistently higher than the training accuracy throughout the training process. This behavior persists even after tuning the model. Here’s a quick summary of my problem and setup:

Dataset: The dataset is imbalanced, particularly for two of the classes. This imbalance might be affecting the model’s performance.
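One quick check for an imbalanced dataset is whether the training and validation splits have the same class proportions. If the validation split holds relatively more samples of the easier or majority classes, its accuracy can come out higher. A minimal sketch, assuming integer class labels in PyTorch tensors. The label tensors and the helper name `class_proportions` are hypothetical.

```python
import torch

def class_proportions(labels, num_classes):
    """Return the fraction of samples belonging to each class (hypothetical helper)."""
    counts = torch.bincount(labels, minlength=num_classes).float()
    return counts / counts.sum()

# Hypothetical label tensors for the training and validation splits
train_labels = torch.tensor([0, 0, 0, 1, 1, 1, 2, 2, 3, 3])
val_labels = torch.tensor([0, 0, 1, 1, 2, 3])

train_p = class_proportions(train_labels, num_classes=4)
val_p = class_proportions(val_labels, num_classes=4)
print(train_p)
print(val_p)
```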

Architecture (human activity recognition, HAR):

- A CNN feature extractor with two convolutional layers:
  - First layer: 64 filters, kernel size = 10, stride = 10.
  - Second layer: 32 filters, kernel size = 5, stride = 2.
- A dense layer that projects the CNN features into a compact latent space, creating a shared feature representation across the different branches.
- An LSTM layer for sequence modeling.
- A final dense layer for classification.
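For illustration, here is a minimal single-branch PyTorch sketch of this kind of architecture, using the stated convolution settings. Several details are assumptions rather than the actual model: 3 input channels, a window length of 1280, the latent size, the LSTM hidden size, 6 classes, ReLU activations, and the dropout placement. The multi-branch structure is omitted.

```python
import torch
import torch.nn as nn

class HARModel(nn.Module):
    """Hypothetical single-branch sketch: CNN -> dense latent -> LSTM -> dense classifier."""

    def __init__(self, in_channels=3, latent_dim=64, lstm_hidden=64, num_classes=6, dropout=0.5):
        super().__init__()
        # CNN feature extractor: 64 filters (k=10, s=10), then 32 filters (k=5, s=2)
        self.conv = nn.Sequential(
            nn.Conv1d(in_channels, 64, kernel_size=10, stride=10),
            nn.ReLU(),
            nn.Conv1d(64, 32, kernel_size=5, stride=2),
            nn.ReLU(),
            nn.Dropout(dropout),  # dropout after the last CNN layer (assumed placement)
        )
        # Dense layer projecting each time step into a compact latent space
        self.latent = nn.Linear(32, latent_dim)
        self.lstm = nn.LSTM(latent_dim, lstm_hidden, batch_first=True)
        self.lstm_dropout = nn.Dropout(dropout)
        self.classifier = nn.Linear(lstm_hidden, num_classes)

    def forward(self, x):
        # x: (batch, channels, time)
        feats = self.conv(x)                   # (batch, 32, time')
        feats = feats.transpose(1, 2)          # (batch, time', 32) for the LSTM
        z = torch.relu(self.latent(feats))     # (batch, time', latent_dim)
        out, _ = self.lstm(z)                  # (batch, time', lstm_hidden)
        last = self.lstm_dropout(out[:, -1])   # keep only the last time step
        return self.classifier(last)           # (batch, num_classes)

# Usage with a hypothetical batch of 4 windows, 3 channels, 1280 samples each
model = HARModel()
x = torch.randn(4, 3, 1280)
logits = model(x)
print(logits.shape)
```

With a 1280-sample window, the first convolution yields 128 time steps and the second yields 62, so the LSTM sees a sequence of length 62.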

Regularization: I’ve experimented with different dropout rates (e.g., 0.3, 0.5, 0.6), mainly in the LSTM layer and the last CNN layer, and the rate does affect the stability of training.
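One relevant detail about dropout in PyTorch is that `nn.Dropout` behaves differently in training and evaluation modes. In `train()` mode it zeroes activations at random and scales the survivors by 1/(1-p). In `eval()` mode it is the identity. Accuracy recorded during training therefore comes from a noisier network than the one used for validation. A minimal sketch with p = 0.5 on a toy input:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1, 10)

drop.train()       # training mode: some units zeroed, survivors scaled by 1/(1-p) = 2
train_out = drop(x)

drop.eval()        # evaluation mode: dropout passes the input through unchanged
eval_out = drop(x)

print(train_out)   # a mix of 0.0 and 2.0
print(eval_out)    # all ones
```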

Training Details:

- Loss function: cross-entropy. I modified the cross-entropy loss to account for each branch's predictions in the latent-space representation.
- Optimizer: Adam with a learning rate of 0.0005.
- Batch size: 32.
- Epochs: 10.
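The exact form of the modified loss isn't given above. One common way to combine per-branch predictions is a weighted average of the cross-entropy of each branch's logits. The sketch below shows that approach with one Adam step (lr = 0.0005, batch of 32). The helper `multi_branch_ce`, the two toy linear heads, the feature size of 16, and the 6 classes are all hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def multi_branch_ce(branch_logits, targets, weights=None):
    """Hypothetical combined loss: weighted sum of per-branch cross-entropy (equal weights by default)."""
    if weights is None:
        weights = [1.0 / len(branch_logits)] * len(branch_logits)
    return sum(w * F.cross_entropy(logits, targets) for w, logits in zip(weights, branch_logits))

torch.manual_seed(0)
# Hypothetical two-branch setup: each branch is a toy linear head over a 16-d latent vector
heads = nn.ModuleList([nn.Linear(16, 6), nn.Linear(16, 6)])
optimizer = torch.optim.Adam(heads.parameters(), lr=0.0005)

features = torch.randn(32, 16)          # one batch of 32 latent vectors
targets = torch.randint(0, 6, (32,))    # integer class labels

optimizer.zero_grad()
loss = multi_branch_ce([h(features) for h in heads], targets)
loss.backward()
optimizer.step()
print(loss.item())
```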

What I’ve Tried:

- Dropout tuning: tried dropout rates between 0.2 and 0.6 in the LSTM and fully connected layers.
- Reducing model complexity: lowered the number of hidden units in the LSTM, which stabilized validation accuracy but didn’t resolve the discrepancy.
- Class imbalance considerations: since the dataset is imbalanced, I suspect the imbalance might be contributing to the problem.
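A standard way to account for class imbalance in PyTorch is to pass per-class weights to `nn.CrossEntropyLoss` through its `weight` argument. The sketch below uses inverse-frequency weights, N / (num_classes * count_c), so rare classes count more in the loss. The label vector, in which classes 4 and 5 are rare, is hypothetical toy data, and `inverse_frequency_weights` is a hypothetical helper.

```python
import torch
import torch.nn as nn

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by N / (num_classes * count_c); rarer classes get larger weights."""
    counts = torch.bincount(labels, minlength=num_classes).float()
    counts = counts.clamp(min=1)  # avoid division by zero for classes absent from labels
    return labels.numel() / (num_classes * counts)

# Hypothetical training labels: classes 4 and 5 are under-represented
labels = torch.tensor([0] * 30 + [1] * 30 + [2] * 30 + [3] * 30 + [4] * 5 + [5] * 5)
weights = inverse_frequency_weights(labels, num_classes=6)
criterion = nn.CrossEntropyLoss(weight=weights)

# Usage on a toy batch of logits
logits = torch.randn(8, 6)
loss = criterion(logits, labels[:8])
print(weights)
```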

Is it normal for validation accuracy to be consistently higher than training accuracy in such a scenario? Could the class imbalance or dropout be the primary reason for this behavior? Is this model overfitting?