I have a question about whether the Deterministic Policy Gradient (DPG) algorithm in its basic form is policy-based or actor-critic. I have been searching for the answer for a while. Some sources say it is policy-based (like a post here a while ago), whereas others do not explicitly say it is an actor-critic method, only that it uses an actor-critic framework to optimize the policy.

I know that actor-critic methods are essentially policy-based methods augmented with a critic to improve learning efficiency and stability.
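
To make that distinction concrete, here is a minimal sketch of how I picture the two kinds of update. It rests on my own simplifying assumptions: a single-state (bandit) problem, a softmax policy over action preferences, and hypothetical function names (`reinforce_step`, `actor_critic_step`) that do not come from any library. It uses a stochastic policy, so it is not DPG itself; it only illustrates "policy-based" versus "policy-based plus a critic".

```python
import math

def softmax(prefs):
    """Turn action preferences into action probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_pi(prefs, action):
    """Gradient of log softmax w.r.t. the preferences: one_hot(action) - pi."""
    pi = softmax(prefs)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(pi)]

def reinforce_step(prefs, action, ret, lr=0.1):
    """Pure policy-based update: the sampled return weights the gradient."""
    g = grad_log_pi(prefs, action)
    return [p + lr * ret * gi for p, gi in zip(prefs, g)]

def actor_critic_step(prefs, value, action, reward, lr_actor=0.1, lr_critic=0.1):
    """Actor-critic update: a learned value estimate (the critic) replaces
    the raw return. Assumes a one-step episode, so the TD error is simply
    reward - value."""
    delta = reward - value
    g = grad_log_pi(prefs, action)
    new_prefs = [p + lr_actor * delta * gi for p, gi in zip(prefs, g)]
    new_value = value + lr_critic * delta
    return new_prefs, new_value
```

In this sketch the only difference is the weight on the gradient: the raw return in the first case, the critic's error signal in the second. When the critic already predicts the reward exactly, the actor's preferences stop changing.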

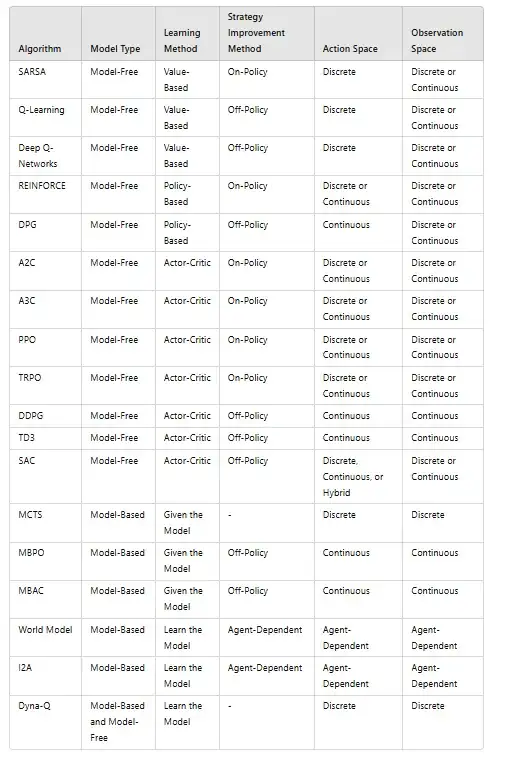

I'm also asking because I'm trying to build a classification of RL algorithms for a project of my own. I've attached the table; please point out any mistakes I might have made or anything that could be improved.