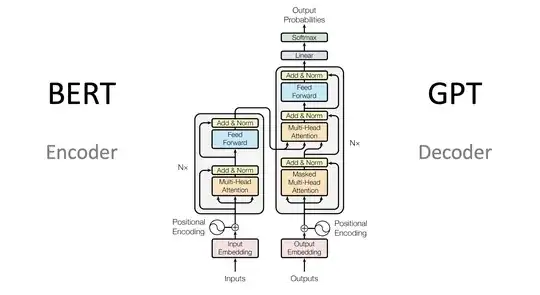

I wonder why GPT models use a decoder-only architecture instead of the full encoder-decoder architecture. In the full encoder-decoder transformer, the input sequence is converted into contextual embeddings once, and the output is then generated autoregressively. In the decoder-only case, however, the input is fed in again at each step of predicting the next word. So the full architecture seems to take less time and be more efficient. Also, a decoder-only architecture cannot capture the bidirectional context of the input, which may be useful for contextual understanding.
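To make the bidirectional-context point concrete, here is a minimal pure-Python sketch, not GPT's actual implementation. It assumes single-head self-attention on scalar features with identity query/key/value projections (a simplification for illustration). The helper names `attention`, `bidirectional_mask`, and `causal_mask` are hypothetical. The only difference between the encoder-style layer and the decoder-only (GPT-style) layer here is the mask: the bidirectional mask lets every position attend to every other position, while the causal mask lets position `i` attend only to positions `0..i`.

```python
import math

def attention(queries, keys, values, mask):
    """Single-head scaled dot-product attention on scalar (1-D) features.

    mask[i][j] is True if position i may attend to position j.
    With feature dimension d = 1, the 1/sqrt(d) scaling factor is 1.
    """
    out = []
    for i, q in enumerate(queries):
        # Disallowed positions get -inf so their softmax weight becomes 0.
        scores = [q * k if mask[i][j] else -math.inf for j, k in enumerate(keys)]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, values)) / total)
    return out

def bidirectional_mask(n):
    # Encoder-style: every position sees the whole sequence.
    return [[True] * n for _ in range(n)]

def causal_mask(n):
    # Decoder-only style: position i sees only positions 0..i.
    return [[j <= i for j in range(n)] for i in range(n)]

# Two inputs that differ only in the last token (hypothetical values).
x_a = [0.5, 1.0, -0.3]
x_b = [0.5, 1.0, 2.0]

bidir_a = attention(x_a, x_a, x_a, bidirectional_mask(3))
bidir_b = attention(x_b, x_b, x_b, bidirectional_mask(3))
causal_a = attention(x_a, x_a, x_a, causal_mask(3))
causal_b = attention(x_b, x_b, x_b, causal_mask(3))

# Encoder-style: changing a later token changes the output at position 0.
print("bidirectional, position 0 changed:", bidir_a[0] != bidir_b[0])
# Decoder-only style: outputs at earlier positions cannot see the later token.
print("causal, positions 0-1 unchanged:", causal_a[:2] == causal_b[:2])
```

Running this prints `True` for both checks: under the causal mask, the representation of an earlier input token never incorporates information from tokens after it, which is the loss of bidirectional context described above.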
