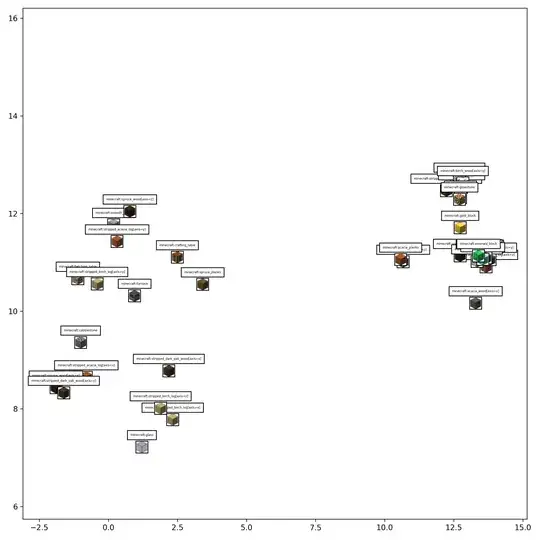

I've curated a dataset of player-made Minecraft builds. Each unique Minecraft block is tokenized and treated as a unique "word", as in NLP. I've trained a Skip-Gram model on the dataset (using context "cubes" and target "blocks" in place of context windows and target words). When I plot the embeddings, they look meaningful: nearby embeddings correspond to similar blocks.

Now I'm attempting to train a Variational AutoEncoder on the embedded builds, where each build is converted from tokens to the pre-trained embeddings from the Skip-Gram model. My VAE is fully convolutional, modeled after the encoder and decoder parts of Stable Diffusion, only extended to 3D. Each data point therefore has size:

(Batch Size, Depth, Height, Width, Embedding Dimension)
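To make that shape concrete, here is a minimal sketch of how a token grid could be mapped to such a tensor. The vocabulary size, embedding dimension, build size, and the random `pretrained` matrix are placeholders standing in for the real Skip-Gram weights. Note that PyTorch's `nn.Conv3d` expects channels-first input, so the embedding axis has to be moved before the first convolution.

```python
import torch
import torch.nn as nn

# Placeholder sizes, not the real ones
vocab_size, embed_dim = 4000, 32
# Stand-in for the pre-trained Skip-Gram embedding matrix
pretrained = torch.randn(vocab_size, embed_dim)

# Frozen lookup table: token id -> embedding vector
embed = nn.Embedding.from_pretrained(pretrained, freeze=True)

# A batch of 2 builds, each a 16x16x16 grid of block token ids
tokens = torch.randint(0, vocab_size, (2, 16, 16, 16))  # (B, D, H, W)

x = embed(tokens)                                # (B, D, H, W, E)
x_cf = x.permute(0, 4, 1, 2, 3).contiguous()     # (B, E, D, H, W) for Conv3d

# Hypothetical first encoder layer
conv = nn.Conv3d(embed_dim, 8, kernel_size=3, padding=1)
out = conv(x_cf)                                 # (B, 8, D, H, W)
```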

I'm having trouble training the model, specifically with the reconstruction error loss term. My KL-divergence seems to be working well. I've tried PyTorch's CosineEmbeddingLoss since the embeddings are generally nonlinear, weighting the loss of each block according to its probability of occurring in the build. I've also tried MeanSquaredError and LogCosh, and those didn't work very well.
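For reference, a weighted cosine reconstruction loss of this kind might look roughly like the sketch below. It uses `F.cosine_similarity` directly rather than `CosineEmbeddingLoss` (which computes `1 - cos` for positive pairs) so that per-voxel weights are easy to apply. The function name and the weighting scheme are assumptions: here each voxel's weight is simply its block's frequency within a single build, which may not match the exact weighting described above.

```python
import torch
import torch.nn.functional as F

def weighted_cosine_loss(recon, target, weights, eps=1e-8):
    """Weighted mean of (1 - cosine similarity) over all voxels.

    recon, target: (B, D, H, W, E) embedding grids
    weights:       (B, D, H, W) non-negative per-voxel weights
    """
    per_voxel = 1.0 - F.cosine_similarity(recon, target, dim=-1, eps=eps)
    return (weights * per_voxel).sum() / weights.sum().clamp_min(eps)

# Toy example with one 4x4x4 build and a 10-block vocabulary (placeholders)
tokens = torch.randint(0, 10, (1, 4, 4, 4))
freq = torch.bincount(tokens.flatten(), minlength=10).float() / tokens.numel()
weights = freq[tokens]                        # per-voxel block frequency

target = torch.randn(1, 4, 4, 4, 8)
recon = target + 0.1 * torch.randn_like(target)
loss = weighted_cosine_loss(recon, target, weights)
```

Whether frequent blocks should be up-weighted (as here) or down-weighted (for example with inverse frequency) is a design choice that this sketch leaves open.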

I've perused many repositories that implement a VAE, and nearly all of them use either BinaryCrossEntropy or simply CrossEntropy. Would those loss functions be appropriate for my model? My embeddings are real-valued. The task is somewhat of a classification problem, with around 4000 unique classes due to the number of blocks in Minecraft. I've gone with embeddings because if the model outputs a block similar to the actual block, that would still be a viable build. I'm beginning to think that this task is much more difficult than I anticipated.
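To illustrate what the cross-entropy option could look like with real-valued embeddings, here is one possible sketch, not something I have validated on this task: the decoder still outputs a vector per voxel, that vector is scored against every row of the embedding table via a dot product, and the scores are treated as logits over the block vocabulary. The function name `embedding_logit_ce` and all sizes are hypothetical.

```python
import torch
import torch.nn.functional as F

def embedding_logit_ce(decoded, tokens, embedding_table):
    """Cross-entropy over block classes, with logits from embedding similarity.

    decoded:         (B, D, H, W, E) decoder output in embedding space
    tokens:          (B, D, H, W) ground-truth block ids (long)
    embedding_table: (V, E) block embeddings, e.g. the Skip-Gram matrix
    """
    logits = decoded @ embedding_table.T             # (B, D, H, W, V)
    num_classes = logits.shape[-1]
    # Flatten voxels so cross_entropy sees (N, V) logits and (N,) targets
    return F.cross_entropy(logits.reshape(-1, num_classes), tokens.reshape(-1))

# Toy example: 50 blocks, 16-dim unit-norm embeddings (placeholders)
torch.manual_seed(0)
table = F.normalize(torch.randn(50, 16), dim=-1)
tokens = torch.randint(0, 50, (2, 4, 4, 4))

# A decoder output pointing strongly at the correct embedding scores a low loss
good = 50.0 * table[tokens]
loss_good = embedding_logit_ce(good, tokens, table)

# An unrelated random output scores a much higher loss
loss_rand = embedding_logit_ce(torch.randn(2, 4, 4, 4, 16), tokens, table)
```

Because blocks with similar embeddings receive similar logits, a near-miss prediction is scored better than an unrelated one, although unlike a pure embedding-distance loss the target itself is still a single correct class per voxel.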

Perhaps another architecture would be better suited? I lack the necessary intuition for generative modeling, as it's not the kind of ML I usually work on. Compute is not a limitation, as I have access to the H100s at my university.