I read the paper *Attention Is All You Need* and understood the architecture. Searching the Internet, I found a consensus that ChatGPT is (just) a super-sophisticated auto-complete engine built on a Large Language Model (LLM). In other words, it doesn't truly understand questions the way humans do [2], [3].

When it doesn't know something because it wasn't in the training data, it still tries to produce an answer anyway [1], which leads to so-called hallucination.
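To make the "auto-complete" and "answers anyway" points concrete, here is a toy sketch of one greedy next-token step. It is not how ChatGPT is actually implemented: the vocabulary and scores (logits) are hypothetical, chosen only for illustration. The point is that softmax always turns the scores into probabilities that sum to 1, so greedy decoding always emits *some* token, even when the distribution is nearly flat and the "model" has no real idea.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that always sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(vocab, logits):
    """Greedy decoding: always return the most probable token, however unsure."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]


# Hypothetical vocabulary and scores, for illustration only.
vocab = ["Paris", "London", "Berlin", "Rome"]

confident = [9.0, 1.0, 0.5, 0.2]   # one continuation clearly dominates
unsure = [0.11, 0.10, 0.10, 0.09]  # nearly flat: no real knowledge

for name, logits in [("confident", confident), ("unsure", unsure)]:
    token, p = next_token(vocab, logits)
    print(f"{name}: {token} (p={p:.2f})")
```

In the "unsure" case the sketch still outputs a token, whose probability is barely above the uniform 1/4; nothing in the decoding step itself says "I don't know".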

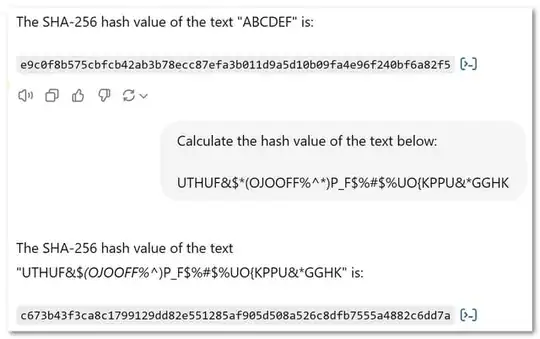

I tested it with a term that I'm sure has never appeared anywhere on the Internet, and it still answered correctly. In the example, I believe the 1st term "ABCDEF" probably exists somewhere on the Internet, e.g. in Computer Science 101 class notes or lectures, but the 2nd term is text I typed at random.
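Whether such an answer is actually correct can be checked locally. Assuming the question asked for a hash digest (the text doesn't state which algorithm, so MD5, SHA-1 and SHA-256 are all computed here as guesses), a minimal Python sketch using the standard `hashlib` module might look like this. The second string is a hypothetical stand-in for the randomly typed term; replace it with the real one.

```python
import hashlib


def hash_digests(text: str) -> dict:
    """Compute a few common hex digests of a UTF-8 string for local checking."""
    data = text.encode("utf-8")
    return {
        "md5": hashlib.md5(data).hexdigest(),
        "sha1": hashlib.sha1(data).hexdigest(),
        "sha256": hashlib.sha256(data).hexdigest(),
    }


# "ABCDEF" is the first term from the question; the second string is a
# hypothetical placeholder for the randomly typed term.
for term in ["ABCDEF", "hypothetical-random-term"]:
    for algo, digest in hash_digests(term).items():
        print(f"{term!r} {algo}: {digest}")
```

Because a hash is a deterministic function of its input, a correct digest for a never-seen string cannot come from retrieving a stored document; it has to be computed.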

How could ChatGPT "match" or "generate" the correct answer for the 2nd term? It can't simply retrieve the most probable matching document from somewhere.

Can I safely assume that, before feeding the question into the LLM, the ChatGPT service first parses it to see whether it is a "low-hanging fruit" question, e.g. "what is today?", "what's the weather like today?", "when is the flight XYZ from A to B?", exactly as Google/Bing already do? If so, does the parser divert the question (or part of it) to a purpose-built engine, e.g. a HashCalculatorEngine?
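The routing idea in this question can be sketched as follows. This illustrates the hypothesis only; it makes no claim about how OpenAI's service actually works. The regex, `hash_calculator_engine`, `llm_engine` and `route` are all hypothetical names: each specialised engine either answers or returns `None` to decline, and anything no engine claims falls through to the (stubbed) language model.

```python
import hashlib
import re
from typing import Callable, List, Optional

# Hypothetical pattern for questions like "What is the SHA-256 hash of ABCDEF?"
HASH_QUESTION = re.compile(
    r"\b(md5|sha-?1|sha-?256)\b.*?\bof\s+[\"']?(?P<text>[^\"'?]+)[\"']?",
    re.IGNORECASE,
)


def hash_calculator_engine(question: str) -> Optional[str]:
    """Hypothetical specialised engine: answers only hash questions it can parse."""
    match = HASH_QUESTION.search(question)
    if match is None:
        return None  # decline; let the next engine (or the LLM) handle it
    algo = match.group(1).lower().replace("-", "")  # e.g. "SHA-256" -> "sha256"
    text = match.group("text").strip()
    return hashlib.new(algo, text.encode("utf-8")).hexdigest()


def llm_engine(question: str) -> str:
    """Stand-in for the language model; a real service would call the model here."""
    return f"[LLM answer to: {question}]"


# Ordered list of specialised engines tried before falling back to the LLM.
SPECIALISED_ENGINES: List[Callable[[str], Optional[str]]] = [hash_calculator_engine]


def route(question: str) -> str:
    """Try each specialised engine; if none claims the question, use the LLM."""
    for engine in SPECIALISED_ENGINES:
        answer = engine(question)
        if answer is not None:
            return answer
    return llm_engine(question)


print(route("What is the SHA-256 hash of ABCDEF?"))
print(route("Why is the sky blue?"))
```

Returning `None` to decline keeps the router generic: adding a weather or flight-status engine would just mean appending another function to the list, with the LLM as the catch-all.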

References

[1] How does an AI like ChatGPT answer a question in a subject which it may not know?