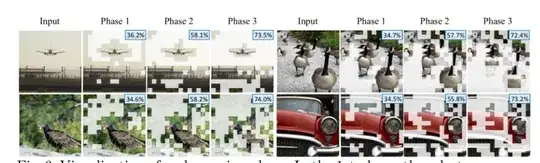

I am currently looking for ways to improve Transformer performance in image processing, especially in image segmentation. I found the paper "SPViT: Enabling Faster Vision Transformers via Latency-aware Soft Token Pruning" by Kong, Z., et al. TL;DR: they run multiple rounds of Transformer training and pruning until they reach a balance between accuracy and training cost. Basically, the paper tries to preserve accuracy with lighter training by pruning the unimportant tokens identified by each Transformer head.
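To check that I understand the pruning idea, here is a toy sketch in plain Python of score-based top-k token pruning, where the pruned tokens are merged into one extra token instead of being thrown away. This is only my own illustration, not the paper's method: the per-head scores are made-up inputs (in SPViT they come from a learned token selector), and the names `prune_tokens` and `head_scores` are hypothetical.

```python
from typing import List

Token = List[float]  # one token embedding


def prune_tokens(tokens: List[Token], head_scores: List[List[float]], keep: int) -> List[Token]:
    """Keep the `keep` highest-scoring tokens and merge the rest into one extra token.

    head_scores[h][i] is a hypothetical importance score that head h assigns to token i.
    """
    n = len(tokens)
    # Average the per-head scores so every head has a say in which tokens survive.
    avg = [sum(scores[i] for scores in head_scores) / len(head_scores) for i in range(n)]
    order = sorted(range(n), key=lambda i: avg[i], reverse=True)
    kept_idx = sorted(order[:keep])  # keep the surviving tokens in their original order
    dropped_idx = order[keep:]
    kept = [tokens[i] for i in kept_idx]
    if dropped_idx:
        # Score-weighted average of the pruned tokens, appended as a single "package" token.
        dim = len(tokens[0])
        total = sum(avg[i] for i in dropped_idx)
        if total > 0:
            package = [sum(avg[i] * tokens[i][d] for i in dropped_idx) / total for d in range(dim)]
        else:
            package = [sum(tokens[i][d] for i in dropped_idx) / len(dropped_idx) for d in range(dim)]
        kept.append(package)
    return kept
```

Merging instead of deleting is my reading of the "soft" in soft token pruning; the paper's actual selector is learned, not a fixed weighted average like this.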

From what I understand, they first train the Transformer at "maximum performance cost", because initially no tokens are pruned. The cost then drops as tokens get pruned, until it reaches the expected lighter, yet still accurate, training. So, judging from the images, I expect the maximum training cost at the start (which is exactly what I'm trying to avoid), with the cost decreasing as more tokens are pruned in Phase 3.
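To see why the cost drops as tokens are pruned, a common rule of thumb for the forward pass of one standard ViT encoder layer with N tokens of width D is about 12·N·D² + 2·N²·D multiply-accumulates (QKV and output projections, the two attention matrix products, and an MLP with hidden width 4D; softmax, LayerNorm and the backward pass are ignored). A small sketch, assuming that standard layer layout:

```python
def layer_macs(num_tokens: int, dim: int, mlp_ratio: int = 4) -> int:
    """Approximate multiply-accumulates for one ViT encoder layer (forward pass only)."""
    n, d = num_tokens, dim
    qkv = 3 * n * d * d              # Q, K, V projections
    attn = 2 * n * n * d             # Q @ K^T and attention weights @ V
    proj = n * d * d                 # output projection after attention
    mlp = 2 * mlp_ratio * n * d * d  # two linear layers, hidden width mlp_ratio * d
    return qkv + attn + proj + mlp


if __name__ == "__main__":
    full = layer_macs(197, 768)   # e.g. ViT-B/16 at 224x224: 196 patches + 1 class token
    pruned = layer_macs(98, 768)  # hypothetical: roughly half of the tokens pruned
    print(f"full: {full:,} MACs, pruned: {pruned:,} MACs, ratio: {pruned / full:.2f}")
```

At this size the 12·N·D² term dominates, so pruning half of the tokens cuts the per-layer cost to a little under half.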

My question is: is there a way I can "skip" the "maximum performance" Transformer training by reusing a pretrained model from somewhere else, like Segmenter on GitHub by Strudel et al.? But isn't that just called inference? How do I transfer the "context dictionary" from one model to another?

That way, I could transfer these "contexts" from an image I just ran inference on with the pretrained model to the Transformer in the SPViT algorithm.
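If what I'm after is really the pretrained *weights* rather than anything produced at inference time, I imagine the transfer would look roughly like this toy sketch: copy every parameter whose name and shape match, and leave anything new (such as a token-pruning module) or mismatched (such as a segmentation head with a different number of classes) at its initial values. The dictionaries and parameter names are hypothetical stand-ins for real checkpoints; in PyTorch, I believe this corresponds to filtering a checkpoint's `state_dict()` by name and shape and then calling `load_state_dict(..., strict=False)`.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a checkpoint: parameter name -> (shape, flat values).
StateDict = Dict[str, Tuple[Tuple[int, ...], List[float]]]


def transfer_weights(src: StateDict, dst: StateDict) -> Tuple[StateDict, List[str], List[str]]:
    """Copy every src parameter whose name and shape match a dst parameter.

    Returns an updated copy of dst, the dst keys that kept their original
    (e.g. freshly initialised) values, and the src keys that were not used.
    """
    updated = dict(dst)  # shallow copy, so dst itself is left untouched
    loaded = set()
    for name, (shape, values) in src.items():
        if name in dst and dst[name][0] == shape:
            updated[name] = (shape, list(values))
            loaded.add(name)
    not_loaded = [k for k in dst if k not in loaded]
    unused = [k for k in src if k not in loaded]
    return updated, not_loaded, unused


if __name__ == "__main__":
    pretrained = {
        "blocks.0.attn.qkv.weight": ((2, 2), [0.1, 0.2, 0.3, 0.4]),
        "head.weight": ((150, 2), [0.0] * 300),          # hypothetical: 150-class head
    }
    target = {
        "blocks.0.attn.qkv.weight": ((2, 2), [0.0] * 4),
        "token_selector.weight": ((1, 2), [0.0, 0.0]),  # hypothetical new pruning module
        "head.weight": ((21, 2), [0.0] * 42),           # hypothetical: 21-class head
    }
    updated, not_loaded, unused = transfer_weights(pretrained, target)
    print("still to train:", not_loaded, "| unused:", unused)
```

The parts listed in `not_loaded` would still need training (or fine-tuning), but only on top of an already-trained encoder.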

What should I actually be looking for?