Dear community,

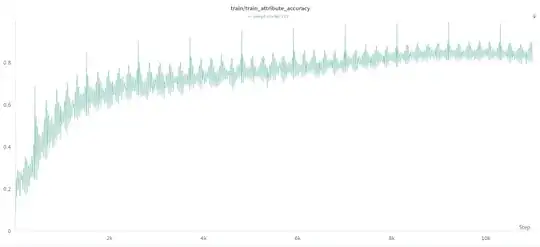

I am trying to reproduce the results of Allen-Zhu's Physics of Language Models paper, Part 3.1 (https://arxiv.org/abs/2309.14316). The paper trains a toy GPT-2 model on synthetic biographies of individuals and then runs further experiments on it. One important metric to track during training is the prediction accuracy on specific attributes mentioned in the texts, such as an individual's birthdate, major, employer, and so on. I expected this accuracy to rise steadily as training progresses, just like the overall next-token prediction accuracy. In practice, it is very unstable and shows a strange periodicity, as shown in the attached image. The distance between adjacent peaks is almost exactly half an epoch.

I am wondering what could be causing this. Could it be noise in the data? I do shuffle the data after each epoch, though. Or could it simply be that the learning rate is too high or the batch size is too small? The training hyperparameters are as follows:

- Training Steps: 80000 steps in total

- Model Architecture: GPT-2 with rotary position embeddings instead of the regular learned position embeddings (WPE), i.e. GPT-NeoX style; hidden_size 768, 12 heads, and 12 hidden_layers.

- Learning Rate: 1000-step warmup to 1e-3, then cosine decay, but never below 1e-4

- Optimizer: AdamW with weight decay 0.1, eps 1e-6

- Batch Size: 96

- Dataset Description: Biographies of different individuals are concatenated into samples of 512 tokens. Each time all 100,000 individuals have been traversed, their order is reshuffled.
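
For reference, the learning-rate schedule listed above can be sketched roughly as follows. This is a minimal sketch under assumptions, not the exact training code: it assumes linear warmup starting from 0 and a cosine that goes from 1e-3 down to the 1e-4 floor at the final step (another reading would be a cosine to 0 clipped at 1e-4). The name `lr_at` and the constants are illustrative only.

```python
import math

# Values taken from the hyperparameter list above.
TOTAL_STEPS = 80_000
WARMUP_STEPS = 1_000
PEAK_LR = 1e-3
MIN_LR = 1e-4


def lr_at(step: int) -> float:
    """Learning rate at a given 0-indexed optimizer step (hypothetical helper)."""
    if step < WARMUP_STEPS:
        # Assumption: warmup is linear and starts from 0.
        return PEAK_LR * step / WARMUP_STEPS
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = min(1.0, (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS))
    # Assumption: the cosine ends exactly at the MIN_LR floor.
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return MIN_LR + (PEAK_LR - MIN_LR) * cosine
```

Under these assumptions the learning rate is still above 5e-4 for roughly the first half of training, which may be relevant to the instability. Also, if the batch size of 96 counts sequences, each step covers 96 × 512 = 49,152 tokens, which makes it possible to convert the half-epoch period into a number of steps.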

Any suggestions or possible causes would be appreciated. Thank you for your time!