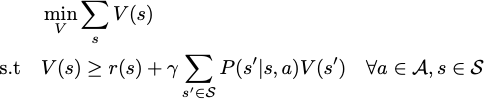

Aside from value iteration, we can use the following linear program to solve for the optimal value function of an MDP.

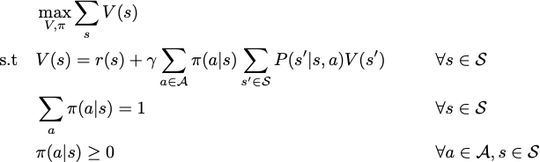
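For concreteness, here is a minimal sketch of that linear program in Python using `scipy.optimize.linprog`. It assumes the usual form: minimize $\sum_s w(s) V(s)$ subject to $V(s) \ge r(s,a) + \gamma \sum_{s'} P(s' \mid s,a) V(s')$ for every state-action pair. The two-state MDP (`P`, `r`, `gamma`) and the uniform weights `w` are made-up illustration values, not part of the original problem. The result is cross-checked against plain value iteration.

```python
import numpy as np
from scipy.optimize import linprog

# Hypothetical toy MDP: P[a, s, t] = Pr(next state t | state s, action a).
P = np.array([
    [[0.9, 0.1], [0.2, 0.8]],   # action 0
    [[0.5, 0.5], [0.6, 0.4]],   # action 1
])
r = np.array([[1.0, 0.0],       # r[s, a] = reward for action a in state s
              [0.0, 2.0]])
gamma = 0.9
n_actions, n_states, _ = P.shape

# Objective weights (assumed uniform here; must be strictly positive).
w = np.ones(n_states)

# V(s) >= r(s,a) + gamma * sum_t P(t|s,a) V(t) for all (s, a),
# rewritten for linprog as (gamma * P_a - I) V <= -r[:, a].
A_ub = np.vstack([gamma * P[a] - np.eye(n_states) for a in range(n_actions)])
b_ub = np.concatenate([-r[:, a] for a in range(n_actions)])

# V is a free variable, so override linprog's default bounds of (0, None).
res = linprog(c=w, A_ub=A_ub, b_ub=b_ub, bounds=[(None, None)] * n_states)
V_lp = res.x

# Cross-check: value iteration on the same MDP.
V_vi = np.zeros(n_states)
for _ in range(2000):
    Q = r + gamma * np.einsum("ast,t->sa", P, V_vi)  # Q[s, a]
    V_vi = Q.max(axis=1)
```

For this unconstrained LP, any strictly positive `w` yields the same solution, because the optimal value function is the componentwise smallest feasible point.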

I am planning to put some constraints on the policy class that I consider; for example, state 2 and state 3 have to select the same action. To do this, I want to add the policy explicitly as an optimization variable, so I created the following program, which has a linear objective but quadratic (specifically, bilinear) constraints.

To me, the constraints essentially perform policy evaluation, and the optimal policy is found through the maximization. However, when I put this optimization problem into Gurobi, the solver has a hard time converging. I am not sure whether the proposed formulation is correct. More specifically, I am not sure whether the weights in the objective function can be arbitrary. Can anyone shed some light on this?
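One way to sanity-check a solver's output on a small instance is to exploit the policy-evaluation reading directly: once the policy is fixed, the bilinear constraints become a linear system in $V$. The sketch below enumerates deterministic policies that satisfy the "state 2 and state 3 share an action" restriction, evaluates each with a linear solve, and keeps the one with the largest weighted value. The three-state MDP and the weights `w` are hypothetical, and restricting to deterministic policies is an assumption of this sketch; it is a brute-force check for tiny problems, not a substitute for the bilinear program.

```python
import itertools
import numpy as np

# Hypothetical 3-state, 2-action MDP: P[a, s, t] = Pr(t | s, a), r[s, a] = reward.
P = np.array([
    [[0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.3, 0.7]],   # action 0
    [[0.2, 0.5, 0.3], [0.4, 0.1, 0.5], [0.5, 0.0, 0.5]],   # action 1
])
r = np.array([[1.0, 0.0], [0.0, 1.5], [2.0, 0.5]])
gamma = 0.9
w = np.ones(3)  # objective weights (assumed uniform for illustration)
n_actions, n_states, _ = P.shape
states = np.arange(n_states)


def evaluate(policy):
    """Policy evaluation: solve (I - gamma * P_pi) V = r_pi for a deterministic policy."""
    policy = np.asarray(policy)
    P_pi = P[policy, states, :]   # row s is P(. | s, policy[s])
    r_pi = r[states, policy]
    return np.linalg.solve(np.eye(n_states) - gamma * P_pi, r_pi)


# Deterministic policies in which state 2 and state 3 (indices 1 and 2)
# are forced to pick the same action.
feasible = [p for p in itertools.product(range(n_actions), repeat=n_states)
            if p[1] == p[2]]

best_policy = max(feasible, key=lambda p: w @ evaluate(p))
best_value = evaluate(best_policy)
```

Rerunning the enumeration with a different strictly positive `w` and comparing `best_policy` gives a quick empirical check of whether the weights matter for a given restricted policy class.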