Gradient descent and back-propagation

In deep learning, gradient descent (GD) and back-propagation (BP) are used to update the weights of the neural network.

In reinforcement learning, one could map (state, action)-pairs to Q-values with a neural network. Then GD and BP can be used to update the weights of this neural network.

How to design the neural network?

In this context, a neural network can be designed in different ways. A few are listed below:

The state and action are concatenated and fed to the neural network. The neural network is trained to return a single Q-value belonging to the previously mentioned state and action.

For each action, there is a neural network that provides the Q-value given a state. This is not desirable when a lot of actions exist.

Another option is to construct a neural network that accepts as input the state. The output layer consists of $K$-units where $K$ is the number of possible actions. Each output unit is trained to return the Q-value for a particular action.

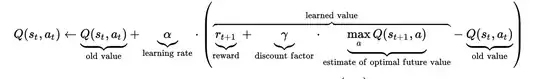

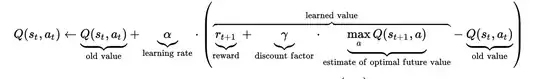

Q-learning update rule

This is the Q-learning update rule

So, we choose an action $a_t$ (for example, with $\epsilon$-greedy behavior policy) in the state $s_t$. Once the action $a_t$ has been taken, we end up in a new state $s_{t+1}$ and receive a reward $r_{t+1}$ associated with it. To perform the update, we also need to choose the Q-value in $s_{t+1}$ associated with the action $a$, i.e. $\max _{a} Q\left(s_{t+1}, a\right)$. Here, $\gamma$ is the discount factor and $\alpha$ is the learning rate.

Back-propagation and Q-learning

If we use a neural network to map states (or state-action pairs) to Q-values, we can use a similar update rule, but we use GD and BP to update the weights of this neural network.

Here's a possible implementation of a function that would update the weights of such a neural network.

def update(self, old_state, old_action, new_state,

reward, isFinalState = False):

The neural network has a learning rate associated with it.

It is advised not to use two learning rates

learningRate = 1

Obtain the old value

old_Q = self.getQ(old_state, old_action)

Obtain the max Q-value

new_Q = -1000000

action = 0

for a in self.action_set:

q_val = self.getQ(new_state, a)

if (q_val > new_Q):

new_Q = q_val

action = a

In the final state there is no action to be chosen

if isFinalState:

diff = learningRate * (reward - old_Q)

else:

diff = learningRate * (reward + self.discount * new_Q - old_Q)

Compute the target

target = old_Q + diff

Update the Q-value using backpropagation

self.updateQ(action, old_state, target)

In the pseudocode and in the Q-learning update formula above, you can see the discount factor $\gamma$. This simply denotes whether we are interested in an immediate reward or a more rewarding and enduring reward later on. This value is often set to 0.9.