I am new to RAG applications. I am currently working on a RAG application that has to ingest PDF invoice documents, fill in some data in a JSON structure, and store the result in a NoSQL DB.

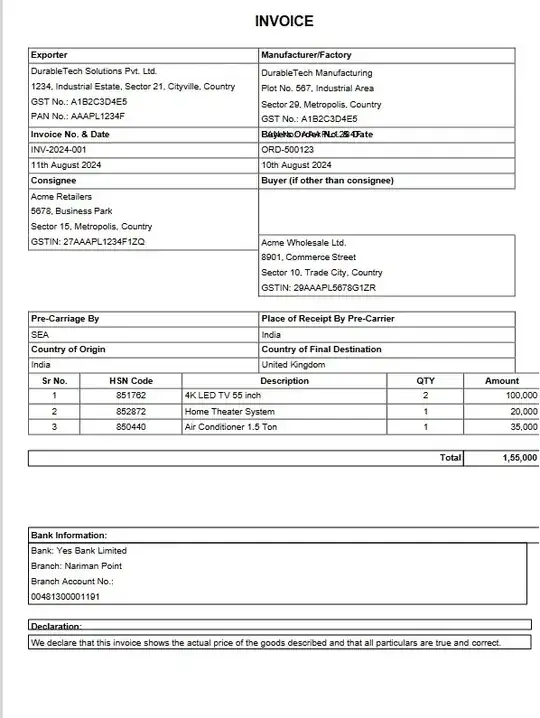

My PDF invoices mostly contain table-based content: either a single table or multiple tables with rows and columns. I will fetch each data element, convert it to JSON, and store it in the NoSQL DB.

I am confused about which chunking process to use for a PDF that mostly consists of tables and boxes or placeholders that hold the data. I want to get the most meaningful chunks, which will probably be stored in a vector database; after that I would run a similarity search and use the retrieved data to prepare the standard JSON.
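To make the question concrete, here is a minimal Python sketch of what a table-aware, row-level chunk could look like, as opposed to splitting the text by size. It assumes the tables have already been extracted into a header list and a list of rows; the function name `table_to_row_chunks`, the metadata keys, and the sample values are hypothetical and not taken from the attached invoice.

```python
import json


def table_to_row_chunks(table_name, header, rows):
    """Turn each table row into one self-describing chunk (hypothetical helper)."""
    chunks = []
    for index, row in enumerate(rows):
        # Pair every cell with its column header so the row makes sense on its own.
        record = dict(zip(header, row))
        text = f"{table_name}: " + "; ".join(f"{k}: {v}" for k, v in record.items())
        chunks.append(
            {
                "text": text,  # the string that would be embedded
                "metadata": {"table": table_name, "row": index, "fields": record},
            }
        )
    return chunks


# Made-up sample data, only for illustration.
header = ["Description", "Quantity", "Unit Price"]
rows = [["Widget A", "2", "10.00"], ["Widget B", "1", "25.50"]]

chunks = table_to_row_chunks("Line items", header, rows)
print(json.dumps(chunks[0], indent=2))
```

In this sketch the `text` field is what would be embedded, while `metadata["fields"]` keeps the structured values that could later be copied into the target JSON. Whether row-level chunks like this are actually a good fit for invoices is part of what I am asking.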

What I tried:

Following https://medium.com/@anuragmishra_27746/five-levels-of-chunking-strategies-in-rag-notes-from-gregs-video-7b735895694d, I tried Agentic Chunking, but the output was not satisfactory because my PDF mostly contains table data and data in boxes, as you can see in the attachment.

Can anyone suggest a suitable approach for chunking and embedding that I can use for this type of document, where the data is mostly given in tables or boxes with a heading followed by a description, as shown in the attachment? Thanks in advance.