I'm generating a set of ground-truth density maps for an object counting task involving several object classes. The objects are labeled with bounding boxes (i.e., `[class, x, y, w, h]`), which vary in size with object type and camera angle. The bounding boxes are only there to help create the ground truth: the goal of the task is to predict density maps, not bounding boxes.

I've seen a lot of literature discussing "geometry-adaptive kernels", but they are mostly applied to crowds of people. In those scenarios, the kernels are centered on points marking heads, and the geometry-adaptive part comes from the distribution of the points (how far apart neighbouring people are) rather than from the size of each object.

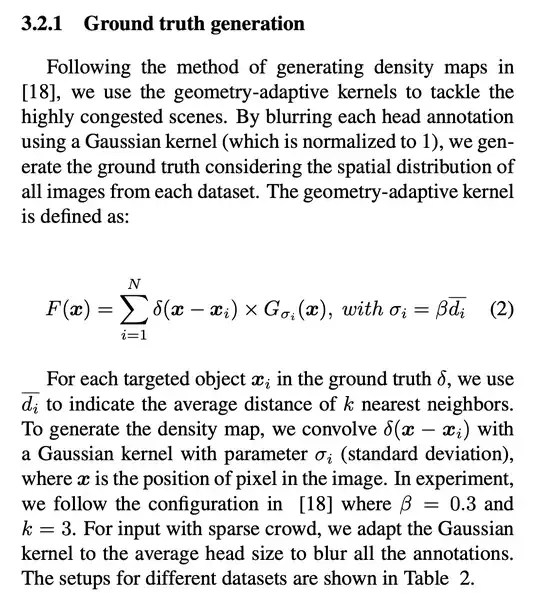

Section 3.2.1 of Li et al. (2018) is an example (see the screenshot above): https://openaccess.thecvf.com/content_cvpr_2018/papers/Li_CSRNet_Dilated_Convolutional_CVPR_2018_paper.pdf
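
For reference, here is a minimal sketch of how I understand that point-based, geometry-adaptive approach: each annotated point becomes a unit impulse blurred by a Gaussian whose width is proportional to the mean distance to its k nearest neighbours. The function name, the `fallback_sigma` and `min_sigma` parameters, and the defaults `k=3` and `beta=0.3` are my own assumptions (the last two are the values I recall being used in the paper), so treat this as an illustration rather than the authors' implementation.

```python
import numpy as np
from scipy.ndimage import gaussian_filter
from scipy.spatial import cKDTree


def geometry_adaptive_density(points, shape, k=3, beta=0.3,
                              fallback_sigma=15.0, min_sigma=1.0):
    """Build a density map from point annotations.

    points: iterable of (x, y) pixel coordinates (e.g. head centres).
    shape:  (height, width) of the output map.
    k, beta, fallback_sigma, min_sigma: assumed hyperparameters.
    """
    height, width = shape
    density = np.zeros(shape, dtype=np.float64)
    points = np.asarray(points, dtype=np.float64).reshape(-1, 2)
    n = len(points)
    if n == 0:
        return density

    if n > 1:
        # Query k+1 neighbours because the nearest "neighbour" of each
        # point is the point itself (distance 0).
        kk = min(k, n - 1)
        dists, _ = cKDTree(points).query(points, k=kk + 1)
        mean_dist = dists[:, 1:].mean(axis=1)

    for i, (x, y) in enumerate(points):
        col = min(max(int(round(x)), 0), width - 1)
        row = min(max(int(round(y)), 0), height - 1)
        impulse = np.zeros(shape, dtype=np.float64)
        impulse[row, col] = 1.0
        # Kernel width follows local point spacing, not object size.
        sigma = beta * mean_dist[i] if n > 1 else fallback_sigma
        sigma = max(sigma, min_sigma)
        # The default 'reflect' boundary mode keeps each kernel's mass
        # inside the image, so the map still sums to the object count.
        density += gaussian_filter(impulse, sigma)
    return density


points = [(10, 10), (20, 10), (10, 20), (40, 40)]
density = geometry_adaptive_density(points, (64, 64))
```

Filtering a full-size impulse image per point is slow but keeps the sketch short. Note that nothing here uses `w`, `h`, or `class` from the bounding boxes, which is exactly the gap I'm asking about.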

Does anyone know of techniques that use more complex kernel estimation for generating object density maps, taking object size, object class, and/or camera angle into account? Thanks.