I am trying to implement WGANs from scratch.

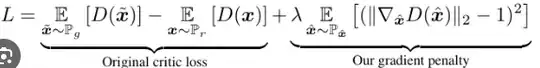

The loss function for the critic is given by :
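Assuming the image shows the standard critic objective from the WGAN-GP paper (Gulrajani et al., 2017), it reads as follows, with x a real sample, x̃ = G(z) a generated one, and x̂ a random interpolate between them. The critic minimizes this quantity, which matches the -1/n (real) and +1/n (fake) output gradients described in this question:

$$
L = \mathbb{E}_{\tilde{x} \sim \mathbb{P}_g}\big[D(\tilde{x})\big]
  - \mathbb{E}_{x \sim \mathbb{P}_r}\big[D(x)\big]
  + \lambda\, \mathbb{E}_{\hat{x} \sim \mathbb{P}_{\hat{x}}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1 - \epsilon)\tilde{x},\ \ \epsilon \sim U[0, 1]
$$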

I implement this in my code as `L = mean(fake output) - mean(real output) + lambda * GP`, which the critic minimizes.

To calculate GP, I backpropagate ones through the critic to get the gradient with respect to the interpolated images, then compute `(l2_norm(gradient) - 1)^2`.
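That step can be sketched in NumPy for a hypothetical one-hidden-layer tanh critic D(x) = v·tanh(Wx + b) + c; the critic, its sizes, and the helper names are assumptions for illustration only. Seeding every output with 1 and backpropagating down to the input gives g_i = ∇_x D(x̂_i) for all interpolates at once, and GP is then the batch mean of (‖g_i‖₂ − 1)².

```python
import numpy as np

# Hypothetical toy critic D(x) = v . tanh(W x + b) + c standing in for a real network.
rng = np.random.default_rng(0)
n, d, hid = 5, 3, 4
W, b, v, c = rng.normal(size=(hid, d)), rng.normal(size=hid), rng.normal(size=hid), 0.1

def critic(X):
    A = np.tanh(X @ W.T + b)        # (n, hid) hidden activations
    return A @ v + c, A             # (n,) scores plus cached activations

def input_gradient(X):
    """Backpropagate a seed of ones from every critic output down to its input."""
    _, A = critic(X)
    seed = np.ones(len(X))                      # dD_i/dD_i = 1 for each sample
    dZ = seed[:, None] * v * (1.0 - A ** 2)     # through the output layer and tanh
    return dZ @ W                               # (n, d): row i is grad_x D(x_hat_i)

real, fake = rng.normal(size=(n, d)), rng.normal(size=(n, d))
eps = rng.uniform(size=(n, 1))                  # one mixing weight per sample
x_hat = eps * real + (1.0 - eps) * fake         # interpolated samples

G = input_gradient(x_hat)
gp = np.mean((np.linalg.norm(G, axis=1) - 1.0) ** 2)   # GP without the lambda factor
```

This yields only the value of GP. Its gradient with respect to W, b and v still has to be propagated back through `input_gradient` itself, because that function depends on the weights.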

I understand that PyTorch and TensorFlow use automatic differentiation to compute the gradients of the loss function. However, I am trying to backpropagate the gradient of the loss manually.

For the vanilla WGAN, the gradient I backpropagate from the critic output is -1/n for each real image and +1/n for each fake image, where n is the batch size. However, I don't understand how to compute the gradient of the loss once the gradient penalty is included.
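The vanilla part can be sketched in NumPy with the same kind of hypothetical tanh critic (names and sizes are illustrative assumptions). The output is seeded with -1/n per real sample and +1/n per fake sample and backpropagated to the weights. Because backprop is linear in the seed, the two contributions can be computed separately and added.

```python
import numpy as np

# Hypothetical toy critic D(x) = v . tanh(W x + b) + c with parameters in a dict.
rng = np.random.default_rng(1)
n, d, hid = 4, 3, 5
params = {"W": rng.normal(size=(hid, d)), "b": rng.normal(size=hid),
          "v": rng.normal(size=hid), "c": np.array(0.2)}

def critic(p, X):
    A = np.tanh(X @ p["W"].T + p["b"])
    return A @ p["v"] + p["c"], A

def backprop(p, X, seed):
    """Parameter gradients of sum_i seed_i * D(x_i) for a given output seed."""
    _, A = critic(p, X)
    dZ = seed[:, None] * p["v"] * (1.0 - A ** 2)   # (n, hid) gradient at pre-activations
    return {"W": dZ.T @ X, "b": dZ.sum(axis=0),
            "v": A.T @ seed, "c": seed.sum()}

def wgan_loss(p, real, fake):
    # The critic minimizes mean D(fake) - mean D(real).
    return critic(p, fake)[0].mean() - critic(p, real)[0].mean()

real, fake = rng.normal(size=(n, d)), rng.normal(size=(n, d))
g_real = backprop(params, real, np.full(n, -1.0 / n))   # seed -1/n for real images
g_fake = backprop(params, fake, np.full(n, +1.0 / n))   # seed +1/n for fake images
grads = {k: g_real[k] + g_fake[k] for k in params}       # contributions simply add up
```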

**How exactly do you apply the gradient penalty after calculating it? What exactly should you backpropagate?**
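A sketch of the chain rule for the penalty term, under assumed notation: θ stands for all critic weights, g_i = ∇_x D(x̂_i; θ) is the input gradient for interpolate i, and the x̂_i are treated as constants.

$$
\mathrm{GP}(\theta) = \frac{1}{n}\sum_{i=1}^{n}\big(\lVert g_i \rVert_2 - 1\big)^2,
\qquad g_i = \nabla_{x} D(\hat{x}_i;\theta)
$$

$$
r_i = \frac{\partial\,(\lambda\,\mathrm{GP})}{\partial g_i}
    = \frac{2\lambda}{n}\big(\lVert g_i \rVert_2 - 1\big)\frac{g_i}{\lVert g_i \rVert_2}
$$

$$
\frac{\partial\,(\lambda\,\mathrm{GP})}{\partial \theta}
  = \sum_{i=1}^{n}\Big(\frac{\partial g_i}{\partial \theta}\Big)^{\top} r_i
  = \nabla_{\theta}\sum_{i=1}^{n} r_i^{\top}\,\nabla_{x} D(\hat{x}_i;\theta)\ \Big|_{r_i\ \text{fixed}}
$$

So the penalty is not applied by seeding the critic output with a number, as ±1/n is. Instead, r_i has to be pushed back through the computation that produced g_i, which is itself a backward pass. This brings in second derivatives of the critic (for a tanh unit, d/dz (1 − tanh² z) = −2 tanh z (1 − tanh² z)), a procedure usually called double backpropagation. The full critic gradient is this term plus the gradient of the Wasserstein part.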

Is there another way to implement this by hand without automatic differentiation, or am I misunderstanding something? Any help is appreciated.
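One way to stay strictly first-order is to approximate the mixed second derivative with a central finite difference. This is a swapped-in numerical technique, not what the paper or the repo does: r_i·∇_x D(x̂_i) ≈ [D(x̂_i + h·u_i) − D(x̂_i − h·u_i)]·‖r_i‖/(2h) with u_i = r_i/‖r_i‖, so the penalty's weight gradient becomes the difference of two ordinary backward passes. The toy critic, the step h, and all function names below are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy critic D(x) = v . tanh(W x + b) + c; only first-order backprop is used.
rng = np.random.default_rng(2)
n, d, hid, lam = 6, 3, 5, 10.0
params = {"W": rng.normal(size=(hid, d)), "b": rng.normal(size=hid),
          "v": rng.normal(size=hid), "c": np.array(0.0)}

def backward(p, X, seed):
    """Gradients of sum_i seed_i * D(x_i) w.r.t. the parameters and w.r.t. the inputs."""
    A = np.tanh(X @ p["W"].T + p["b"])
    dZ = seed[:, None] * p["v"] * (1.0 - A ** 2)
    param_grads = {"W": dZ.T @ X, "b": dZ.sum(axis=0), "v": A.T @ seed, "c": seed.sum()}
    return param_grads, dZ @ p["W"]            # input gradients have shape (n, d)

def gradient_penalty(p, x_hat):
    """lambda * GP, with GP computed from the input gradients (seed of ones)."""
    _, G = backward(p, x_hat, np.ones(len(x_hat)))
    return lam * np.mean((np.linalg.norm(G, axis=1) - 1.0) ** 2)

def gp_grad_finite_difference(p, x_hat, h=1e-4):
    """Approximate d(lambda*GP)/dtheta with two first-order backward passes."""
    n_batch = len(x_hat)
    _, G = backward(p, x_hat, np.ones(n_batch))
    norms = np.maximum(np.linalg.norm(G, axis=1, keepdims=True), 1e-12)
    R = (2.0 * lam / n_batch) * (norms - 1.0) * G / norms    # r_i = dGP/dg_i, held fixed
    r_len = np.linalg.norm(R, axis=1)                        # ||r_i||, used as the output seed
    U = R / np.maximum(r_len[:, None], 1e-12)                # unit step direction per sample
    plus, _ = backward(p, x_hat + h * U, r_len)
    minus, _ = backward(p, x_hat - h * U, r_len)
    return {k: (plus[k] - minus[k]) / (2.0 * h) for k in p}

real, fake = rng.normal(size=(n, d)), rng.normal(size=(n, d))
eps = rng.uniform(size=(n, 1))
x_hat = eps * real + (1.0 - eps) * fake
gp_grads = gp_grad_finite_difference(params, x_hat)   # approximate d(lambda*GP)/dtheta
```

Stepping along the unit direction and moving ‖r_i‖ into the seed keeps h independent of how large r_i is. The cost is two extra passes and an approximation error of order h². The exact alternative is to differentiate the backward pass itself.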

Edit: according to this repo, the critic is trained like this:

```python
# Train with real images
d_loss_real = self.D(images)
d_loss_real = d_loss_real.mean()
d_loss_real.backward(mone)  # mone presumably holds -1: accumulates the grad of -mean(D(real))

# Train with fake images
z = self.get_torch_variable(torch.randn(self.batch_size, 100, 1, 1))
fake_images = self.G(z)
d_loss_fake = self.D(fake_images)
d_loss_fake = d_loss_fake.mean()
d_loss_fake.backward(one)  # one presumably holds +1: accumulates the grad of +mean(D(fake))

# Train with gradient penalty
gradient_penalty = self.calculate_gradient_penalty(images.data, fake_images.data)
gradient_penalty.backward()
```

But what exactly happens in `gradient_penalty.backward()`?
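In PyTorch WGAN-GP code, `calculate_gradient_penalty` usually obtains the input gradients with `torch.autograd.grad(..., create_graph=True)`. That keeps the gradient computation itself in the autograd graph, so `gradient_penalty.backward()` differentiates through it (double backpropagation) and adds d(λ·GP)/dθ into each parameter's `.grad`, on top of what the two earlier `backward` calls left there. The NumPy sketch below writes that second pass out by hand for a hypothetical one-hidden-layer tanh critic; the names are illustrative, and the final three loops mimic the three accumulating `backward()` calls.

```python
import numpy as np

# Hypothetical toy critic D(x) = v . tanh(W x + b) + c, differentiated entirely by hand.
rng = np.random.default_rng(3)
n, d, hid, lam = 6, 3, 5, 10.0
params = {"W": rng.normal(size=(hid, d)), "b": rng.normal(size=hid),
          "v": rng.normal(size=hid), "c": np.array(0.0)}

def backward(p, X, seed):
    """Param grads of sum_i seed_i * D(x_i); plays the role of D(x).backward(seed)."""
    A = np.tanh(X @ p["W"].T + p["b"])
    dZ = seed[:, None] * p["v"] * (1.0 - A ** 2)
    return {"W": dZ.T @ X, "b": dZ.sum(axis=0), "v": A.T @ seed, "c": seed.sum()}

def penalty_and_grads(p, x_hat):
    """lambda * GP and its exact parameter gradients via a hand-written double backward."""
    W, b, v = p["W"], p["b"], p["v"]
    A = np.tanh(x_hat @ W.T + b)                     # (n, hid) activations
    S = 1.0 - A ** 2                                 # tanh'(z)
    U = v * S                                        # dD/dz for a seed of ones
    G = U @ W                                        # (n, d): g_i = grad_x D(x_hat_i)
    norms = np.maximum(np.linalg.norm(G, axis=1, keepdims=True), 1e-12)
    gp = lam * np.mean((norms[:, 0] - 1.0) ** 2)
    R = (2.0 * lam / len(x_hat)) * (norms - 1.0) * G / norms   # r_i = d(lambda*GP)/dg_i
    # Second backward pass, through G = U @ W, U = v * S and S = 1 - tanh(z)^2.
    dW = U.T @ R                                     # W used directly in G = U @ W
    dU = R @ W.T
    dv = (dU * S).sum(axis=0)
    dZ = dU * v * (-2.0 * A * S)                     # d(1 - tanh^2)/dz = -2 tanh (1 - tanh^2)
    dW += dZ.T @ x_hat                               # W also enters through z = W x + b
    return gp, {"W": dW, "b": dZ.sum(axis=0), "v": dv, "c": np.array(0.0)}

real, fake = rng.normal(size=(n, d)), rng.normal(size=(n, d))
eps = rng.uniform(size=(n, 1))
x_hat = eps * real + (1.0 - eps) * fake              # constants, like images.data

# Mirror the three backward() calls: each one adds into the same zeroed gradient buffer.
grad = {k: np.zeros_like(val) for k, val in params.items()}
for k, g in backward(params, real, np.full(n, -1.0 / n)).items():   # backward(mone)
    grad[k] += g
for k, g in backward(params, fake, np.full(n, 1.0 / n)).items():    # backward(one)
    grad[k] += g
gp, gp_grads = penalty_and_grads(params, x_hat)
for k, g in gp_grads.items():                                       # gradient_penalty.backward()
    grad[k] += g
```

Because gradients accumulate, `grad` ends up equal to the gradient of mean D(fake) − mean D(real) + λ·GP, which is what the optimizer step then uses.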