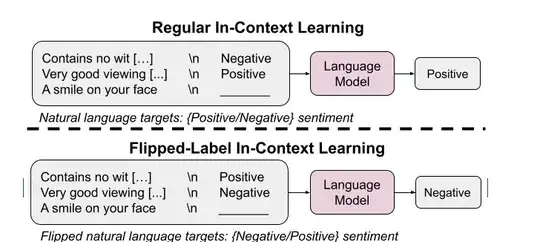

I came across a Google Research blog post (https://research.google/blog/larger-language-models-do-in-context-learning-differently/) about large language models (LLMs) and how their prior knowledge can be overridden through in-context examples. The authors use examples whose labels contradict prior knowledge, a setup they call flipped-label in-context learning (for example, sentences containing positive sentiment labeled as “negative sentiment”).
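To make the setup concrete, here is a minimal sketch of how I understand a flipped-label prompt to be built. The example sentences, the `Input:`/`Label:` template, and the `build_prompt` helper are my own illustrative assumptions and are not taken from the blog post.

```python
# Hypothetical few-shot examples with their "true" sentiment labels.
EXAMPLES = [
    ("This movie was wonderful.", "positive"),
    ("I hated every minute of it.", "negative"),
    ("What a delightful surprise.", "positive"),
]

# Mapping used to invert each label so it contradicts prior knowledge.
FLIP = {"positive": "negative", "negative": "positive"}


def build_prompt(examples, query, flip_labels=False):
    """Build a few-shot prompt; optionally show every label flipped."""
    blocks = []
    for text, label in examples:
        shown = FLIP[label] if flip_labels else label
        blocks.append(f"Input: {text}\nLabel: {shown}\n")
    # The query is left unlabeled so the model has to complete it.
    blocks.append(f"Input: {query}\nLabel:")
    return "\n".join(blocks)


prompt = build_prompt(EXAMPLES, "The food was excellent.", flip_labels=True)
print(prompt)
```

A model that follows the in-context mapping would complete the last line with "negative", while one that relies on its priors would answer "positive". The weights are identical in both cases; only the text in the context window differs.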

I'm curious: how is the model able to "learn" (override its priors) without changing its weights? Specifically, how does it adjust its understanding when faced with contradictory labels, such as positive sentences labeled as negative?