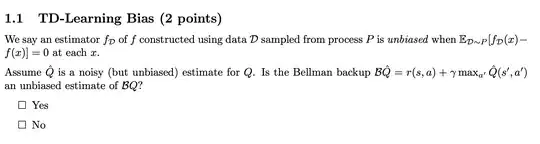

This comes from CS285 (Fall 2023), HW3.

In my opinion, if $\hat{Q}$ is an unbiased estimate of $Q$, then
$$
\begin{align}
\mathbb{E}_{D \sim P}[B_{D}\hat{Q} - B_{D}Q] &= \mathbb{E}_{D \sim P}\left[r(s,a) + \gamma \max_{a'}\hat{Q}(s', a') - r(s,a) - \gamma \max_{a'}Q(s', a')\right]\\
&= \mathbb{E}_{D \sim P}\left[\gamma \max_{a'}\hat{Q}(s', a') - \gamma \max_{a'}Q(s', a')\right]\\
&= 0.
\end{align}
$$
So $B_{D}\hat{Q}$ is an unbiased estimate of $B_{D}Q$, and the answer is yes. Is this right?
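The step from the second line to the third assumes $\mathbb{E}[\max_{a'}\hat{Q}(s', a')] = \max_{a'}Q(s', a')$ whenever each $\hat{Q}(s', a')$ is unbiased. One way to sanity-check that step is a small Monte Carlo experiment. This is only a sketch under assumed conditions: a single fixed next state $s'$, four actions whose true values are all 1.0, and $\hat{Q}$ modeled as $Q$ plus independent zero-mean Gaussian noise. The names `simulate`, `q_true`, and `noise_std` are hypothetical and not part of the homework code.

```python
import random


def simulate(q_true, noise_std=1.0, n_trials=100_000, seed=0):
    """Monte Carlo estimates of E[Qhat(s', a')] per action and of E[max_a' Qhat(s', a')].

    Assumption: Qhat(s', a') = Q(s', a') + independent N(0, noise_std^2) noise,
    so every individual Qhat(s', a') is an unbiased estimate of Q(s', a').
    """
    rng = random.Random(seed)
    sum_per_action = [0.0] * len(q_true)
    sum_max = 0.0
    for _ in range(n_trials):
        # One noisy draw of Qhat(s', .) for all actions.
        q_hat = [q + rng.gauss(0.0, noise_std) for q in q_true]
        for i, v in enumerate(q_hat):
            sum_per_action[i] += v
        # The Bellman target uses the max over actions of the noisy estimate.
        sum_max += max(q_hat)
    mean_per_action = [s / n_trials for s in sum_per_action]
    return mean_per_action, sum_max / n_trials


q_true = [1.0, 1.0, 1.0, 1.0]  # hypothetical Q(s', a') for 4 actions
gamma = 0.99                   # hypothetical discount factor

mean_q_hat, mean_max_q_hat = simulate(q_true)
gap = gamma * (mean_max_q_hat - max(q_true))

print("E[Qhat(s',a')] per action:", [round(m, 3) for m in mean_q_hat])
print("max_a' Q(s',a')          :", max(q_true))
print("E[max_a' Qhat(s',a')]    :", round(mean_max_q_hat, 3))
print("gamma * (E[max Qhat] - max Q):", round(gap, 3))
```

In this setup the per-action means come out close to 1.0 (each $\hat{Q}(s', a')$ is unbiased), while the mean of the max lands about 1.03 above $\max_{a'}Q(s', a')$, the expected maximum of four independent standard normals. This matches Jensen's inequality: because $\max$ is convex, $\mathbb{E}[\max_{a'}\hat{Q}(s', a')] \ge \max_{a'}\mathbb{E}[\hat{Q}(s', a')]$, with strict inequality here since the noise is independent across actions.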