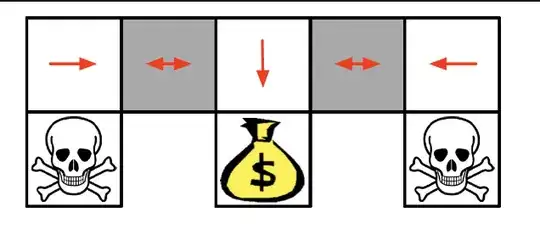

A grid world example I have often seen used to show the benefit of a stochastic policy is the one pictured here: five blocks in a row. The first, third, and fifth are white (distinguishable states), and the second and fourth are gray (to the agent these two states are equivalent and cannot be told apart). The agent gets a positive reward if it goes down in the middle state and a negative reward if it goes down in the first or fifth state. The usual argument is that in the gray states it is best to assign probability 0.5 to each of the left and right actions, and that this is only possible with a stochastic policy.
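To make the usual argument concrete, here is a minimal sketch of the corridor. The state indices 0 to 4, the fixed choices in the white states (move right from the first state, move left from the fifth, go down in the middle), and the `run_episode` helper are my own assumptions for illustration; only the policy in the two gray states is varied.

```python
import random

GRAY = {1, 3}   # the two aliased states: the agent must act the same in both
GOAL = 2        # middle state, where going down gives the positive reward

def run_episode(p_left_in_gray, start, rng, max_steps=100):
    """Return the number of moves needed to reach the middle state, or None.

    p_left_in_gray is the probability of choosing left in a gray state;
    right is chosen otherwise (down is left out of this sketch).
    """
    state = start
    for t in range(max_steps):
        if state == GOAL:
            return t  # the agent would go down here and collect the reward
        if state in GRAY:
            move = -1 if rng.random() < p_left_in_gray else +1
        else:
            # assumed white-state behavior: head toward the middle
            move = +1 if state == 0 else -1
        state += move
    return None  # never reached the middle state within max_steps

rng = random.Random(0)
for name, p_left in (("always left", 1.0), ("always right", 0.0), ("50/50", 0.5)):
    results = [run_episode(p_left, start, rng) for start in (0, 1, 3, 4)]
    print(name, results)
```

Under these assumptions, a deterministic choice in the gray states gets stuck bouncing between a gray state and the white state at one end, while the 50/50 policy eventually reaches the middle from any start.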

Let the action values be Left = 0, Down = 1, and Right = 2, and let all three actions be available in every state, so that taking the down action in a gray state just leaves the agent where it is, with a negative reward. My question is: for the gray states, if we use a Gaussian distribution for the policy, can we assign probability 0.5 to each of the left and right actions? Since we can only adjust the mean and the variance, wouldn't the probability of choosing the down action naturally end up quite high?
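Here is a small sketch of what I mean. It assumes one particular way of turning a Gaussian sample into a discrete action, namely rounding to the nearest action value (below 0.5 is left, 0.5 to 1.5 is down, above 1.5 is right). That binning rule and the helper names `normal_cdf` and `action_probs` are my own assumptions, not something taken from a specific paper.

```python
import math

def normal_cdf(x, mean, std):
    """CDF of a normal distribution, computed with the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))

def action_probs(mean, std):
    """Probabilities of (left, down, right) under the assumed binning.

    A sample a ~ N(mean, std^2) is mapped to left if a < 0.5,
    down if 0.5 <= a < 1.5, and right if a >= 1.5.
    """
    p_left = normal_cdf(0.5, mean, std)
    p_right = 1.0 - normal_cdf(1.5, mean, std)
    p_down = 1.0 - p_left - p_right
    return p_left, p_down, p_right

# Mean fixed at 1 (the "down" value) so that left and right are symmetric.
for std in (0.5, 1.0, 5.0, 50.0):
    left, down, right = action_probs(mean=1.0, std=std)
    print(f"std={std:5.1f}  left={left:.3f}  down={down:.3f}  right={right:.3f}")
```

With the mean at 1, left and right get equal probability by symmetry, but under this binning the down probability stays strictly positive for any finite standard deviation, so an exact 0.5/0.5 split is not reachable; it can only shrink as the standard deviation grows.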

I find it interesting that most RL papers seem to use a Gaussian distribution for the stochastic policy, yet that distribution apparently cannot even solve this simple setup, which is often used to teach the benefits of stochastic policies. Or am I wrong?