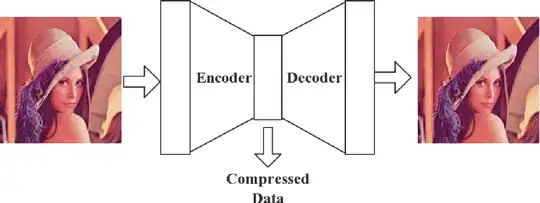

When a single image is assigned for training, an autoencoder should be able to use gradient descent to find a set of weights that reconstructs this image.
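As a rough illustration of this single-image case, here is a minimal NumPy sketch. It assumes a tiny linear autoencoder (one encoder matrix, one decoder matrix, no nonlinearity) and a random 16-value vector standing in for a flattened image. The names `train_single_image` and `reconstruction_error`, the layer sizes, and the learning rate are all hypothetical choices made for the example, not anything taken from a particular library or from the setup in the figures.

```python
import numpy as np

def train_single_image(x, hidden=4, lr=0.01, steps=2000, seed=0):
    """Fit a linear autoencoder (decoder @ encoder) to one flattened image x."""
    rng = np.random.default_rng(seed)
    d = x.size
    enc = 0.1 * rng.standard_normal((hidden, d))  # encoder weights (assumed shape)
    dec = 0.1 * rng.standard_normal((d, hidden))  # decoder weights (assumed shape)
    for _ in range(steps):
        h = enc @ x                        # latent code
        r = dec @ h - x                    # reconstruction residual
        grad_dec = np.outer(r, h)          # gradient of 0.5*||r||^2 w.r.t. dec
        grad_enc = np.outer(dec.T @ r, x)  # gradient of 0.5*||r||^2 w.r.t. enc
        dec -= lr * grad_dec               # plain gradient-descent updates
        enc -= lr * grad_enc
    return enc, dec

def reconstruction_error(enc, dec, x):
    """Mean squared error between x and its reconstruction."""
    return float(np.mean((dec @ (enc @ x) - x) ** 2))

rng = np.random.default_rng(1)
image_1 = rng.random(16)  # stand-in for a flattened 4x4 image
enc, dec = train_single_image(image_1)
err_1 = reconstruction_error(enc, dec, image_1)
print(f"image 1 reconstruction MSE: {err_1:.2e}")
```

The `reconstruction_error` helper takes the weights and any flattened image of the same size, so the trained `enc` and `dec` can be evaluated on another image without retraining.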

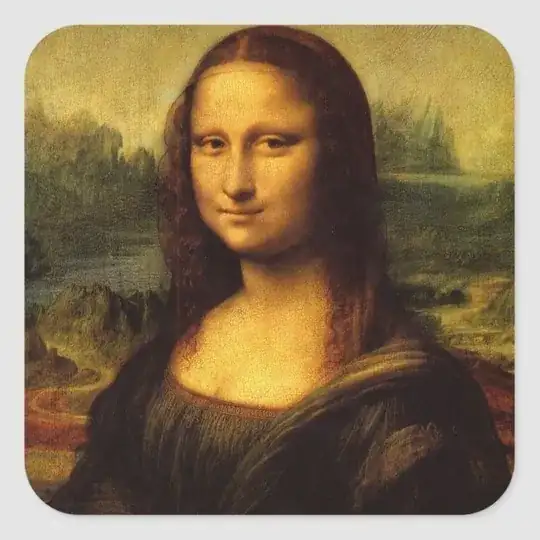

Suppose a second image is now assigned to the autoencoder:

Can the weights previously trained on image 1 be reused as they are, without further training, to reconstruct the second image as well?