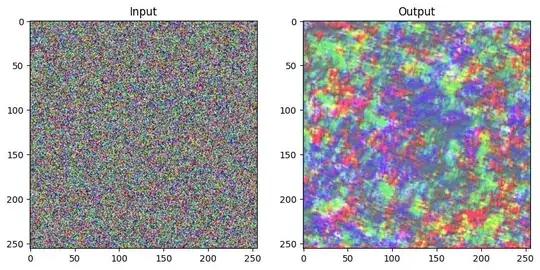

I am developing a particular implementation of a VAE and, as one usually does when implementing any architecture, I passed a random input through the model to test that everything worked fine (e.g. check for size mismatches, device shenanigans, etc.). While I usually just check that the input, intermediate and final shapes are correct, this time I decided to also visualize the output on that random input, not expecting much more than pure noise.

And while of course it isn't anything semantically meaningful (especially since the input itself technically carries no information), it also isn't complete garbage: it looks far less random than the input, and it clearly has regions where one tone prevails over the others.

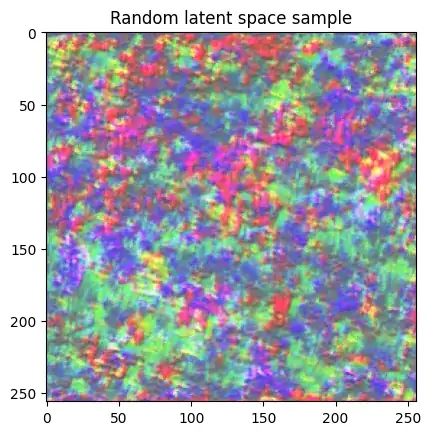

Out of curiosity, I also tested just the decoder part of the network, by passing it a latent sample drawn from a normal distribution.

It seems clear that the main culprit of this curious behaviour is the decoder network, which leads me to believe that something similar may also happen with decoder-based models such as GANs.
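To put a rough number on "less random than the input", here is a minimal sketch. It is not my actual decoder: it assumes a toy single-channel stack of nearest-neighbour 2x upsampling, a random 3x3 convolution and tanh, and every name in it (`random_decoder_output`, `neighbour_correlation`, the kernel scale of 1/3) is made up for illustration. It compares the correlation between horizontally adjacent pixels in the toy decoder's output with the same statistic for plain Gaussian noise, averaged over a few random initializations.

```python
import math
import random


def upsample2x(img):
    """Nearest-neighbour 2x upsampling of a 2D list of floats."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]  # duplicate each column
        out.append(wide)
        out.append(list(wide))                     # duplicate each row
    return out


def conv3x3_tanh(img, kernel):
    """3x3 'same' convolution with zero padding, followed by tanh."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(3):
                for b in range(3):
                    y, x = i + a - 1, j + b - 1
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[a][b] * img[y][x]
            out[i][j] = math.tanh(acc)
    return out


def random_decoder_output(rng, latent_size=4, stages=3):
    """Push a Gaussian latent grid through an untrained upsample+conv stack.

    Hypothetical toy model: one channel, fresh random kernel per stage.
    """
    img = [[rng.gauss(0, 1) for _ in range(latent_size)]
           for _ in range(latent_size)]
    for _ in range(stages):
        kernel = [[rng.gauss(0, 1 / 3) for _ in range(3)] for _ in range(3)]
        img = conv3x3_tanh(upsample2x(img), kernel)
    return img


def neighbour_correlation(img):
    """Pearson correlation between each pixel and its right-hand neighbour."""
    left = [row[j] for row in img for j in range(len(row) - 1)]
    right = [row[j + 1] for row in img for j in range(len(row) - 1)]
    n = len(left)
    mean_l = sum(left) / n
    mean_r = sum(right) / n
    cov = sum((a - mean_l) * (b - mean_r) for a, b in zip(left, right))
    var_l = sum((a - mean_l) ** 2 for a in left)
    var_r = sum((b - mean_r) ** 2 for b in right)
    return cov / math.sqrt(var_l * var_r)


rng = random.Random(0)
trials = 20

# Average over several random initializations of the toy decoder.
decoder_corr = sum(
    neighbour_correlation(random_decoder_output(rng)) for _ in range(trials)
) / trials

# Baseline: i.i.d. Gaussian noise at the same 32x32 resolution.
noise_corr = sum(
    neighbour_correlation([[rng.gauss(0, 1) for _ in range(32)]
                           for _ in range(32)])
    for _ in range(trials)
) / trials

print(f"toy decoder neighbour correlation: {decoder_corr:.2f}")
print(f"pure noise neighbour correlation:  {noise_corr:.2f}")
```

In a stack like this, neighbouring output pixels are computed from overlapping (and, after upsampling, partly duplicated) inputs, so they are not independent even with random weights. Whether that accounts for what my real decoder shows is a separate question.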

Now this leaves me with a burning desire to understand: why?

Of course, we don't really have any guarantees on the distribution of the output of a random network (or at least I think), and the decoder I am using is also a pretty complicated one, so God knows what those millions of random parameters are doing, but at the same time, I feel like I cannot be the first person to have wondered what kind of patterns are expected, if any.

The only explanation I can think of, and I don't really have much to back it up, is that since the network has so many inputs and parameters, it is easy to hit some combination of layer input and layer weight that triggers some kind of cascade effect, which in turn produces these regions.

I know this may be too much of an open-ended question, but at the same time, I feel like it is too interesting not to ask, especially since I am not looking for answers specifically related to my model architecture but rather for a more general understanding of this phenomenon. Is this behaviour expected? Could this be indicative of a good/bad model architecture or weight initialization? Is this just my primate brain looking for patterns where there really aren't any?

Thank you so much in advance for any responses!

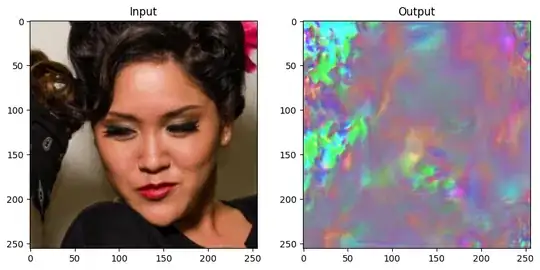

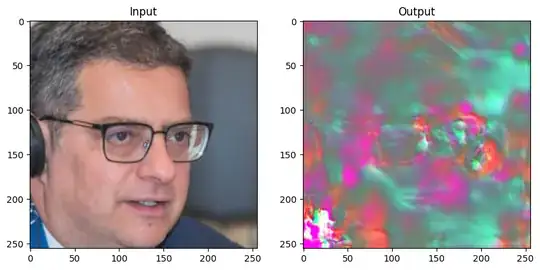

EDIT: I also tried a non-random input (from FFHQ-256, for those wondering), and in the output you can actually see that, in spite of the network being untrained, one can make out some faint outlines of the face!