In the paper,

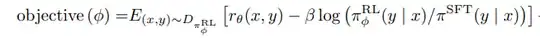

They write:

Now, is $y$ the full response or only the next-token response? On the one hand, the reward model expects a full response; on the other hand, they write "per-token KL penalty". So do we sample the next token, or the full response?
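To make the two readings concrete, here is a minimal sketch. It assumes a convention used in several open-source RLHF implementations: the full response $y$ is sampled first, each token then gets a KL penalty, and the reward-model score is added only at the last token. The paper's text does not confirm this convention. The logits, vocabulary size, `beta`, and `rm_score` are hypothetical. The sketch relies on the identity $\log \pi(y \mid x) = \sum_t \log \pi(y_t \mid x, y_{<t})$, so the per-token penalties sum to the sequence-level log-ratio.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def per_token_rewards(policy_logits, ref_logits, response, rm_score, beta):
    """Per-token rewards for ONE sampled full response (assumed convention).

    Each token t gets -beta * (log pi(y_t|x,y_<t) - log pi_ref(y_t|x,y_<t));
    the reward-model score for the whole response is added at the last token.
    """
    rewards = []
    for t, tok in enumerate(response):
        logp = log_softmax(policy_logits[t])[tok]
        logq = log_softmax(ref_logits[t])[tok]
        rewards.append(-beta * (logp - logq))
    rewards[-1] += rm_score
    return rewards


def sequence_logprob(logits_per_step, response):
    """log pi(y|x) as the sum of per-token log-probabilities."""
    return sum(log_softmax(step)[tok] for step, tok in zip(logits_per_step, response))


# Hypothetical numbers: a 3-token response over a 4-token vocabulary.
# Row t holds the logits for position t, conditioned on x and y_<t.
policy_logits = [[2.0, 0.5, -1.0, 0.0], [0.1, 1.5, 0.3, -0.2], [0.0, 0.0, 2.5, 1.0]]
ref_logits = [[1.5, 0.7, -0.5, 0.2], [0.3, 1.0, 0.4, 0.0], [0.2, -0.1, 2.0, 1.2]]
response = [0, 1, 2]  # token ids of the sampled full response y
rm_score = 0.8        # hypothetical reward-model score r(x, y)
beta = 0.1            # hypothetical KL coefficient

rewards = per_token_rewards(policy_logits, ref_logits, response, rm_score, beta)
seq_term = rm_score - beta * (
    sequence_logprob(policy_logits, response) - sequence_logprob(ref_logits, response)
)
# Summing the per-token rewards recovers r(x, y) - beta * log(pi(y|x) / pi_ref(y|x)).
print(abs(sum(rewards) - seq_term) < 1e-12)
```

Under this reading, "per-token" describes how the KL term is distributed over the tokens of an already-sampled full response. It does not mean that each token is scored on its own by the reward model.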

Secondly, is the equation correct? It looks as if they only optimize for a lower KL divergence, so where does the main optimization take place? (The reward model is fixed in this equation.)
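One way to see where optimization can happen with a frozen reward model is a toy sketch. It assumes the equation is an expectation over responses sampled from the policy being trained, e.g. $\mathbb{E}_{y \sim \pi_\phi(\cdot \mid x)}\big[r_\theta(x, y) - \beta \log \frac{\pi_\phi(y \mid x)}{\pi^{\mathrm{SFT}}(y \mid x)}\big]$. Under that assumption, $r_\theta$ has no trainable parameters, but the sampling distribution depends on $\phi$, so the reward term still has a nonzero gradient. The three candidate responses and their scores are hypothetical, and the KL term is left out to isolate the reward term.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]


# Toy policy: chooses one of three complete responses y.
# These reward-model scores are frozen and never updated.
REWARDS = [1.0, 0.0, -1.0]


def expected_reward(logits):
    """E_{y ~ pi_phi}[r(y)] for a softmax policy parameterized by `logits`."""
    return sum(p * r for p, r in zip(softmax(logits), REWARDS))


def grad_expected_reward(logits):
    """Exact gradient of E[r] with respect to the logits.

    For a softmax policy, dE[r]/dlogit_k = p_k * (r_k - E[r]), which is
    nonzero even though r itself has no trainable parameters.
    """
    p = softmax(logits)
    mean_r = sum(pk * rk for pk, rk in zip(p, REWARDS))
    return [pk * (rk - mean_r) for pk, rk in zip(p, REWARDS)]


logits = [0.0, 0.0, 0.0]  # start from a uniform policy
lr = 0.5
for _ in range(100):
    g = grad_expected_reward(logits)
    logits = [v + lr * gk for v, gk in zip(logits, g)]  # gradient ascent

print(expected_reward([0.0, 0.0, 0.0]), expected_reward(logits))
```

In real RLHF training this gradient is estimated from sampled responses (REINFORCE- or PPO-style) instead of being computed exactly. The mechanism is the same: probability mass moves toward responses the fixed reward model scores highly, while the KL term pulls the policy back toward the reference model.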