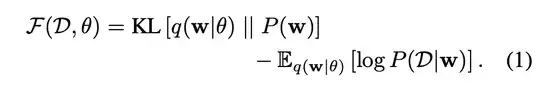

I've been trying to train a Bayesian neural network, and I noticed that the KL loss (which enforces the prior) isn't changing over time. It occurred to me that in standard Bayesian inference the prior acts like an artificial dataset (e.g. a Beta(N, N) prior adds 2N pseudo-observations), whereas in Bayesian neural networks trained with something like Bayes-by-Backprop, the prior is just a regularizing term in the loss function (the KL loss term).
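To make the "artificial dataset" picture concrete, here is a minimal sketch of the conjugate Beta-Bernoulli case. The function names, the choice N = 5, and the 80% success rate are made up for illustration; the only thing it relies on is the standard conjugate update, where the posterior mean is a weighted average of the prior mean and the observed frequency.

```python
def beta_posterior_mean(prior_a, prior_b, successes, failures):
    """Posterior mean of a Bernoulli rate under a Beta(prior_a, prior_b) prior."""
    return (prior_a + successes) / (prior_a + prior_b + successes + failures)


def prior_weight(prior_a, prior_b, n_obs):
    """Fraction of the posterior mean that comes from the prior mean.

    posterior_mean = w * prior_mean + (1 - w) * observed_frequency,
    with w = (a + b) / (a + b + n_obs).
    """
    return (prior_a + prior_b) / (prior_a + prior_b + n_obs)


if __name__ == "__main__":
    N = 5  # illustrative: Beta(N, N) behaves like 2N pseudo-observations
    for n_obs in (0, 10, 100, 10_000):
        successes = int(0.8 * n_obs)  # illustrative data: 80% successes
        failures = n_obs - successes
        mean = beta_posterior_mean(N, N, successes, failures)
        weight = prior_weight(N, N, n_obs)
        print(f"n={n_obs:>6}  posterior mean={mean:.4f}  prior weight={weight:.4f}")
```

The prior weight shrinks like 2N / (2N + n), which is the behavior I expected to see mirrored somehow in the BNN loss.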

As I understand it, that means the KL loss term might never increase (depending on its weight), and thus uncertainty might never decrease. That contradicts standard Bayesian inference, where the prior is guaranteed to lose its importance as you get more data. Furthermore, if you play with the loss term weights, there is the question of which weights are valid and how to prevent collapse to a fully deterministic network.
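To be concrete about what I mean by the weight, here is the loss as I understand it, assuming the usual variational objective used in Bayes-by-Backprop. The symbol $\beta$ for the KL weight is my own notation, and $q(w \mid \theta)$, $p(w)$ are the variational posterior and the prior over the network weights $w$:

$$
\mathcal{L}_\beta(\theta) \;=\; \beta \,\mathrm{KL}\!\left[\, q(w \mid \theta) \,\|\, p(w) \,\right] \;-\; \sum_{i=1}^{N} \mathbb{E}_{q(w \mid \theta)}\!\left[ \log p(y_i \mid x_i, w) \right]
$$

With $\beta = 1$ this should be the negative ELBO. The likelihood term is a sum over all $N$ data points, while the KL term has no explicit dependence on $N$, so my question about "valid" weights is really a question about which values of $\beta$ (and which per-batch scaling of the two terms) still correspond to proper Bayesian inference.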

Please tell me I'm wrong, and explain how (hopefully) this is fully comparable to regular Bayesian inference, as well as why the KL loss might not change during training.