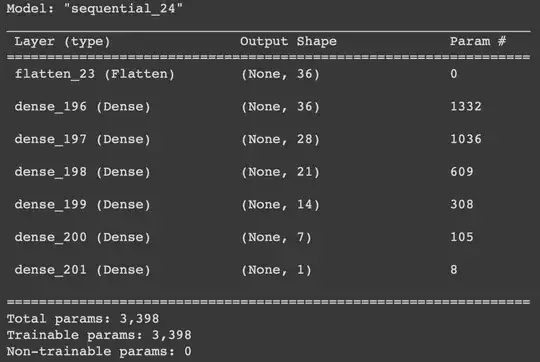

I've been training several types of MLPs with different optimisers and tuned them with Keras's Hyperband tuner. All of them follow this cone architecture:

All the networks were trained on the same dataset of 342k input examples, with a separate set of 62k examples for validation (approx. 20% ratio). No example is shared between them. The data should come from the same distribution; this is the KS test result:

KstestResult(statistic=0.06393057115770212, pvalue=1.8775454622663613e-165, statistic_location=-0.045045708259117134, statistic_sign=1)

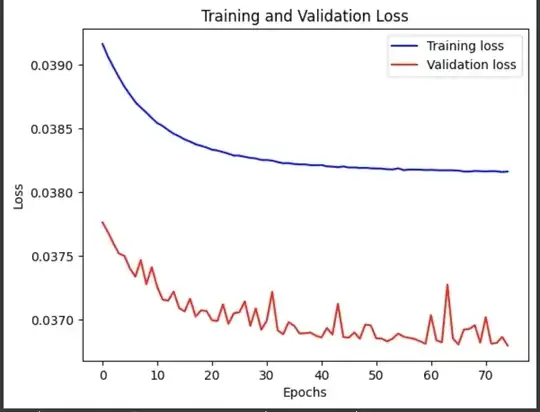
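For context on reading the KS result above, here is a minimal sketch of a two-sample KS check written with only the standard library. It is not the code used for the post: the function names `ks_2samp_statistic` and `ks_asymptotic_pvalue` are hypothetical, the synthetic Gaussian samples are made up for illustration, and the last line assumes the test was run on the full 342k and 62k sets. The point it illustrates is that the null hypothesis of a KS test is "same distribution", so a tiny p-value counts as evidence against it, and that with sample sizes this large even a small statistic such as 0.064 yields a vanishingly small p-value.

```python
import bisect
import math
import random


def ks_2samp_statistic(a, b):
    """Largest absolute gap between the empirical CDFs of samples a and b."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    for x in a + b:  # the largest gap is always reached at a sample point
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def ks_asymptotic_pvalue(d, n, m):
    """Large-sample p-value approximation from the Kolmogorov distribution."""
    lam = d * math.sqrt(n * m / (n + m))
    if lam < 0.2:  # the series converges slowly here and the p-value is ~1
        return 1.0
    series = sum((-1) ** (k - 1) * math.exp(-2.0 * (k * lam) ** 2)
                 for k in range(1, 101))
    return min(1.0, max(0.0, 2.0 * series))


# Synthetic data for illustration only (not the dataset from the post).
random.seed(0)
train = [random.gauss(0.0, 1.0) for _ in range(20000)]
val_same = [random.gauss(0.0, 1.0) for _ in range(4000)]
val_shifted = [random.gauss(0.15, 1.0) for _ in range(4000)]

for name, val in [("same", val_same), ("shifted", val_shifted)]:
    d = ks_2samp_statistic(train, val)
    print(name, d, ks_asymptotic_pvalue(d, len(train), len(val)))

# Statistic from the post, with the set sizes from the post
# (assumption: the test compared the full 342k and 62k sets).
print(ks_asymptotic_pvalue(0.06393057115770212, 342_000, 62_000))
```

Since the p-value mostly reflects sample size here, the statistic itself (the largest gap between the two empirical CDFs) is probably the more informative number to look at.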

Whatever I try, the validation loss starts below the training loss, as shown in the graphs below (the val_loss in the graphs is shifted by 0.5 epoch to account for how train_loss is calculated):

1st: Adadelta and mae

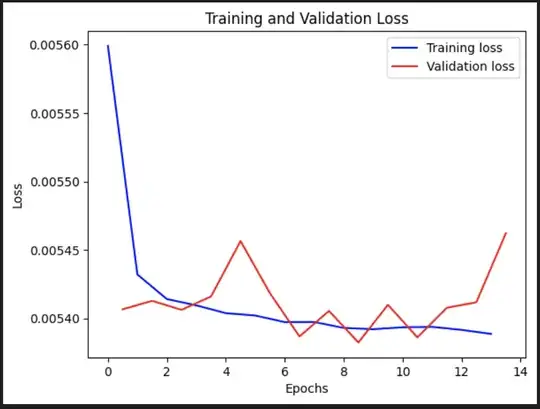

2nd: Adam and mse

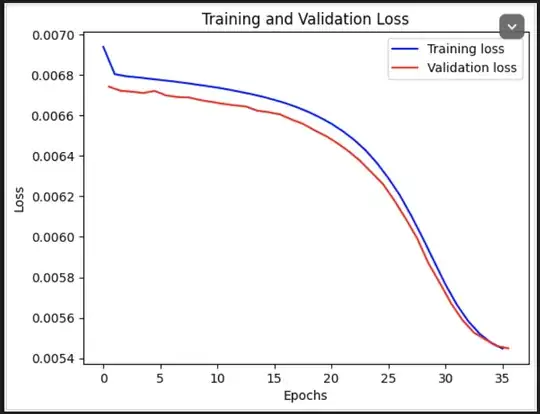

3rd: Adadelta and mse

4th: Adam and mse + L1 Dropout
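The reason for the 0.5 epoch shift in the graphs can be sketched with a toy example. Keras reports the training loss as the mean over all batches of an epoch, while the validation loss is computed once at the end of the epoch, so the reported train_loss lags behind. In the sketch below, `true_loss`, its exponential shape, and `epoch_summary` are hypothetical and chosen only for illustration; the same curve is used for both training and validation data, so any gap comes purely from when the loss is measured.

```python
import math


def true_loss(step, decay=0.01):
    """Hypothetical loss curve, identical for train and validation data."""
    return math.exp(-decay * step)


def epoch_summary(epoch, steps_per_epoch=100):
    """Return (reported train loss, mid-epoch loss, end-of-epoch loss)."""
    start = epoch * steps_per_epoch
    batch_losses = [true_loss(start + s) for s in range(steps_per_epoch)]
    # Mean over the batches of the epoch, as shown in the training log.
    reported_train = sum(batch_losses) / steps_per_epoch
    mid_epoch = true_loss(start + steps_per_epoch / 2)
    # Validation is measured with the weights reached at the end of the epoch.
    end_of_epoch = true_loss(start + steps_per_epoch)
    return reported_train, mid_epoch, end_of_epoch


for epoch in range(3):
    reported, mid, end = epoch_summary(epoch)
    print(f"epoch {epoch + 1}: train(reported)={reported:.3f} "
          f"mid-epoch={mid:.3f} val(end)={end:.3f}")
```

In this toy setup the reported training loss sits above the end-of-epoch value in every epoch and is closer to the mid-epoch value, which is why a half-epoch shift is a reasonable, though only approximate, correction.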

All the models have very similar RMSE and accuracy and are almost usable, with the 3rd one performing the best of the bunch. I'm curious why the val_loss always starts below the training one, and whether this is a problem, since it would technically mean that I'm overfitting the model from the start. Also, what could I do to investigate the issue further? Any general advice is welcome. Thanks in advance for the replies!