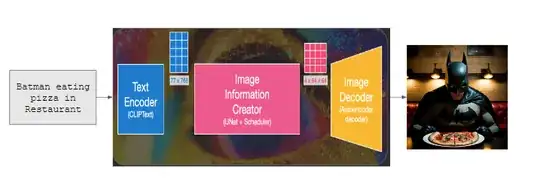

I'm interested in how text-to-image models like Midjourney and DALL-E work: you enter a text prompt and get images as output. I started reading some papers on the topic and came across "Denoising Diffusion Probabilistic Models" - https://arxiv.org/pdf/2006.11239.pdf.

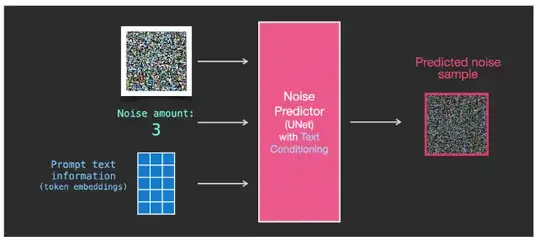

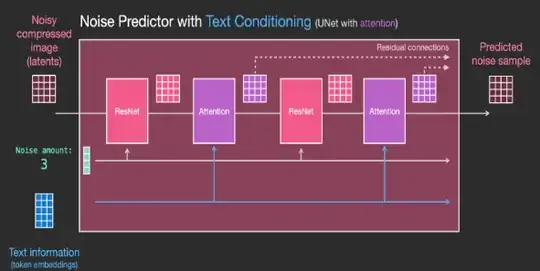

It describes the diffusion process: a sequence of random variables $x_0, x_1, \dots, x_T$, where each $x_t$ is obtained from $x_{t-1}$ by a Markov chain transition that gradually adds Gaussian noise, and so on.
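A minimal NumPy sketch of that forward (noising) chain, assuming the paper's linear variance schedule with $T = 1000$ and $\beta_t$ running from $10^{-4}$ to $0.02$. Each step samples $x_t \sim \mathcal{N}(\sqrt{1-\beta_t}\,x_{t-1}, \beta_t I)$; because every step is Gaussian, $x_t$ can also be sampled directly from $x_0$ using $\bar\alpha_t = \prod_{s \le t}(1-\beta_s)$. The function names are hypothetical and indices are 0-based.

```python
import numpy as np


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    # Linearly spaced noise variances beta_1..beta_T (stored 0-based).
    return np.linspace(beta_start, beta_end, T)


def forward_step(x_prev, beta_t, rng):
    # One Markov transition: q(x_t | x_{t-1}) = N(sqrt(1 - beta_t) * x_{t-1}, beta_t * I)
    noise = rng.standard_normal(x_prev.shape)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * noise


def q_sample(x0, t, alpha_bar, rng):
    # Closed form: q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I)
    # Returns the noisy sample and the Gaussian noise that was used.
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    betas = linear_beta_schedule()
    alpha_bar = np.cumprod(1.0 - betas)

    # Toy "image": an 8x8 array with pixel values in [-1, 1].
    x = rng.uniform(-1.0, 1.0, size=(8, 8))
    for beta_t in betas:
        x = forward_step(x, beta_t, rng)

    print("alpha_bar_T =", alpha_bar[-1])
    print("std of x_T  =", x.std())
```

Since $\bar\alpha_T$ is close to zero under this schedule, $x_T$ is approximately standard Gaussian regardless of the starting image, which is what allows the reverse (generative) chain to start from pure noise.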

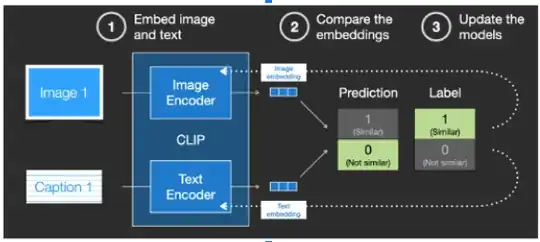

But neither in this paper nor in any of the others I read did I find an explanation of how the training data (which I assume consists of pairs of images and accompanying text) is actually used. How does it enter the likelihood/objective function?
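For reference, a minimal NumPy sketch of the simplified objective from the DDPM paper, $L_{\text{simple}}(\theta) = \mathbb{E}_{t, x_0, \epsilon}\left[\lVert \epsilon - \epsilon_\theta(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t)\rVert^2\right]$. It makes the question concrete: the only place a training example enters is $x_0$, an image, since the paper's experiments are unconditional and no caption appears anywhere. `eps_model` is a hypothetical stand-in for the noise-prediction network $\epsilon_\theta$ (any callable taking `(x_t, t)`), and timesteps are 0-indexed.

```python
import numpy as np


def ddpm_simple_loss(eps_model, x0, alpha_bar, rng):
    # x0: a batch of training images with shape (B, ...).
    B = x0.shape[0]
    T = len(alpha_bar)
    # Draw one timestep per example, uniformly from {0, ..., T-1}.
    t = rng.integers(0, T, size=B)
    # Draw the Gaussian noise the network must predict.
    noise = rng.standard_normal(x0.shape)
    # Reshape alpha_bar_t so it broadcasts over each example's pixels.
    ab = alpha_bar[t].reshape((B,) + (1,) * (x0.ndim - 1))
    # Noisy input via the closed-form forward process q(x_t | x_0).
    x_t = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * noise
    # Mean squared error between true and predicted noise.
    pred = eps_model(x_t, t)
    return np.mean((noise - pred) ** 2)
```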