Some LLMs are trained on both CommonCrawl and Wikipedia/StackExchange. Why? Does CommonCrawl already contain Wikipedia/StackExchange?

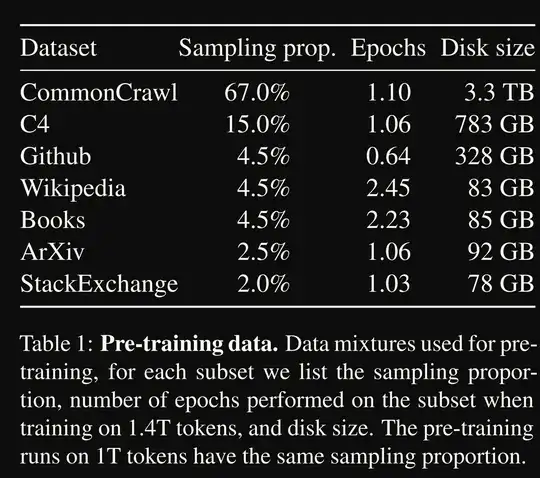

E.g., from the LLaMa 1 paper:

and from https://huggingface.co/datasets/togethercomputer/RedPajama-Data-1T:

| Dataset | Token Count |
|---|---|
| Commoncrawl | 878 Billion |
| C4 | 175 Billion |
| GitHub | 59 Billion |
| Books | 26 Billion |
| ArXiv | 28 Billion |
| Wikipedia | 24 Billion |
| StackExchange | 20 Billion |
| Total | 1.2 Trillion |
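To put the table in proportion, here is a small sketch that computes each source's share of the raw token count. It only uses the numbers listed above (in billions); the dictionary name and helper `shares` are illustrative. The listed rows sum to 1,210 billion, consistent with the rounded "1.2 Trillion" total, and Wikipedia plus StackExchange come to roughly 3.6% of it. Note these are raw subset sizes, not necessarily the proportions a model is actually trained on.

```python
# Token counts in billions, copied from the RedPajama-Data-1T table above.
TOKENS_B = {
    "Commoncrawl": 878,
    "C4": 175,
    "GitHub": 59,
    "Books": 26,
    "ArXiv": 28,
    "Wikipedia": 24,
    "StackExchange": 20,
}


def shares(tokens: dict[str, int]) -> dict[str, float]:
    """Return each source's fraction of the summed token count."""
    total = sum(tokens.values())
    return {name: count / total for name, count in tokens.items()}


if __name__ == "__main__":
    print(f"Sum of listed sources: {sum(TOKENS_B.values())} billion tokens")
    # Print sources from largest to smallest share.
    for name, frac in sorted(shares(TOKENS_B).items(), key=lambda kv: -kv[1]):
        print(f"{name:>13}: {frac:6.1%}")
```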

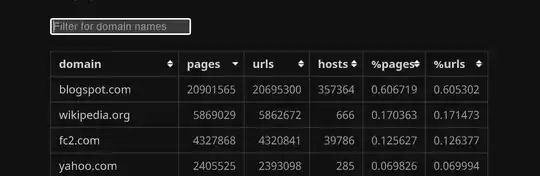

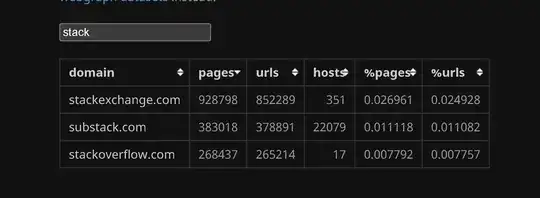

Looking at https://commoncrawl.github.io/cc-crawl-statistics/plots/domains, it seems that CommonCrawl includes the Wikipedia and StackExchange domains. But maybe its coverage of them is incomplete?
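One way to spot-check which Wikipedia or StackExchange pages a given crawl captured is to query the Common Crawl URL index (a CDX-style server at index.commoncrawl.org). The sketch below assumes the `url`, `output=json` and `limit` query parameters and the one-JSON-object-per-line response format; the crawl ID `CC-MAIN-2023-06` is an arbitrary example, and the helper names are hypothetical. A handful of sampled captures does not by itself measure completeness.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Example crawl ID (an assumption); available IDs are listed at
# https://index.commoncrawl.org/collinfo.json
CRAWL_ID = "CC-MAIN-2023-06"


def index_query_url(url_pattern: str, crawl_id: str = CRAWL_ID, limit: int = 10) -> str:
    """Build an index query URL for captures matching url_pattern."""
    params = urlencode({"url": url_pattern, "output": "json", "limit": limit})
    return f"https://index.commoncrawl.org/{crawl_id}-index?{params}"


def parse_records(body: str) -> list[dict]:
    """The index answers with one JSON object per line; parse each into a dict."""
    return [json.loads(line) for line in body.splitlines() if line.strip()]


def sample_captures(url_pattern: str, **kwargs) -> list[dict]:
    """Fetch a few capture records for url_pattern (requires network access)."""
    with urlopen(index_query_url(url_pattern, **kwargs), timeout=60) as resp:
        return parse_records(resp.read().decode("utf-8"))
```

For example, `sample_captures("en.wikipedia.org/*", limit=5)` or `sample_captures("stackoverflow.com/*", limit=5)` should return up to five capture records, each with fields such as `url` and `status`.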