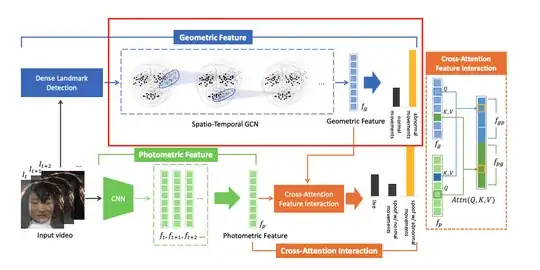

I need help implementing the model in this paper:

They adopt the spatio-temporal graph convolution operator from ST-GCN [section 3.1.2]. I've found that there are popular libraries available for GCNs, pytorch_geometric and torch_geometric_temporal, but I'm new to graph NNs.

This is the overall network architecture in the paper, and I'm trying to implement the geometric feature part. For dense landmark detection I'll be using MediaPipe's FaceMesh landmarks (because the dense landmark detection model used in the paper is not publicly available).
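
To make the input side concrete, here is a minimal sketch of how the landmarks could be packed into arrays. It assumes each frame yields V landmarks with (x, y) coordinates and that the graph edges come from a list of undirected landmark pairs. The helper names `build_edge_index` and `landmarks_to_features` and the 4-node toy graph are made up for illustration. With MediaPipe the pairs might be taken from a connection set such as `FACEMESH_TESSELATION`, but that is an assumption and not something the paper specifies.

```python
import numpy as np


def build_edge_index(connections, num_nodes):
    """Turn undirected (i, j) pairs into a [2, E] integer array.

    Each undirected pair is stored in both directions and duplicates
    are dropped. Self-loops are skipped here (an assumption: layers
    such as GCNConv add them on their own by default).
    """
    pairs = set()
    for i, j in connections:
        if not (0 <= i < num_nodes and 0 <= j < num_nodes):
            raise ValueError(f"edge ({i}, {j}) is out of range")
        if i == j:
            continue
        pairs.add((i, j))
        pairs.add((j, i))
    return np.array(sorted(pairs), dtype=np.int64).T.reshape(2, -1)


def landmarks_to_features(frames):
    """Stack per-frame (x, y) landmarks into a float32 array [T, V, 2]."""
    x = np.asarray(frames, dtype=np.float32)
    if x.ndim != 3 or x.shape[-1] != 2:
        raise ValueError("expected frames shaped [T, V, 2]")
    return x


# Toy stand-in for the face graph: 4 landmarks connected in a chain.
toy_connections = [(0, 1), (1, 2), (2, 3)]
edge_index = build_edge_index(toy_connections, num_nodes=4)

# Two frames of made-up normalized (x, y) coordinates.
toy_frames = [
    [(0.10, 0.20), (0.30, 0.20), (0.50, 0.25), (0.70, 0.30)],
    [(0.11, 0.21), (0.31, 0.22), (0.52, 0.26), (0.71, 0.33)],
]
x = landmarks_to_features(toy_frames)

print(edge_index.shape, x.shape)
```

The `[2, E]` layout matches the `edge_index` convention that `GCNConv` expects, and `torch.from_numpy(edge_index)` would convert it to a tensor. Keeping the time axis first means each frame `x[t]` is one `[V, 2]` node-feature matrix.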

This is what I've written so far:

    import torch
    from torch.nn import Linear
    from torch_geometric.nn import GCNConv
    from torch_geometric.typing import Adj, OptTensor


    class GAIN(torch.nn.Module):
        def __init__(
            self,
            in_channels: int,
            hidden_channels: int,
            out_channels: int,
        ):
            super().__init__()
            self._graph_conv_1 = GCNConv(
                in_channels=in_channels,
                out_channels=hidden_channels,
            )
            self._graph_conv_2 = GCNConv(
                in_channels=hidden_channels,
                out_channels=hidden_channels,
            )
            self._graph_conv_3 = GCNConv(
                in_channels=hidden_channels,
                out_channels=hidden_channels,
            )
            self._graph_conv_4 = GCNConv(
                in_channels=hidden_channels,
                out_channels=hidden_channels,
            )
            self._graph_conv_5 = GCNConv(
                in_channels=hidden_channels,
                out_channels=hidden_channels,
            )
            self._graph_conv_6 = GCNConv(
                in_channels=hidden_channels,
                out_channels=hidden_channels,
            )
            self.linear = Linear(hidden_channels, out_channels)

        def forward(
            self,
            x: torch.FloatTensor,
            edge_index: Adj,
            edge_weight: OptTensor = None,
        ):
            x = self._graph_conv_1(x, edge_index, edge_weight)
            x = self._graph_conv_2(x, edge_index, edge_weight)
            x = self._graph_conv_3(x, edge_index, edge_weight)
            x = self._graph_conv_4(x, edge_index, edge_weight)
            x = self._graph_conv_5(x, edge_index, edge_weight)
            x = self._graph_conv_6(x, edge_index, edge_weight)
            x = self.linear(x)
            return x


    def train_gcn():
        # I'm not sure what the hidden and out channels should be, so I'm just setting 64 and 256.
        # I guess in_channels should be 2 because each node feature is an (x, y) coordinate.
        model = GAIN(in_channels=2, hidden_channels=64, out_channels=256)
        criterion = torch.nn.BCELoss()
        optimizer = torch.optim.SGD(model.parameters(), momentum=0.9, weight_decay=0.0001, lr=0.1)
        data = None  # I don't know

        # Training loop
        for epoch in range(65):
            # Clear gradients
            optimizer.zero_grad()

            # Forward pass
            y_hat = model(data.x, data.edge_index)

            # Calculate loss function
            loss = criterion(y_hat, data.y)

            # Calculate accuracy
            acc = accuracy(y_hat.argmax(dim=1), data.y)

            # Compute gradients
            loss.backward()

            # Tune parameters
            optimizer.step()

            # Print metrics every 10 epochs
            if epoch % 10 == 0:
                print(f'Epoch {epoch:>3} | Loss: {loss:.2f} | Acc: {acc*100:.2f}%')

What I'm struggling with:

- I don't know how to translate formula (3) into code.

- How to construct the input X and the edge matrices.

- Overall, am I implementing it correctly?
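
For reference, here is a minimal NumPy sketch of what a spatial graph convolution could look like. Formula (3) is not reproduced here, so this assumes it follows the spatial rule from the ST-GCN paper, f_out = Λ^{-1/2} (A + I) Λ^{-1/2} f_in W, applied to every frame independently. The function names, the 3-node toy graph, and the random weights are placeholders, and the temporal convolution along the frame axis is left out.

```python
import numpy as np


def normalized_adjacency(edge_index, num_nodes):
    """Return D^{-1/2} (A + I) D^{-1/2} as a dense [V, V] matrix.

    Assumes edge_index is [2, E], holds both directions of every
    undirected edge, and contains no self-loops.
    """
    a = np.zeros((num_nodes, num_nodes), dtype=np.float64)
    a[edge_index[0], edge_index[1]] = 1.0
    a_tilde = a + np.eye(num_nodes)      # add self-loops
    deg = a_tilde.sum(axis=1)            # node degrees (always >= 1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]


def spatial_graph_conv(x, a_hat, weight):
    """Apply a_hat @ x[t] @ weight to every frame t.

    x:      [T, V, C_in]  node features per frame
    a_hat:  [V, V]        normalized adjacency
    weight: [C_in, C_out] learnable projection (random here)
    """
    return np.einsum("vu,tuc,co->tvo", a_hat, x, weight)


# Toy path graph 0 - 1 - 2, both directions listed.
edge_index = np.array([[0, 1, 1, 2],
                       [1, 0, 2, 1]], dtype=np.int64)
a_hat = normalized_adjacency(edge_index, num_nodes=3)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 2))    # T=2 frames, V=3 nodes, (x, y) features
weight = rng.normal(size=(2, 4))  # C_in=2 -> C_out=4
out = spatial_graph_conv(x, a_hat, weight)

print(a_hat.round(3))
print(out.shape)
```

With its default settings, `GCNConv` adds self-loops and applies this same symmetric normalization internally, so a single `GCNConv` call on one frame should correspond to the spatial step under the assumption above. The temporal convolution over neighbouring frames would still need to be added on top.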

Please help me implement it.