Good question! There's actually some ambiguity here: you can compute the lower-dimensional projection either across a dataset of images or over the pixels within a single image.

A dataset of images

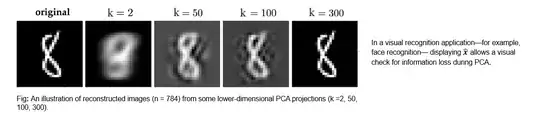

You're probably thinking of the case where you find principal components with respect to a dataset of images. A common example is "eigenfaces".

Each sample corresponds to one image. $\mathbf{x}^i$ holds the grayscale pixel values of the $i$-th image, flattened into a vector. $\bar{\mathbf{x}}$ is the mean image, i.e. the per-pixel average taken over the whole dataset. Here, $S$ is the covariance matrix between pixels, computed across the dataset of images.
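Here is a minimal NumPy sketch of the dataset case. It assumes the images are stacked in an array of shape `(N, H, W)` and that $S$ is the usual sample covariance $S = \frac{1}{N}\sum_{i=1}^{N}(\mathbf{x}^i-\bar{\mathbf{x}})(\mathbf{x}^i-\bar{\mathbf{x}})^\top$. Some texts divide by $N-1$ instead, which rescales the eigenvalues but leaves the components unchanged. The function name `pca_dataset` is hypothetical and is only meant for illustration.

```python
import numpy as np

def pca_dataset(images, k):
    """Hypothetical helper: PCA across a dataset of grayscale images.

    Each image is one sample and each pixel is one feature.
    images: array of shape (N, H, W).
    Returns the mean image, the top-k components reshaped to (k, H, W)
    (the "eigenfaces" for a face dataset), and the (N, k) projections.
    """
    n, h, w = images.shape
    X = images.reshape(n, h * w).astype(float)  # row i is x^i, flattened
    x_bar = X.mean(axis=0)                       # mean image: per-pixel average over the dataset
    Xc = X - x_bar                               # center every sample
    S = Xc.T @ Xc / n                            # (H*W, H*W) pixel covariance, 1/N convention (assumed)
    eigvals, eigvecs = np.linalg.eigh(S)         # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:k]        # indices of the k largest eigenvalues
    U = eigvecs[:, order]                        # top-k directions, shape (H*W, k)
    Z = Xc @ U                                   # low-dimensional coordinates, shape (N, k)
    return x_bar.reshape(h, w), U.T.reshape(k, h, w), Z
```

For example, `pca_dataset(np.random.default_rng(0).random((50, 8, 8)), 5)` returns a mean image of shape `(8, 8)`, five components of shape `(8, 8)` and projections of shape `(50, 5)`. Building the full `(H*W, H*W)` matrix gets expensive for large images. In practice you would usually take the SVD of the centered data `Xc` instead, which yields the same components.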

A single image

PCA greedily finds directions in feature space along which the samples vary the most, with each new direction orthogonal to the previous ones. Variance is only defined across multiple samples. So with a single image, you first need to split that image into equally shaped chunks. Each chunk is then a sample, and each pixel within a chunk is a feature.

For example, with a 128x128 image you might treat each row as a sample. You would then have 128 samples, each with 128 features. $S$ is then the 128x128 covariance matrix between pixel columns (the features), estimated across the rows of the image (the samples). You can find an implementation of what I described in this tutorial.
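Here is a rough NumPy sketch of the single-image case, under a few assumptions. By default each row is a sample. Passing a hypothetical `patch=(ph, pw)` argument instead cuts the image into non-overlapping blocks that must tile it exactly, and each flattened block becomes one sample. The function name `pca_single_image` and the 1/N covariance convention are illustrative choices, not taken from the tutorial mentioned above.

```python
import numpy as np

def pca_single_image(img, k, patch=None):
    """Hypothetical helper: PCA within one grayscale image.

    patch=None: each row is a sample and each column a feature
    (a 128x128 image gives 128 samples with 128 features each).
    patch=(ph, pw): non-overlapping ph x pw blocks are the samples,
    each flattened into ph*pw features.
    Returns the top-k directions U, the per-sample coordinates Z, and
    the rank-k reconstruction of the image.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    if patch is None:
        X = img                                   # rows are samples
    else:
        ph, pw = patch
        assert h % ph == 0 and w % pw == 0, "patches must tile the image exactly"
        X = (img.reshape(h // ph, ph, w // pw, pw)
                .swapaxes(1, 2)
                .reshape(-1, ph * pw))            # one flattened block per row
    x_bar = X.mean(axis=0)                        # mean sample
    Xc = X - x_bar
    S = Xc.T @ Xc / X.shape[0]                    # covariance between features, 1/N convention (assumed)
    eigvals, eigvecs = np.linalg.eigh(S)          # ascending eigenvalue order
    U = eigvecs[:, ::-1][:, :k]                   # top-k directions
    Z = Xc @ U                                    # k coordinates per sample
    X_approx = Z @ U.T + x_bar                    # rank-k reconstruction of the samples
    if patch is not None:
        # undo the block flattening to get back an (h, w) image
        X_approx = (X_approx.reshape(h // ph, w // pw, ph, pw)
                    .swapaxes(1, 2)
                    .reshape(h, w))
    return U, Z, X_approx
```

With rows as samples and `k` equal to the number of columns, the reconstruction reproduces the original image exactly. Smaller `k` gives a compressed approximation. The patch option is one way to get samples that capture local 2-D structure rather than whole rows.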