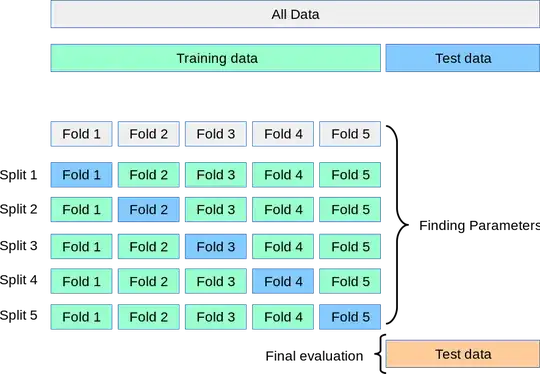

We know that in machine learning the dataset is usually divided into three parts: training data, validation data, and test data.
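As a concrete picture of that three-way split, here is a minimal sketch in plain Python that shuffles sample indices and cuts them into disjoint train, validation, and test index lists. The function name `train_val_test_split` and the 70/15/15 proportions are illustrative assumptions, not a fixed rule.

```python
import random

def train_val_test_split(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle sample indices and cut them into disjoint train/val/test lists.

    Hypothetical helper; the 70/15/15 default is only an illustrative choice.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_test = round(n_samples * test_frac)
    n_val = round(n_samples * val_frac)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]      # everything left over is for training
    return train, val, test

train, val, test = train_val_test_split(100)
print(len(train), len(val), len(test))  # 70 15 15
```

The model is fitted on `train`, tuned (hyperparameters, early stopping) against `val`, and evaluated once on `test`.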

On the other hand, K-fold cross-validation is defined as follows:

The dataset is divided into K different parts (folds). In each of the K iterations, one fold is used for testing and the remaining K − 1 folds are used for training. The K test results are then averaged to get the final accuracy.
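The procedure described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: `k_fold_indices`, `cross_validate`, `majority_class_score`, and the toy `labels` are all hypothetical names, and the "model" is just a majority-class predictor. In practice a library utility such as scikit-learn's `KFold` would typically be used instead.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Split shuffled sample indices into k folds whose sizes differ by at most one."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes stay balanced.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(indices[start:start + size])
        start += size
    return folds

def cross_validate(n_samples, k, train_and_score, seed=0):
    """Run k iterations: fold i is held out for testing, the other k-1 folds train."""
    folds = k_fold_indices(n_samples, k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        scores.append(train_and_score(train_idx, test_idx))
    return sum(scores) / k, scores  # averaged accuracy and per-fold accuracies

# Toy data (assumption): 20 samples, 75% of them labelled 1.
labels = [1 if i % 4 else 0 for i in range(20)]

def majority_class_score(train_idx, test_idx):
    # Hypothetical "model": always predict the most common label in the training folds.
    ones = sum(labels[i] for i in train_idx)
    prediction = 1 if ones * 2 >= len(train_idx) else 0
    return sum(labels[i] == prediction for i in test_idx) / len(test_idx)

mean_acc, per_fold = cross_validate(len(labels), k=5, train_and_score=majority_class_score)
print(mean_acc)  # 0.75
```

Each sample lands in the test fold exactly once, so every data point contributes to the averaged score.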

What happens to the validation set in K-fold cross-validation? Does such a set still exist?