Q: I wonder if anyone has tried to solve the ARC tasks with one of the state-of-the-art Multimodal LLMs?

Can LLMs that can process graphics input do the following?

(This question is not about the AI2 Reasoning Challenge (ARC) dataset, a multiple-choice question-answering dataset containing questions from science exams from grade 3 to grade 9.)

Background:

I came across the 2019 paper "On the Measure of Intelligence" by Francois Chollet, a researcher at Google and the creator of the Keras deep learning library.

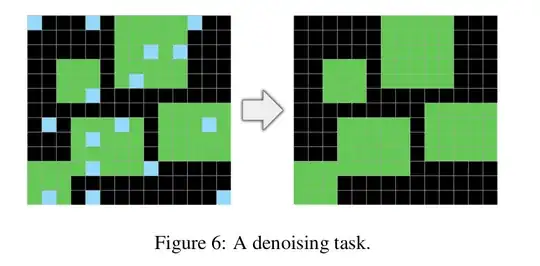

In that paper he proposed the Abstraction and Reasoning Corpus (ARC). You can view it as a benchmark of 400 graphical reasoning puzzles that tests an AI system's abstraction and analogy abilities.

To illustrate, here is an example from the paper:

(A machine must infer what to do from about 3 similar training image pairs per task, and then transform a test picture without seeing the "result" picture on the right.)
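To make the setup concrete, here is a minimal sketch in Python. It assumes the layout used by the public ARC JSON files (a `train` list and a `test` list of pairs, each grid a list of rows of integer color codes). The toy task and the names `mirror_left_right` and `fits_training_pairs` are made up for illustration; they are not from the paper or from any ARC solver.

```python
from typing import Callable, List

Grid = List[List[int]]

# Toy task invented for illustration; it is NOT one of the ARC tasks.
# Assumed layout: "train" pairs with input/output, "test" with input only.
toy_task = {
    "train": [
        {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
        {"input": [[3, 3, 0]], "output": [[0, 3, 3]]},
    ],
    "test": [{"input": [[0, 4, 5]]}],
}


def mirror_left_right(grid: Grid) -> Grid:
    """Hypothetical candidate rule: flip every row horizontally."""
    return [list(reversed(row)) for row in grid]


def fits_training_pairs(task: dict, transform: Callable[[Grid], Grid]) -> bool:
    """True if the candidate rule reproduces every training output exactly."""
    return all(transform(pair["input"]) == pair["output"] for pair in task["train"])


if fits_training_pairs(toy_task, mirror_left_right):
    # Only a rule that explains all training pairs is applied to the test input.
    prediction = mirror_left_right(toy_task["test"][0]["input"])
    print(prediction)
```

The hard part of ARC is of course finding the rule, not checking it; the sketch only shows what "infer from the training pairs, then apply to the test input" means in terms of data.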

2020 state of the art: In 2020 there was a Kaggle competition, and the 1st-place solution got about 20% of the 400 tasks right. (The leaderboard score was "percentage still wrong" or something like that, so the lowest score, 79.4%, won.) The C++ code contains special-purpose image manipulation and inference routines, carefully crafted and combined, as described in a write-up by the author, "icecuber".

Now it's 2023, and OpenAI is making the multimodal GPT-4V generally available.

In April 2023 someone asked a similar question on the OpenAI discussion forum and got no answers. That post was written independently of this one; I only discovered it right before submitting this post.

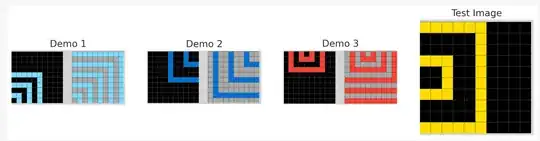

Yesterday I gave ChatGPT-4 Advanced Data Analysis a single example, and the LLM quickly solved the puzzle shown below, sort of. Admittedly, my prompt was in sloppy natural language, and I uploaded 3 badly cropped training images as input.

Task f8c80d96:

With human intelligence I was not able to infer the solution to task f8c80d96 easily. I made 3 wrong guesses.

(Solution: Add 1 vertical yellow line but also replace black background with grey background)

Clearly my attempt is not a systematic study, just practice.

To conclude, I suspect that if GPT-4 can solve 1 task, it can solve many more than 20% of those 400 tasks, e.g. when using the API with carefully designed prompts and instructions.
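As a rough idea of what a "carefully designed prompt" could look like, here is a minimal sketch that turns a task into plain text instead of badly cropped images. The prompt wording, the toy task, and the function names `grid_to_text` and `build_prompt` are my own assumptions, not an established method, and the sketch deliberately stops before any API call.

```python
from typing import List

Grid = List[List[int]]

# Toy task invented for illustration; it is NOT one of the ARC tasks.
toy_task = {
    "train": [
        {"input": [[1, 0], [0, 2]], "output": [[0, 1], [2, 0]]},
        {"input": [[3, 0]], "output": [[0, 3]]},
    ],
    "test": [{"input": [[4, 5]]}],
}


def grid_to_text(grid: Grid) -> str:
    """Render a grid as one line per row, cells separated by spaces."""
    return "\n".join(" ".join(str(cell) for cell in row) for row in grid)


def build_prompt(task: dict) -> str:
    """Assemble a few-shot text prompt from the training pairs and the first test input."""
    parts = [
        "Each example maps an input grid to an output grid.",
        "Cells are integer color codes. Infer the rule and give the test output.",
        "",
    ]
    for i, pair in enumerate(task["train"], start=1):
        parts += [
            f"Example {i} input:",
            grid_to_text(pair["input"]),
            f"Example {i} output:",
            grid_to_text(pair["output"]),
            "",
        ]
    parts += ["Test input:", grid_to_text(task["test"][0]["input"]), "Test output:"]
    return "\n".join(parts)


print(build_prompt(toy_task))
```

The resulting string could be sent to whichever model is being tested, and the reply parsed back into a grid and compared cell by cell with the expected output. Whether a text encoding or an image works better for a multimodal model is exactly the open question here.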