I have a simple curve-fitting problem. I wrote the following PyTorch code:

    import matplotlib.pyplot as plt
    import torch


    class MyDatasetV1(torch.utils.data.Dataset):
        def __init__(self, dataset):
            # Initialize a dataset from a list [x, y] of tensors
            assert isinstance(dataset, list), '"dataset" must be of "list" type!'
            assert isinstance(dataset[0], torch.Tensor), '"x" must be of "torch.Tensor" type!'
            assert isinstance(dataset[1], torch.Tensor), '"y" must be of "torch.Tensor" type!'
            self.x = dataset[0]
            self.y = dataset[1]
            self.length = self.x.shape[0]

        def __len__(self):
            # Get the number of elements in entire dataset
            return self.length

        def __getitem__(self, index):
            return self.x[index], self.y[index]

    class MyModelV2(torch.nn.Module):
        def __init__(self, input_size, output_size, hiddens, weights, biases, batchnorms, activations, dropouts):
            # Initialize a custom fully-connected model
            super(MyModelV2, self).__init__()
            assert len(hiddens) + 1 == len(weights), 'Number of hidden layers must match the number of "weights" units/tensors!'
            assert len(hiddens) + 1 == len(biases), 'Number of hidden layers must match the number of "bias" units/scalars!'
            assert len(hiddens) + 1 == len(batchnorms), 'Number of hidden layers must match the number of "batch normalization" units!'
            assert len(hiddens) + 1 == len(activations), 'Number of hidden layers must match the number of "activation" functions!'
            assert len(hiddens) + 1 == len(dropouts), 'Number of hidden layers must match the number of "dropout" units!'
            self.weights = weights
            self.biases = biases
            self.batchnorms = batchnorms
            self.activations = activations
            self.dropouts = dropouts
            self.layers_size = [input_size]
            self.layers_size.extend(hiddens)
            self.layers_size.append(output_size)
            self.layers = torch.nn.ModuleList()

        def build(self):
            # Build a model with given specifications
            for index in range(len(self.layers_size) - 1):
                layer = torch.nn.Linear(self.layers_size[index], self.layers_size[index + 1])
                if self.weights[index]:
                    self.weights[index](layer.weight)
                if self.biases[index]:
                    self.biases[index](layer.bias)
                self.layers.append(layer)
                if self.batchnorms[index]:
                    self.layers.append(torch.nn.BatchNorm1d(self.layers_size[index + 1]))
                if self.dropouts[index]:
                    self.layers.append(torch.nn.Dropout(self.dropouts[index]))
                self.layers.append(self.activations[index])

        def forward(self, x):
            # Forward pass for a given input
            for layer in self.layers:
                x = layer(x)
            return x

    def set_weight(weights):
        return torch.nn.init.xavier_uniform_(weights)


    def set_bias(biases):
        return torch.nn.init.zeros_(biases)


    torch.manual_seed(7)

    model = MyModelV2(1, 1, [64, 64], 3 * [set_weight], 3 * [set_bias], 3 * [False], 3 * [torch.nn.ReLU()], 3 * [False])
    model.build()

    criterion = torch.nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters())

    x = torch.linspace(-10, 10, 1000 * 1).reshape((1000, 1))
    y = 0.1 * x * torch.cos(x) + 0.05 * torch.normal(1, 2, size=(1000, 1))

    ds = MyDatasetV1([x, y])
    ds_loader = torch.utils.data.DataLoader(ds, batch_size=32, shuffle=True)

    def get_training_loss(model, training_loader, criterion, optimizer):
        # Training loop for a given model
        model.train()
        training_loss = 0.0
        for x_train, y_train in training_loader:
            optimizer.zero_grad()
            y_hat_train = model(x_train)
            train_loss = criterion(y_hat_train, y_train)
            train_loss.backward()
            optimizer.step()
            training_loss += train_loss.item()
        # Calculate the average training loss
        training_loss /= len(training_loader)
        return training_loss


    EPOCHS = 100

    for epoch in range(1, EPOCHS + 1):
        tr = get_training_loss(model, ds_loader, criterion, optimizer)
        print(f'Epoch number: {epoch} - Training error/loss: {tr:.6e}')

    def predictor(model, x):
        # Predict after training for a given model
        model.eval()
        with torch.no_grad():
            x = model(x)
        return x


    y_hat = predictor(model, x)

    plt.figure(dpi=120)
    plt.plot(x.numpy(), y.numpy(), 'ro', markersize=1.5, label='(x, y)')
    # Raw string so that the backslash in \hat is not treated as an escape sequence
    plt.plot(x.numpy(), y_hat.numpy(), 'bo', markersize=1.5, label=r'(x, $\hat{y}$)')
    plt.xlabel('x')
    plt.ylabel('y')
    plt.tight_layout()
    plt.legend()
    plt.show()

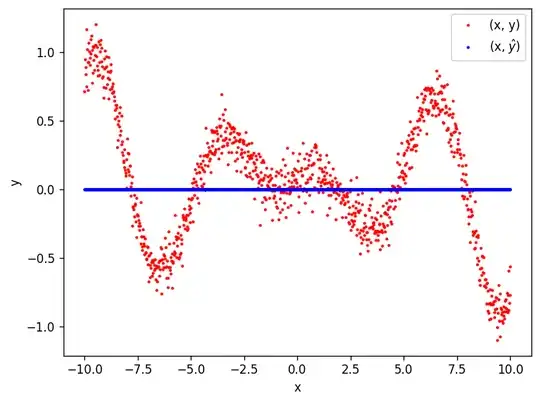

Even though I tried different architectures (e.g., hidden layers of 32, 32 or 64, 32, 16 neurons), every model ends up predicting zero, as shown in the figure above.
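For reference, here is a minimal sketch of the module order that `build()` assembles for this configuration. It mirrors the loop with plain strings instead of PyTorch modules, so it runs without PyTorch; `layer_plan` is a hypothetical helper that is not part of my code, and the string names are only labels.

```python
def layer_plan(layers_size, batchnorms, dropouts, activations):
    """Mirror the ordering logic of MyModelV2.build() using plain strings."""
    plan = []
    for index in range(len(layers_size) - 1):
        plan.append(f"Linear({layers_size[index]}, {layers_size[index + 1]})")
        if batchnorms[index]:
            plan.append(f"BatchNorm1d({layers_size[index + 1]})")
        if dropouts[index]:
            plan.append(f"Dropout({dropouts[index]})")
        # As in build(), the activation is appended after every Linear layer,
        # including the last (output) one.
        plan.append(activations[index])
    return plan


# Same configuration as the model in the question:
# 1 -> 64 -> 64 -> 1, no batch normalization, no dropout, ReLU everywhere.
plan = layer_plan([1, 64, 64, 1], 3 * [False], 3 * [False], 3 * ["ReLU"])
for step in plan:
    print(step)
```

With PyTorch available, `print(model.layers)` after `model.build()` should list the same sequence of modules.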

I reviewed my model many times, but I could not figure out the issue here. What is wrong with it? Thanks in advance!