No, the weights do not need to add up to one. There isn't really a reason to do that. Weights as "contributions" may not be the best way to think about things here -- you're trying to learn a function that transforms the input values into your desired output values. Say you're learning a linear model and your data is 2D and drawn from a line with slope 2, then you'd want your learned weight to be 2.

Additionally, say you have a feature that is negatively correlated with the output. That is, a higher value in the input results in a lower output value. If all your weights are non-negative, you wouldn't be able to encode this at all!
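To make the two points above concrete, here is a minimal sketch using NumPy. The data, the "true" weights `[2.0, -0.5]`, and the random seed are made up for illustration; the fit is plain unconstrained least squares.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only for reproducibility

# Hypothetical data: two features, one with a positive effect on the
# output and one with a negative effect.
X = rng.normal(size=(200, 2))
true_w = np.array([2.0, -0.5])
y = X @ true_w  # noiseless targets, to keep the example simple

# Ordinary least squares with no constraint on the weights.
w_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

print("learned weights:", w_hat)
print("sum of weights:", w_hat.sum())
```

The recovered weights match the ones that generated the data: one is larger than one, one is negative, and they do not sum to one. A model restricted to non-negative weights summing to one could not represent this relationship.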

Finally, as the output would simply be a weighted average of the inputs, the scale of the outputs would be constrained by the scale of the inputs. Consider:

$$f(\mathbf{x}) = \sum_{i=1}^{d} w_i x_i$$

with

$$\sum_{i=1}^{d} w_i = 1$$

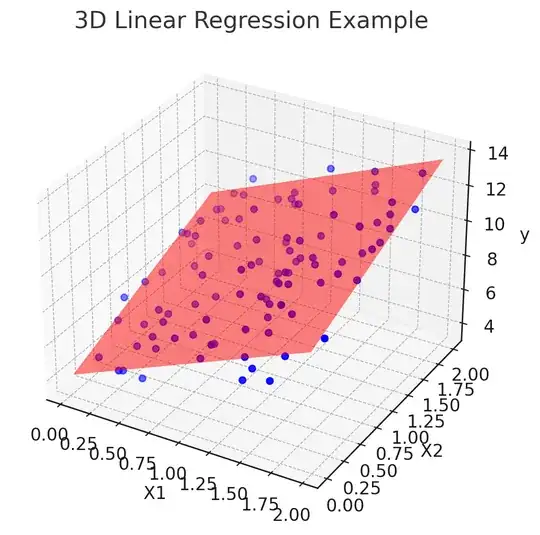

With non-negative weights, $f(\mathbf{x})$ will always be less than or equal to $\max(\{x_1, \dots, x_d\})$. For a simple linear model, you can definitely think of examples where the scale of the output is much larger than that of the input features (for example, number of bathrooms in a house versus its price). Although you can mitigate some of this by rescaling your data (as I will talk about shortly), there is really no reason to artificially constrain your model like this.
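As a quick numerical check, assuming non-negative weights that sum to one, the output of such a model always lies between the smallest and largest input value. The sketch below draws random inputs and random weights (sampled from a Dirichlet distribution purely as a convenient way to get non-negative weights that sum to one) and counts how often the bound is violated.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 5  # number of input features (arbitrary choice for the example)


def weighted_average(w, x):
    """f(x) = sum_i w_i * x_i."""
    return float(np.dot(w, x))


violations = 0
for _ in range(1000):
    x = rng.uniform(0.0, 10.0, size=d)  # input features on a small scale
    w = rng.dirichlet(np.ones(d))       # non-negative weights that sum to 1
    f = weighted_average(w, x)
    # Small tolerance to absorb floating-point rounding.
    if not (x.min() - 1e-12 <= f <= x.max() + 1e-12):
        violations += 1

print("bound violations:", violations)
```

With inputs in the range 0 to 10, no choice of such weights can ever produce an output on the scale of, say, a house price.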

A deep neural network can be thought of as stacks of these linear models, with the output of the previous layer being the input of the next layer. Although these intuitions aren't perfectly transferable to deep neural networks, we would still be constraining the expressiveness of the model in a similar way: an increase in an input dimension can't result in a decrease in the output value, and the scale of the output is constrained by the scale of the input.

Normalization in Neural Networks

You do, however, often need to perform normalization, but this normalization is done on the data and the outputs of hidden layers instead of the weights. If you add a weight penalty to your linear model, for example, you want to make sure that each dimension is in around the same range, so as not to overly penalize features that tend to have larger weights. In neural networks, you also often normalize the outputs of hidden layers; the most common variant is Batch Normalization.

However, the normalization often used in these cases is a bit different from what you mentioned. Also known as standardization, this kind of normalization subtracts the mean (computed over a set of samples) from each sample and divides by the standard deviation (over that same set of samples).
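A minimal sketch of standardization in NumPy is shown below. The function name `standardize`, the small `eps` term (added to avoid division by zero), and the toy data are assumptions for illustration. Batch normalization applies the same centering and scaling to a mini-batch of hidden-layer outputs, followed by a learned scale and shift, which this sketch leaves out.

```python
import numpy as np


def standardize(X, eps=1e-8):
    """Center each column to zero mean and scale it to unit standard deviation.

    X has shape (n_samples, n_features); statistics are computed per feature
    over the samples in X.
    """
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / (std + eps)


# Hypothetical data: number of bathrooms and house price, on very different scales.
X = np.array([
    [1.0, 200_000.0],
    [2.0, 350_000.0],
    [3.0, 500_000.0],
    [2.0, 310_000.0],
])

Z = standardize(X)
print("per-feature mean:", Z.mean(axis=0))
print("per-feature std: ", Z.std(axis=0))
```

After standardization both features have approximately zero mean and unit standard deviation, so a weight penalty treats them on an equal footing.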

The exact reason why this is effective in neural networks is an active area of research. A common explanation is that batch normalization reduces the effects of internal covariate shift, where changes in weights during training result in changes in the output distribution of a layer, which can harm performance as the next layer "expects" the original distribution. Centering and scaling the input to have zero mean and unit standard deviation reduces these changes. This, however, is disputed by this paper, which argues that batch normalization helps by making the loss landscape smoother.