I am reading the RetinaFace paper:

"RetinaFace: Single-stage Dense Face Localisation in the Wild" by Jiankang Deng, Jia Guo, Yuxiang Zhou, Jinke Yu, Irene Kotsia, and Stefanos Zafeiriou

Link: https://arxiv.org/abs/1905.00641

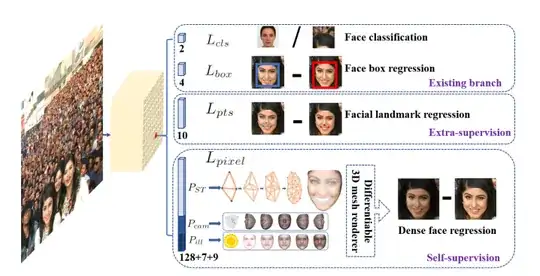

> One of the questions we aim at answering in this paper is whether we can push forward the current best performance (90.3% [67]) on the WIDER FACE hard test set [60] by using extra supervision signal built of five facial landmarks.

Question: what is meant by "extra supervision" and "self-supervision" here?

Also, please suggest some good resources for understanding this paper better.