TL;DR: Temperature is applied after the repetition penalty, so it smooths out the penalty's effect.

They are essentially independent decoding hyperparameters, but they are applied one after the other.

Higher temperature makes the output distribution more uniform, so you are likely to get more diverse generations, but you also risk that they will not make sense (in an extreme case, you might even get malformed words).

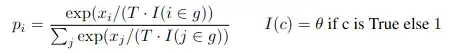

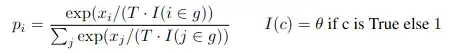
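To make the effect of temperature concrete, here is a minimal sketch in plain Python. The function name `softmax_with_temperature` and the logit values are made up for illustration; this is not the library's code, just the usual "divide the logits by T before the softmax" recipe.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn logits into probabilities after dividing them by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens

sharp = softmax_with_temperature(logits, temperature=0.5)  # peaked distribution
flat = softmax_with_temperature(logits, temperature=2.0)   # closer to uniform

print(sharp)
print(flat)
```

With the higher temperature, the most likely token loses probability mass and the least likely one gains it, which is where both the extra diversity and the extra risk come from.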

Language models, especially when undertrained, tend to repeat what was previously generated. To prevent this, CTRL (a large LM that is now almost forgotten) introduced the repetition penalty, which is now implemented in Hugging Face Transformers. It is described in an unnumbered equation in Section 4.1 on page 5 of the CTRL paper.

It discounts the probability of tokens that already appeared in the generated text, making them less likely to appear again.
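A rough sketch of the discounting step, written in plain Python rather than with the library itself. The helper `apply_repetition_penalty` and the numbers are hypothetical. The sign-aware rule (divide positive logits by the penalty, multiply negative ones) is my understanding of what the Transformers implementation does, so treat it as an assumption and check the library source if the details matter.

```python
def apply_repetition_penalty(logits, generated_ids, penalty=1.2):
    """Lower the score of every token id that already appears in the output."""
    penalized = list(logits)
    for token_id in set(generated_ids):
        score = penalized[token_id]
        # Dividing a negative logit would raise it, so negative scores are
        # multiplied instead; either way the token becomes less likely.
        penalized[token_id] = score * penalty if score < 0 else score / penalty
    return penalized

logits = [3.0, 1.0, -1.0, 0.5]  # made-up scores for a 4-token vocabulary
penalized = apply_repetition_penalty(logits, generated_ids=[0, 2], penalty=1.2)
print(penalized)
```

Tokens 0 and 2 were already generated, so their scores go down, while tokens 1 and 3 are left untouched.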

Discounting already generated tokens is implemented as RepetitionPenaltyLogitsProcessor, and temperature is implemented as TemperatureLogitsWarper in the Transformers library. Processors are applied before warpers, so the discount on already generated tokens is subsequently smoothed by the temperature scaling.
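The ordering can be illustrated with a small self-contained sketch. It does not call Transformers; `penalize`, `softmax`, and `next_token_probs` are hypothetical helpers that only mimic the "penalty first, temperature second" order described above, with made-up logits.

```python
import math

def penalize(logits, generated_ids, penalty):
    """Step 1 (processor stage): discount tokens that were already generated."""
    out = list(logits)
    for i in set(generated_ids):
        out[i] = out[i] * penalty if out[i] < 0 else out[i] / penalty
    return out

def softmax(logits, temperature):
    """Step 2 (warper stage): temperature scaling, then normalization."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(logits, generated_ids, penalty, temperature):
    return softmax(penalize(logits, generated_ids, penalty), temperature)

# Tokens 0 and 1 start with equal scores; token 0 was already generated.
logits = [2.0, 2.0, 0.0]
low_t = next_token_probs(logits, [0], penalty=2.0, temperature=1.0)
high_t = next_token_probs(logits, [0], penalty=2.0, temperature=2.0)

# How strongly the fresh token 1 is preferred over the repeated token 0.
ratio_low_t = low_t[1] / low_t[0]
ratio_high_t = high_t[1] / high_t[0]
print(ratio_low_t, ratio_high_t)
```

In both cases the fresh token stays more likely than the repeated one, but the gap created by the penalty shrinks as the temperature goes up, which is the smoothing effect mentioned in the TL;DR.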