I have a case where my state consists of a relatively large number of features (e.g. 50), whereas my action size is 1. I wonder whether the state features dominate the action in my critic network. I believe that in theory it eventually shouldn't matter, but given the sequential nature of RL training, I am afraid the state features will outweigh the action and its effect will be negligible.

Here is what I have already tried:

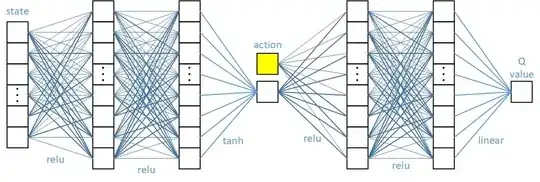

Here, at the layer where the output of the state pathway and the action are combined, I use a tanh activation because my action is in [-1, 1]. This led to almost flat performance from the very beginning, with no improvement at all. I understand this might be due to vanishing gradients caused by tanh. I also tried a linear activation instead of tanh; this time the average episode return fluctuated around some value with no signs of learning.
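
A minimal NumPy sketch of the kind of critic described above, assuming the state passes through one hidden layer whose output is then concatenated with the action before a merge layer. The exact architecture is only shown in the image, so the hidden size (`HIDDEN = 64`), the ReLU in the state pathway and the weight initialisation are hypothetical; `merge_activation` switches between the tanh and linear variants that were tried.

```python
import numpy as np

rng = np.random.default_rng(0)

STATE_DIM = 50   # number of state features, as in the question
ACTION_DIM = 1   # scalar action in [-1, 1]
HIDDEN = 64      # hypothetical hidden size

def dense(in_dim, out_dim):
    """Hypothetical init: normal weights scaled by 1/sqrt(in_dim), zero bias."""
    w = rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(in_dim, out_dim))
    b = np.zeros(out_dim)
    return w, b

# State pathway, merge layer (state features + action), and Q-value head.
W_s, b_s = dense(STATE_DIM, HIDDEN)
W_m, b_m = dense(HIDDEN + ACTION_DIM, HIDDEN)
W_q, b_q = dense(HIDDEN, 1)

def relu(x):
    return np.maximum(x, 0.0)

def critic(state, action, merge_activation=np.tanh):
    """Q(s, a): process the state alone, then concatenate the action."""
    h_s = relu(state @ W_s + b_s)                    # (batch, HIDDEN)
    merged = np.concatenate([h_s, action], axis=-1)  # (batch, HIDDEN + 1)
    h = merge_activation(merged @ W_m + b_m)         # tanh or identity
    return h @ W_q + b_q                             # (batch, 1)

states = rng.normal(size=(8, STATE_DIM))
actions = rng.uniform(-1.0, 1.0, size=(8, ACTION_DIM))
q_tanh = critic(states, actions)
q_linear = critic(states, actions, merge_activation=lambda x: x)
print(q_tanh.shape, q_linear.shape)
```

In this layout the action is just one of the `HIDDEN + 1` inputs to the merge layer, which is the imbalance the question is concerned about.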

What I am currently testing is repeating (stacking) the action, say 50 times, to match the number of state features.
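
The repetition idea can be sketched as follows, assuming a batch of `(batch, 1)` actions is concatenated directly with the 50 raw state features. The helper names `tile_action` and `critic_input` are hypothetical, for illustration only.

```python
import numpy as np

STATE_DIM = 50
N_REPEATS = STATE_DIM  # repeat the scalar action once per state feature

def tile_action(action, n_repeats=N_REPEATS):
    """Repeat a (batch, 1) action along the feature axis -> (batch, n_repeats)."""
    return np.repeat(action, n_repeats, axis=-1)

def critic_input(state, action):
    """Concatenate the state features with the repeated action."""
    return np.concatenate([state, tile_action(action)], axis=-1)

rng = np.random.default_rng(0)
states = rng.normal(size=(4, STATE_DIM))
actions = rng.uniform(-1.0, 1.0, size=(4, 1))
x = critic_input(states, actions)
print(x.shape)  # (4, 100)
```

For a first linear layer, the 50 copies of the action are multiplied by 50 separate weights and summed, which is equivalent to a single action weight equal to their sum. So repetition mainly changes the initial scale and effective learning rate of the action's contribution, not what the layer can represent.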

Are there any other ideas on how to tackle this issue?