I am currently going through the FastAI course and, to practise, I wanted to code from scratch a neural network that classifies the FashionMNIST dataset.

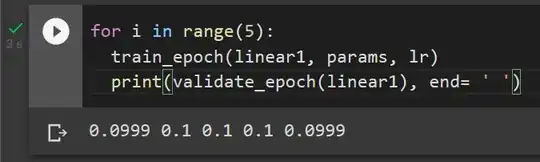

Lately, I've been running into an issue: after reinitializing my weights and biases, I sometimes get a consistent validation accuracy of about 0.9, but occasionally the accuracy gets stuck at 0.1 and never improves from there.

I have tried starting over from scratch several times, but I can't figure out the issue. I would very much appreciate any help :)

Below is my code:

```python
#hide
!pip install -Uqq fastbook
import fastbook
fastbook.setup_book()

#hide
from fastai.vision.all import *
from fastbook import *
matplotlib.rc('image', cmap='Greys')
import torchvision.transforms as transforms
from torchvision.transforms import ToTensor, Lambda
from torch.utils.data import DataLoader
from torchvision import datasets
import torch
import matplotlib.pyplot as plt
from torch.nn import CrossEntropyLoss

tfms = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1037,), (0.3081))])

training_data = datasets.FashionMNIST(
    root='data',
    train=True,
    download=True,
    transform=tfms,
)

train_x = training_data.data.view(-1, 28*28).float()/255

df = pd.DataFrame(train_x[0].view(28,28))
df.style.set_properties(**{'font-size': '6pt'}).background_gradient('Greys')

target_transform = Lambda(lambda y: torch.zeros(10, dtype=float).scatter_(0, y, value=1))
train_y = torch.stack([target_transform(y) for y in training_data.targets])

dset = list(zip(train_x, train_y))
dl = DataLoader(dset, batch_size=1024, shuffle=True)
```
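Two details of this step. First, `training_data.data` is the raw `uint8` image tensor, so the `transform=tfms` passed to the dataset (including `Normalize`) is never applied to `train_x`; the transform only runs when the dataset is indexed, e.g. `training_data[0]`. Second, `target_transform` builds one-hot rows by hand with `scatter_`. A small self-contained check, on a few made-up labels, that the same rows come out of `torch.nn.functional.one_hot`; note that the original uses `dtype=float` (i.e. `torch.float64`), while this sketch uses float32:

```python
import torch
import torch.nn.functional as F

labels = torch.tensor([9, 0, 3])   # made-up integer class labels

# Hand-rolled one-hot, like target_transform (index reshaped to 1-D)
manual = torch.stack([
    torch.zeros(10).scatter_(0, y.view(1), value=1.0) for y in labels
])

# Built-in equivalent; one_hot returns int64, so cast to float
builtin = F.one_hot(labels, num_classes=10).float()

print(torch.equal(manual, builtin))   # True
```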

```python
testing_data = datasets.FashionMNIST(
    root='data',
    train=False,
    download=True,
    transform=tfms,
)

valid_x = testing_data.data.view(-1, 28*28).float()/255
valid_x.shape

valid_y = torch.stack([target_transform(y) for y in testing_data.targets])
valid_y.shape

valid_dset = list(zip(valid_x, valid_y))
valid_dl = DataLoader(dset, batch_size=1024, shuffle=True)
```

Init params

```python
def init_params(size, std=1.0):
    return (torch.randn(size)*std).requires_grad_()

weights = init_params((28*28, 10))
biases = init_params(10, 1)
weights.shape, biases.shape, train_x[0].shape
```
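`init_params` draws every weight with `std=1.0`. For a fixed input row `x`, each logit `x @ weights[:, j]` then has variance equal to the sum of the squared pixel values, so its standard deviation is far above 1 (for the uniform random stand-in below, about sqrt(784/3) ≈ 16), and the initial softmax is already close to one-hot. A quick comparison on random inputs in [0, 1] (not FashionMNIST) against scaling the same weights by 1/sqrt(784), a common fan-in heuristic used here only for illustration:

```python
import torch

torch.manual_seed(0)
# Random stand-in for 256 flattened 28x28 images with pixels in [0, 1]
xb = torch.rand(256, 28 * 28)

w = torch.randn(28 * 28, 10)
w_std1 = w * 1.0                     # as init_params((28*28, 10)) does
w_fanin = w / (28 * 28) ** 0.5       # illustrative 1/sqrt(fan_in) scaling

logits_std1 = xb @ w_std1
logits_fanin = xb @ w_fanin

# Spread of the initial logits under each scheme
print(logits_std1.std().item(), logits_fanin.std().item())

# Mean of the largest softmax probability per row (0.1 would mean "undecided")
top_std1 = logits_std1.softmax(dim=1).max(dim=1).values.mean().item()
top_fanin = logits_fanin.softmax(dim=1).max(dim=1).values.mean().item()
print(top_std1, top_fanin)
```

Because the two weight matrices differ only by a constant factor, the difference in sharpness comes purely from the scale of the initialisation.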

Predict

```python
def linear1(xb):
    return xb@weights + biases
```

Calculate loss

```python
def cross_entropy_loss(predictions, targets):
    predictions = predictions.softmax(dim=1)
    cross = -targets*torch.log(predictions)
    return cross.sum(1).mean()

cross_entropy_loss(linear1(train_x[0:40]), train_y[0:40])
```
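One plausible source of the 0.1 runs is that `cross_entropy_loss` takes `torch.log` of the `softmax` output. When the logits are far apart, `softmax` rounds the small probabilities to exactly 0 in float32, `log(0)` is `-inf`, and the zeros of the one-hot target then give `-0 * -inf = nan`, even for a row the model classifies correctly. Once the loss is NaN, so are the gradients and, after one update, every parameter. A minimal comparison against a version built on `log_softmax`, which computes log-probabilities directly; the extreme logits are made up purely to trigger the underflow. `F.cross_entropy` computes the same loss but takes integer class indices rather than one-hot rows:

```python
import torch
import torch.nn.functional as F

def cross_entropy_naive(logits, targets):
    # Same structure as cross_entropy_loss: softmax, then log
    probs = logits.softmax(dim=1)
    return (-targets * torch.log(probs)).sum(1).mean()

def cross_entropy_stable(logits, targets):
    # log_softmax yields finite log-probabilities for these logits
    return (-targets * logits.log_softmax(dim=1)).sum(1).mean()

logits = torch.tensor([[200.0, 0.0, -200.0]])   # confident and correct
targets = torch.tensor([[1.0, 0.0, 0.0]])

print(cross_entropy_naive(logits, targets).item())    # nan
print(cross_entropy_stable(logits, targets).item())   # 0.0

# With integer labels, the built-in loss matches the stable version
torch.manual_seed(0)
z = torch.randn(8, 10)
labels = torch.randint(0, 10, (8,))
onehot = F.one_hot(labels, num_classes=10).float()
print(torch.allclose(cross_entropy_stable(z, onehot), F.cross_entropy(z, labels)))
```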

Calc grad

```python
def calc_grad(model, xb, yb):
    preds = model(xb)
    loss = cross_entropy_loss(preds, yb)
    loss.backward()
```
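`calc_grad` relies on autograd to push gradients through `softmax` and the loss. For mean softmax cross-entropy over N rows with one-hot targets `y`, the gradient with respect to the logits `z` has the closed form (softmax(z) - y) / N, so every entry lies between -1/N and 1/N; how far each parameter moves is then set by `lr` and by the inputs these values are multiplied with. A small check of that formula against autograd on made-up logits (the `log_softmax` form of the loss is used; mathematically it has the same gradient):

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10, requires_grad=True)   # made-up batch of 4 rows
targets = F.one_hot(torch.tensor([1, 5, 0, 9]), num_classes=10).float()

# Mean softmax cross-entropy, written with log_softmax
loss = (-targets * logits.log_softmax(dim=1)).sum(dim=1).mean()
loss.backward()

# Closed form: d(loss)/d(logits) = (softmax(logits) - targets) / N
with torch.no_grad():
    expected = (logits.softmax(dim=1) - targets) / logits.shape[0]

print(torch.allclose(logits.grad, expected, atol=1e-6))   # True
```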

Train epoch

```python
lr = 10
params = weights, biases

def train_epoch(model, params, lr):
    for xb, yb in dl:
        calc_grad(model, xb, yb)
        for p in params:
            p.data -= p.grad * lr
            p.grad.zero_()
```
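With `lr = 10` and `std=1.0` weights, a single update can push the logits far enough apart that `softmax` underflows, after which the loss and every parameter turn into NaN. A minimal sketch of the same manual SGD loop with three changes that are assumptions of this sketch, not the course's code: the loss uses `log_softmax`, the update runs under `torch.no_grad()` instead of going through `.data`, and the loop raises as soon as a parameter stops being finite, so the failure surfaces on the step where it happens. It trains full-batch on random, linearly separable synthetic data (not FashionMNIST) with a much smaller, illustrative `lr`:

```python
import torch

torch.manual_seed(0)

# Synthetic, linearly separable 3-class data (a stand-in for FashionMNIST)
n, d, k = 200, 20, 3
x = torch.randn(n, d)
true_w = torch.randn(d, k)
y = torch.nn.functional.one_hot((x @ true_w).argmax(dim=1), num_classes=k).float()

weights = (torch.randn(d, k) * 0.01).requires_grad_()
biases = torch.zeros(k, requires_grad=True)
params = (weights, biases)

def loss_fn(logits, targets):
    # log_softmax avoids taking the log of a probability that underflowed to 0
    return (-targets * logits.log_softmax(dim=1)).sum(dim=1).mean()

def train_step(lr):
    loss = loss_fn(x @ weights + biases, y)
    loss.backward()
    with torch.no_grad():  # update in place without recording the op
        for p in params:
            p -= lr * p.grad
            p.grad.zero_()
            if not torch.isfinite(p).all():
                raise RuntimeError("parameter became NaN/inf; try a smaller lr")
    return loss.item()

losses = [train_step(lr=0.1) for _ in range(100)]
print(losses[0], losses[-1])
```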

```python
def batch_accuracy(xb, yb):
    preds = xb.softmax(dim=1).round()
    correct = preds.max(1).indices == yb.max(1).indices
    return correct.float().mean()
```
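`batch_accuracy` rounds the softmax output before taking the max. If no class has a probability above 0.5, the rounded row is all zeros and the `max` index no longer reflects the model's top guess. Taking `argmax` of the raw logits avoids this, and since softmax preserves order it can be skipped entirely. A small example with a made-up prediction whose top class has probability 0.4:

```python
import torch

def batch_accuracy(logits, targets):
    # softmax is monotonic, so argmax of the logits is the predicted class
    preds = logits.argmax(dim=1)
    return (preds == targets.argmax(dim=1)).float().mean()

# One prediction: class 1 is the top guess, but only with probability 0.4
probs = torch.tensor([[0.25, 0.40, 0.35]])
logits = probs.log()                 # softmax(log p) == p when p sums to 1
targets = torch.tensor([[0.0, 1.0, 0.0]])

print(logits.softmax(dim=1).round())        # all zeros: the top guess is lost
print(batch_accuracy(logits, targets))      # accuracy 1.0
```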

```python
def validate_epoch(model):
    accs = [batch_accuracy(model(xb), yb) for xb, yb in valid_dl]
    return round(torch.stack(accs).mean().item(), 4)
```
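Separately from the 0.1 problem, `valid_dl` as written wraps `dset` (the training pairs) rather than `valid_dset`, so `validate_epoch` actually measures accuracy on the training set. A minimal sketch of a validation pass over held-out pairs, assuming the same list-of-`(x, y)` format as `valid_dset`; the tiny linear `model` and the random data are hypothetical stand-ins. It evaluates under `torch.no_grad()`, skips shuffling, and counts correct predictions instead of averaging per-batch means, so a smaller final batch is not over-weighted:

```python
import torch
from torch.utils.data import DataLoader

torch.manual_seed(0)

# Hypothetical stand-ins for valid_x, one-hot valid_y, and the linear model
valid_x = torch.rand(300, 28 * 28)
labels = torch.randint(0, 10, (300,))
valid_y = torch.nn.functional.one_hot(labels, num_classes=10).float()
weights = torch.randn(28 * 28, 10) * 0.01
biases = torch.zeros(10)

def model(xb):
    return xb @ weights + biases

# Build the loader from the validation pairs; shuffling is unnecessary here
valid_dset = list(zip(valid_x, valid_y))
valid_dl = DataLoader(valid_dset, batch_size=128, shuffle=False)

def validate_epoch(model):
    correct, total = 0, 0
    with torch.no_grad():  # evaluation does not need an autograd graph
        for xb, yb in valid_dl:
            preds = model(xb).argmax(dim=1)
            correct += (preds == yb.argmax(dim=1)).sum().item()
            total += len(xb)
    # Count-weighted, so a smaller last batch is not over-weighted
    return round(correct / total, 4)

acc = validate_epoch(model)
print(acc)
```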

```python
train_epoch(linear1, params, lr)
validate_epoch(linear1)

for i in range(5):
    train_epoch(linear1, params, lr)
    print(validate_epoch(linear1), end=' ')

print(linear1(valid_x[0:15]).softmax(dim=1).round().max(1).indices == valid_y[0:15].max(1).indices)
```
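A validation accuracy of exactly 0.1 is what any model scores if it predicts the same class for every image, because FashionMNIST's 10 classes are balanced. That is consistent with the parameters having become NaN: every logit is then NaN, and there is no real prediction left for `batch_accuracy` to compare. A small sketch of both facts on made-up data; in the actual notebook, checking `torch.isnan(weights).any()` after a run that ends at 0.1 would show whether this is what happened:

```python
import torch

# Balanced labels: 10 classes x 100 examples (FashionMNIST is balanced too)
labels = torch.arange(10).repeat(100)

# NaN parameters make every logit NaN
weights = torch.full((28 * 28, 10), float("nan"))
xb = torch.rand(len(labels), 28 * 28)
logits = xb @ weights
print(torch.isnan(logits).all().item())     # True

# Predicting one fixed class for every input scores exactly 1/10
constant_preds = torch.zeros_like(labels)
acc = (constant_preds == labels).float().mean().item()
print(round(acc, 4))                        # 0.1
```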