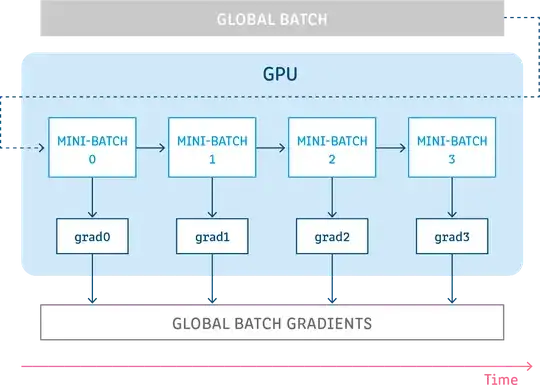

I understand that gradient accumulation (1) reduces memory usage while still letting the machine train on a large dataset, and (2) reduces the noise of the gradient compared to SGD, which smooths the training process.
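To make the two points above concrete, here is a minimal sketch of what I understand gradient accumulation to do, written in plain Python on a made-up one-parameter regression. The helper names `grad` and `accumulated_grad`, the toy data, and the batch sizes are my own assumptions for illustration, not any library's API.

```python
import random

def grad(w, batch):
    """Mean gradient of the squared error (w*x - y)**2 with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def accumulated_grad(w, batch, micro_size):
    """Add up micro-batch gradients, each scaled by 1 / number of micro-batches.

    Assumes len(batch) is a multiple of micro_size, so all micro-batches
    have the same size and the scaled sum equals the full-batch mean.
    """
    micro_batches = [batch[i:i + micro_size] for i in range(0, len(batch), micro_size)]
    total = 0.0
    for mb in micro_batches:
        # Only one micro-batch has to be processed at a time.
        total += grad(w, mb) / len(micro_batches)
    return total

random.seed(0)
# Toy data (hypothetical): y = 3x plus a little Gaussian noise.
xs = [random.uniform(-1.0, 1.0) for _ in range(32)]
data = [(x, 3.0 * x + random.gauss(0.0, 0.1)) for x in xs]
w = 0.5

full = grad(w, data)                   # gradient over the whole batch of 32
accum = accumulated_grad(w, data, 8)   # four micro-batches of 8, accumulated
single = grad(w, data[:1])             # one-sample SGD estimate

print("full batch :", full)
print("accumulated:", accum)
print("one sample :", single)
```

With equal-sized micro-batches, `accum` matches `full` up to floating-point rounding, while `single` is an estimate of the same quantity from one sample and can differ from it noticeably.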

However, what causes the noise in the gradient in the first place? Is it the random sampling strategy used on large datasets, intrinsic noise in the data (such as wrong labels), or something else?

For a small dataset, is it okay to use stochastic gradient descent? And what might be the motivation for using gradient accumulation on it?