I'm trying to get a convolutional autoencoder to reconstruct the images in a dataset with crisp details.

I've read in a couple of places that convolutional autoencoders "naturally produce blurry images". This makes sense to me when the images are complex and the bottleneck is small: the autoencoder is necessarily learning a lossy compression, so some loss of detail is to be expected. However, I find it more surprising with very simple images.

I created a toy dataset of images of circles that vary only in three randomly sampled parameters: position (x, y) and pixel value. I'm trying out a very simple autoencoder, just to test how blurry or crisp the details are. It consists only of a downsampling, an upsampling, and a convolution:

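The code in PyTorch is:

```python
import torch.nn as nn

model = nn.Sequential(
    # "Encoder" part (just a spatial downsampling)
    nn.AvgPool2d(kernel_size=2),
    # Decoder part: bilinear upsampling followed by a 3x3 convolution
    nn.Upsample(scale_factor=2, mode="bilinear", align_corners=False),
    # padding=1 keeps the output the same size as the input, so MSE can compare them
    nn.Conv2d(1, 1, kernel_size=3, padding=1),
)
```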
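A minimal sketch of the data and the training setup, assuming 32x32 single-channel images, a fixed disc radius of 6 pixels, and pixel values in [0.2, 1.0]; none of these numbers come from the question, and `make_circles` is a hypothetical helper. Each step draws a fresh batch of circles and minimizes the MSE between the input and its reconstruction.

```python
import torch
from torch import nn

torch.manual_seed(0)

def make_circles(n, size=32, radius=6):
    # Hypothetical toy data: one filled disc per image, with a random centre (x, y)
    # and a random pixel value; the fixed radius and the value range are assumptions.
    centres = torch.rand(n, 2) * (size - 2 * radius) + radius
    values = torch.rand(n) * 0.8 + 0.2
    coords = torch.arange(size, dtype=torch.float32)
    yy, xx = torch.meshgrid(coords, coords, indexing="ij")
    dist2 = (xx - centres[:, 0, None, None]) ** 2 + (yy - centres[:, 1, None, None]) ** 2
    images = (dist2 <= radius ** 2).float() * values[:, None, None]
    return images.unsqueeze(1)  # (n, 1, size, size), as nn.Conv2d expects

model = nn.Sequential(
    nn.AvgPool2d(kernel_size=2),
    nn.Upsample(scale_factor=2, mode="bilinear", align_corners=False),
    nn.Conv2d(1, 1, kernel_size=3, padding=1),
)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fn = nn.MSELoss()

losses = []
for step in range(300):
    batch = make_circles(64)        # fresh random circles every step
    recon = model(batch)            # same shape as batch thanks to padding=1
    loss = loss_fn(recon, batch)    # the target is the input itself
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first batch MSE: {losses[0]:.4f}, last batch MSE: {losses[-1]:.4f}")
```

The optimizer and learning rate are also assumptions; any standard optimizer should behave similarly on a model with only ten parameters.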

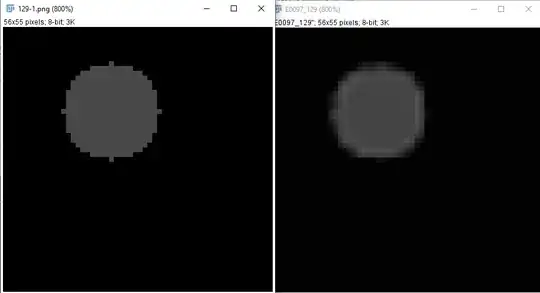

And I train the network on my dataset to reconstruct the original images, with MSE loss. Here is an example of an original image (left), and its reconstructed output at network convergence (right) :

Even though the image is very simple and the loss is quite low, the reconstruction is not crisp. I suspect this effect gets even worse when stacking multiple convolutions, downsampling several times in a row, and adding a dense layer as the bottleneck (as in "real" autoencoders).
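To make concrete one place where edge detail gets softened in this particular network, here is a small 1-D sketch. It assumes a single image row is representative, and that `linear_upsample_1d` mimics `nn.Upsample(mode="bilinear", align_corners=False)` along one axis (my understanding of that convention, not taken from the question); both helper names are hypothetical. A sharp edge comes out of pooling plus upsampling as a ramp, and the ramp's shape depends on where the edge sits relative to the 2x2 pooling grid.

```python
import numpy as np

def avg_pool_1d(x):
    # Mean over non-overlapping pairs: nn.AvgPool2d(kernel_size=2) along one axis.
    return x.reshape(-1, 2).mean(axis=1)

def linear_upsample_1d(x):
    # 2x linear interpolation, align_corners=False convention: output sample i
    # reads the input at coordinate (i + 0.5) / 2 - 0.5; np.interp clamps
    # coordinates that fall outside the input to the edge values.
    out_pos = (np.arange(2 * len(x)) + 0.5) / 2 - 0.5
    return np.interp(out_pos, np.arange(len(x)), x)

# One row crossing a circle's edge, with the edge on a pooling boundary...
aligned = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
# ...and the same edge shifted by one pixel, so it falls inside a pooling window.
misaligned = np.array([0, 0, 0, 1, 1, 1, 1, 1], dtype=float)

for name, row in [("aligned", aligned), ("misaligned", misaligned)]:
    pooled = avg_pool_1d(row)
    print(name, pooled, linear_upsample_1d(pooled))
# aligned edge    -> upsampled values 0, 0, 0, 0.25, 0.75, 1, 1, 1
# misaligned edge -> pooled 0, 0.5, 1, 1 -> upsampled 0, 0.125, 0.375, 0.625, 0.875, 1, 1, 1
```

Whatever single 3x3 filter training settles on has to turn both of these differently shaped ramps back into steps at different positions, using the same linear weights everywhere in the image.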

So I wonder whether part of the blurriness associated with convolutional autoencoders stems from convolutions simply not being a great tool for producing crisp details. Is that the case? Is there another way to upsample images in the decoder? For example, I used to use transposed convolutions instead of upsampling + convolution, and image quality improved after switching to the latter; is there another, similar "step forward"? And if convolutions are inadequate, how come other generative architectures (e.g., modern GANs) are said to produce crisper images? Don't those also ultimately rely on convolutions?
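For comparison, a minimal sketch of the two decoder styles mentioned above, fed the same pooled input: upsampling followed by a 3x3 convolution, and a transposed convolution like the one used earlier. The transposed-convolution hyperparameters (`kernel_size=4, stride=2, padding=1`) are an assumed choice, picked only because they double the spatial size exactly; the variable names are hypothetical.

```python
import torch
from torch import nn

# Current decoder: fixed bilinear upsampling, then a learned 3x3 convolution.
upsample_conv = nn.Sequential(
    nn.AvgPool2d(kernel_size=2),
    nn.Upsample(scale_factor=2, mode="bilinear", align_corners=False),
    nn.Conv2d(1, 1, kernel_size=3, padding=1),
)

# Earlier alternative: a single learned transposed convolution does the upsampling.
# With kernel_size=4, stride=2, padding=1 (assumed values):
# out = (in - 1) * 2 - 2 * 1 + 4 = 2 * in, so H and W are doubled exactly.
transposed_conv = nn.Sequential(
    nn.AvgPool2d(kernel_size=2),
    nn.ConvTranspose2d(1, 1, kernel_size=4, stride=2, padding=1),
)

x = torch.rand(4, 1, 32, 32)
for name, model in [("upsample + conv", upsample_conv), ("transposed conv", transposed_conv)]:
    print(name, tuple(model(x).shape))
```

Both variants map a 32x32 input back to 32x32; the difference is whether the interpolation weights are fixed (bilinear) or learned (transposed convolution).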