What does this difference in train and test accuracy mean?

3 Answers

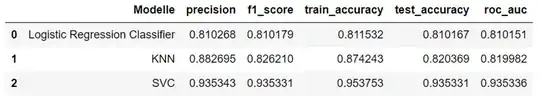

For the Logistic Regression classifier and SVC, the train_accuracy and test_accuracy are very similar, so there is no evidence of over- or underfitting. However, KNN shows a train_accuracy that is higher than its test_accuracy, which suggests overfitting. Alternatively, depending on your sample size, this difference may well be within the expected range of random variation.
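To judge whether a gap like this is "within the expected range of error", one rough approach is to treat each test prediction as an independent right/wrong trial, so test accuracy has a binomial standard error of sqrt(p(1 - p) / n). The sketch below assumes that independence and uses a hypothetical "within two standard errors" rule of thumb. It is a quick sanity check, not a formal hypothesis test, and the numbers are made up rather than taken from the question.

```python
import math


def accuracy_standard_error(accuracy: float, n_test: int) -> float:
    """Binomial standard error of an accuracy measured on n_test examples.

    Assumes each test prediction is an independent right/wrong trial.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if n_test <= 0:
        raise ValueError("n_test must be positive")
    return math.sqrt(accuracy * (1.0 - accuracy) / n_test)


def gap_within_noise(train_acc: float, test_acc: float, n_test: int, z: float = 2.0) -> bool:
    """Hypothetical rule of thumb: is |train - test| within z standard errors?"""
    se = accuracy_standard_error(test_acc, n_test)
    return abs(train_acc - test_acc) <= z * se


# Hypothetical numbers, not the values from the question's image
print(accuracy_standard_error(0.80, 100))   # about 0.04
print(gap_within_noise(0.85, 0.80, 100))    # gap 0.05 <= 2 * 0.04, so True
print(gap_within_noise(0.85, 0.80, 2000))   # smaller SE with more data, so False
```

The same 5-point gap can be plausible noise on 100 test examples but a real difference on 2,000, which is why sample size matters here.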

In machine learning, the model is trained on one dataset (i.e., the training data). Evaluating the model (i.e., estimating its "real world" performance by calculating accuracy or some other metric) on the same dataset it was trained on would give a biased, overly optimistic estimate. Instead, evaluation is performed on an independent dataset drawn from the same distribution (i.e., the test data) to get an unbiased estimate of model performance. However, it is still important to compare accuracy (or whatever performance metric you choose) between the training and test data to check for over- or underfitting.
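A minimal sketch of this workflow with scikit-learn, assuming the three models in the question are `LogisticRegression`, `SVC` and `KNeighborsClassifier` with near-default settings. The helper `train_test_accuracies` is hypothetical, and synthetic data from `make_classification` stands in for the question's dataset.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC


def train_test_accuracies(X, y, test_size=0.25, random_state=0):
    """Fit each model on the training split and score it on both splits."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, random_state=random_state, stratify=y
    )
    models = {
        "LogisticRegression": LogisticRegression(max_iter=1000),
        "SVC": SVC(),
        "KNN": KNeighborsClassifier(n_neighbors=5),
    }
    results = {}
    for name, model in models.items():
        model.fit(X_train, y_train)
        # For scikit-learn classifiers, .score() returns mean accuracy
        results[name] = {
            "train_accuracy": model.score(X_train, y_train),
            "test_accuracy": model.score(X_test, y_test),
        }
    return results


# Synthetic stand-in data; the question's dataset is not available
X, y = make_classification(n_samples=1000, n_features=20, flip_y=0.1, random_state=0)
for name, acc in train_test_accuracies(X, y).items():
    print(f"{name:>18}: train={acc['train_accuracy']:.3f} test={acc['test_accuracy']:.3f}")
```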

Overfitting means that your model "fits" the training data very well and achieves high accuracy on it, but performs poorly (or at least noticeably worse) on the test data. This happens because the model fits not only the signal in the training data but also the noise. Noise is random, so it will almost certainly be different in the test set; therefore, the model doesn't generalize well to other datasets.
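To see this effect, here is a sketch on synthetic data with injected label noise (`flip_y` randomly assigns the class of about 20% of the samples). A 1-nearest-neighbour classifier memorizes every training point, noise included, so it scores perfectly on the training split but worse on the test split, while a larger `k` smooths over some of the noise. The dataset and the values of `k` are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# flip_y=0.2: the class of ~20% of samples is assigned at random (label noise)
X, y = make_classification(n_samples=600, n_features=10, flip_y=0.2, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)


def knn_accuracies(k):
    """Return (train accuracy, test accuracy) for a k-NN classifier."""
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    return model.score(X_train, y_train), model.score(X_test, y_test)


for k in (1, 15):
    train_acc, test_acc = knn_accuracies(k)
    print(f"k={k:>2}: train={train_acc:.3f} test={test_acc:.3f} gap={train_acc - test_acc:.3f}")
```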

Underfitting means that the model performs poorly on both datasets. This is typically because the model lacks the "expressivity" or "capacity" to learn the signal in the data, or because the data is random and has no "signal" to speak of (and, therefore, nothing to learn from).
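Underfitting from too little capacity can be illustrated with two concentric circles, which no straight line can separate. In this sketch (synthetic data, arbitrary parameters), a linear `LogisticRegression` scores poorly on both splits, while an RBF-kernel `SVC`, which can learn a curved boundary, does well on both.

```python
from sklearn.datasets import make_circles
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: the classes are not linearly separable
X, y = make_circles(n_samples=1000, factor=0.5, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

linear = LogisticRegression().fit(X_train, y_train)  # straight-line boundary: too little capacity
rbf = SVC(kernel="rbf").fit(X_train, y_train)         # can learn a circular boundary

for name, model in (("linear", linear), ("rbf SVC", rbf)):
    print(f"{name:>8}: train={model.score(X_train, y_train):.3f} test={model.score(X_test, y_test):.3f}")
```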

The roc_auc metric does not show that the model is in the overfitting zone.

An accuracy of about 0.8 means that, on average, about 8 out of every 10 predictions are right and 2 are wrong.

Whether that accuracy is good enough depends on the application domain and on what you expect from the model.
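Note that the "8 out of 10" reading applies to accuracy, i.e. the fraction of hard predictions that are correct. ROC AUC is a ranking metric: it is the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, so the two numbers can differ. The ten labels and scores below are hypothetical and only illustrate the difference; the 0.5 threshold is likewise an assumption.

```python
from sklearn.metrics import accuracy_score, roc_auc_score

# Ten hypothetical predictions (not the question's data)
y_true = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
scores = [0.10, 0.20, 0.30, 0.40, 0.60, 0.45, 0.70, 0.80, 0.90, 0.95]

# Accuracy needs hard labels, obtained here with a 0.5 threshold
y_pred = [1 if s >= 0.5 else 0 for s in scores]

acc = accuracy_score(y_true, y_pred)  # 8 of 10 correct -> 0.8
auc = roc_auc_score(y_true, scores)   # 24 of 25 positive/negative pairs ranked correctly -> 0.96
print(f"accuracy={acc:.2f}  roc_auc={auc:.2f}")
```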

Strictly speaking, you cannot diagnose overfitting here, because overfitting is assessed by comparing losses (such as the training and validation loss), not metrics like accuracy.
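As a sketch of what a loss-based comparison might look like, the snippet below computes scikit-learn's `log_loss` (cross-entropy) on the training and test splits next to the accuracies. The synthetic data and the choice of `LogisticRegression` are assumptions for illustration. Because log loss uses predicted probabilities rather than hard labels, it can reveal growing overconfidence on the test set even when accuracy barely changes.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (assumption, not the question's dataset)
X, y = make_classification(n_samples=1000, n_features=20, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Cross-entropy (log) loss is computed from predicted probabilities
train_loss = log_loss(y_train, model.predict_proba(X_train))
test_loss = log_loss(y_test, model.predict_proba(X_test))
train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)

print(f"loss:     train={train_loss:.3f} test={test_loss:.3f}")
print(f"accuracy: train={train_acc:.3f} test={test_acc:.3f}")
```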
