I'm attempting to program my own system to run a neural network. To reduce the number of nodes needed, it was suggested that I make it treat all rotations of an input identically.

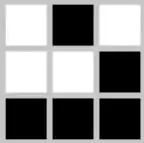

My network aims to learn and predict Conway's Game of Life by looking at every square and its surrounding squares in a grid, and giving the output for that square. Its input is a string of 9 bits:

The above is represented as 010 001 111.
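To make the encoding concrete, here is a minimal sketch of how a 3x3 neighbourhood could be flattened into the 9-bit string, row by row, together with the standard Game of Life rule that gives the target output for the centre square. The names `encode` and `next_state` are only illustrative and are not part of my actual program.

```python
def encode(grid):
    """Flatten a 3x3 grid (rows of 0/1) into a 9-character bit string, row by row."""
    return "".join(str(cell) for row in grid for cell in row)


def next_state(bits):
    """Apply the Game of Life rule to the centre cell of a 9-bit neighbourhood."""
    centre = int(bits[4])                      # index 4 is the middle square
    neighbours = sum(int(b) for b in bits) - centre
    if centre == 1:
        # A live cell survives with 2 or 3 live neighbours.
        return 1 if neighbours in (2, 3) else 0
    # A dead cell becomes alive with exactly 3 live neighbours.
    return 1 if neighbours == 3 else 0


glider = [[0, 1, 0],
          [0, 0, 1],
          [1, 1, 1]]
bits = encode(glider)
print(bits, next_state(bits))  # 010001111 0
```

For the glider the centre square is dead and has five live neighbours, so the expected output is 0.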

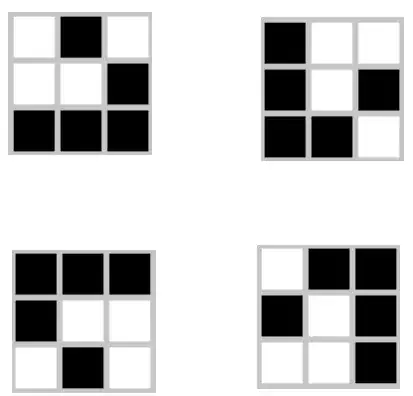

There are three other rotations of this shape however, and all of them produce the same output:

My network topology is 9 input nodes and 1 output node, which gives the next state of the centre square of the input. How can I construct the hidden layer(s) so that they treat all of these rotations as the same input, cutting the number of distinct inputs down to roughly a quarter of the original?

Edit:

There is also a flip of each rotation which produces an identical result. Incorporating these will cut my inputs to roughly an eighth of the original. With the glider, my aim is for all eight of these inputs to be treated exactly the same. Will this have to be done with pre-processing, or can I incorporate it into the network?
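To show what I mean by "treated exactly the same", here is a rough sketch of the pre-processing option: generate every rotation and flip of a 9-bit input and map them all to one fixed representative before the network sees them. The function names (`rotate`, `flip`, `variants`, `canonical`) and the choice of the lexicographically smallest string as the representative are assumptions made for illustration, not an established method.

```python
def rotate(bits):
    """Rotate a row-major 3x3 bit string 90 degrees clockwise."""
    # New cell (r, c) takes its value from old cell (2 - c, r).
    return "".join(bits[3 * (2 - c) + r] for r in range(3) for c in range(3))


def flip(bits):
    """Mirror a row-major 3x3 bit string left to right."""
    return "".join(bits[3 * r + (2 - c)] for r in range(3) for c in range(3))


def variants(bits, with_flips=True):
    """Return the set of rotations (and optionally flips) of the input."""
    starts = (bits, flip(bits)) if with_flips else (bits,)
    out = set()
    for current in starts:
        for _ in range(4):
            out.add(current)
            current = rotate(current)
    return out


def canonical(bits, with_flips=True):
    """Pick one fixed representative: the lexicographically smallest variant."""
    return min(variants(bits, with_flips))


glider = "010001111"
print(len(variants(glider)))  # 8

all_inputs = [format(i, "09b") for i in range(512)]
print(len({canonical(b, with_flips=False) for b in all_inputs}))  # 140
print(len({canonical(b) for b in all_inputs}))  # 102
```

The counts are a little above 512/4 = 128 and 512/8 = 64 because patterns that are themselves symmetric have fewer than four or eight distinct variants.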