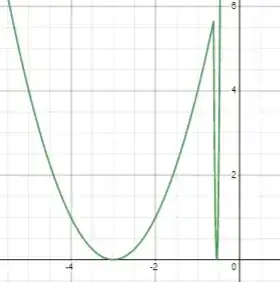

There is one problem that has been bugging me for quite a long time: the non-convex shape of the loss (multiple minima, e.g. as shown here) of neural networks that use a quadratic loss function.

Question: Why is a “common” AI problem usually non-convex with multiple minima, even though we use e.g. quadratic loss functions (which lectures usually draw as a simple, convex, quadratic function such as x^2)?
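To make the distinction concrete, here is a minimal Python sketch (an illustration only, not part of the original question). It assumes a hypothetical single-neuron model `y_hat = tanh(w * x)` with one data point `x = 1, y = 1`, and checks midpoint convexity, f((a+b)/2) <= (f(a)+f(b))/2, which every convex function must satisfy. The squared loss passes the check as a function of the prediction `y_hat`, but fails it as a function of the weight `w` once the prediction goes through `tanh`.

```python
import math

def sq_loss(y_hat, y):
    # Quadratic loss: convex as a function of the prediction y_hat.
    return (y_hat - y) ** 2

def loss_in_weight(w, x=1.0, y=1.0):
    # Hypothetical one-neuron model y_hat = tanh(w * x); the loss is now a
    # function of the weight w, composed through the non-linearity.
    return sq_loss(math.tanh(w * x), y)

def midpoint_convex(f, a, b):
    # Necessary condition for convexity on the segment [a, b].
    return f((a + b) / 2) <= (f(a) + f(b)) / 2

grid = [i * 0.5 for i in range(-6, 7)]

# Convex in y_hat: the condition holds for every pair on the grid.
convex_in_output = all(
    midpoint_convex(lambda t: sq_loss(t, 1.0), a, b) for a in grid for b in grid
)

# Not convex in w: the condition fails for the pair a = -4, b = 0.
convex_in_weight = midpoint_convex(loss_in_weight, -4.0, 0.0)

print(convex_in_output)   # True
print(convex_in_weight)   # False
```

Here the non-convexity comes from `tanh` saturating: for very negative `w` the loss plateaus near 4 instead of curving upward, even though the outer function is the same convex parabola.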

My guess:

Is it because we feed the loss function with our highly non-linear model output, so that the resulting total loss surface is highly non-linear/non-convex? Specifically, is the quadratic loss only approximating an infinitesimally small neighbourhood around a specific point (minibatch) as quadratic? Is that guess correct? If so, would this imply that highly non-linear, very deep and complex models have a highly non-linear loss surface, while shallower models have fewer minima and a one-layer network has a convex loss surface?
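The depth part of the guess can be probed with an equally small sketch, again only an illustration under stated assumptions: a purely linear one-layer model `y_hat = w * x` (no activation) versus a hypothetical two-layer linear model `y_hat = w2 * w1 * x`, both with squared loss on the single point `x = 1, y = 1`. The one-layer loss `(w - 1)^2` is convex in `w`. The two-layer loss has two global minima, `(1, 1)` and `(-1, -1)`, and their midpoint `(0, 0)` has strictly higher loss, so it cannot be convex even though no non-linear activation is involved.

```python
def loss_one_layer(w, x=1.0, y=1.0):
    # Linear model y_hat = w * x with squared loss: convex in w.
    return (w * x - y) ** 2

def loss_two_layer(w1, w2, x=1.0, y=1.0):
    # Hypothetical two-layer linear model y_hat = w2 * w1 * x.
    return (w2 * w1 * x - y) ** 2

grid = [i * 0.5 for i in range(-6, 7)]

# One layer: midpoint convexity holds for every pair on the grid.
one_layer_convex = all(
    loss_one_layer((a + b) / 2) <= (loss_one_layer(a) + loss_one_layer(b)) / 2
    for a in grid for b in grid
)

# Two layers: two minima related by flipping the sign of both weights.
p, q = (1.0, 1.0), (-1.0, -1.0)
mid = ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)

print(one_layer_convex)                                              # True
print(loss_two_layer(*p), loss_two_layer(*q), loss_two_layer(*mid))  # 0.0 0.0 1.0
```

The sign-flip symmetry between the two minima is one well-known source of multiple equivalent minima in multi-layer networks. Whether a one-layer model is convex depends on its activation: a linear output with squared loss is convex in the weights, while a saturating activation need not be.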