I am trying to retrain ResNet-50 for iris flower classification in TensorFlow (version 2.3.0) using the following code:

import tensorflow as tf

import cv2, random

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report

from random import shuffle

from IPython.display import SVG

import numpy as np # linear algebra

import pandas as pd

import shutil

import matplotlib.pyplot as plt

%matplotlib inline

from IPython.display import Image, display

import os

print(os.listdir("./iris recognition/flowers"))

labels = os.listdir("./iris recognition/flowers")

num_classes = len(set(labels))

IMAGE_SIZE= 224

# Create model

model = tf.keras.Sequential()

model.add(tf.keras.applications.ResNet50(include_top=False, weights='imagenet'))

model.add(tf.keras.layers.GlobalAveragePooling2D())

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Dense(num_classes, activation='softmax'))

# Do not train the first layer (ResNet), as it is already pre-trained

model.layers[0].trainable = False

# Compile model

from tensorflow.python.keras import optimizers

sgd = optimizers.SGD(lr = 0.01, decay = 1e-6, momentum = 0.9, nesterov = True)

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

train_folder = './iris recognition/flowers'

image_size = 224

data_generator = tf.keras.preprocessing.image.ImageDataGenerator(
    preprocessing_function=tf.keras.applications.resnet50.preprocess_input,
    horizontal_flip=True,
    width_shift_range=0.2,
    height_shift_range=0.2,
    validation_split=0.2)  # set validation split

train_generator = data_generator.flow_from_directory(
    train_folder,
    target_size=(image_size, image_size),
    batch_size=10,
    class_mode='categorical',
    subset='training'
)

validation_generator = data_generator.flow_from_directory(
    train_folder,
    target_size=(image_size, image_size),
    batch_size=10,
    class_mode='categorical',
    subset='validation'
)

NUM_EPOCHS = 70

EARLY_STOP_PATIENCE = 5

from tensorflow.python.keras.callbacks import EarlyStopping, ModelCheckpoint

cb_early_stopper = EarlyStopping(monitor = 'val_loss', patience = EARLY_STOP_PATIENCE)

cb_checkpointer = ModelCheckpoint(filepath='./working/best.hdf5',
                                  monitor='val_loss',
                                  save_best_only=True,
                                  mode='auto')

import math

fit_history = model.fit(
    train_generator,
    steps_per_epoch=10,
    validation_data=validation_generator,
    validation_steps=10,
    epochs=NUM_EPOCHS,
    callbacks=[cb_checkpointer, cb_early_stopper])

model.load_weights("./working/best.hdf5")
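A note on the step counts used above: with `batch_size=10` and `steps_per_epoch=10`, each epoch draws only 10 batches of 10 images, i.e. 100 images, regardless of how large the dataset is. A minimal sketch of deriving the step counts from the dataset size instead is given below. The helper name `steps_for` and the sample counts (800 and 200) are hypothetical placeholders for illustration, not figures from this dataset.

```python
import math


def steps_for(num_samples: int, batch_size: int) -> int:
    """Return the number of batches needed to cover every sample once."""
    if num_samples < 0 or batch_size <= 0:
        raise ValueError("num_samples must be >= 0 and batch_size must be > 0")
    # Round up so the final, possibly smaller, batch is still counted.
    return math.ceil(num_samples / batch_size)


# Hypothetical dataset sizes, for illustration only.
train_samples = 800
val_samples = 200
batch_size = 10

steps_per_epoch = steps_for(train_samples, batch_size)
validation_steps = steps_for(val_samples, batch_size)
print(steps_per_epoch, validation_steps)
```

With the generators from the code above, the real counts should be available as `train_generator.samples` and `validation_generator.samples`. Alternatively, omitting `steps_per_epoch` and `validation_steps` from `model.fit` lets Keras run through the whole generator each epoch.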

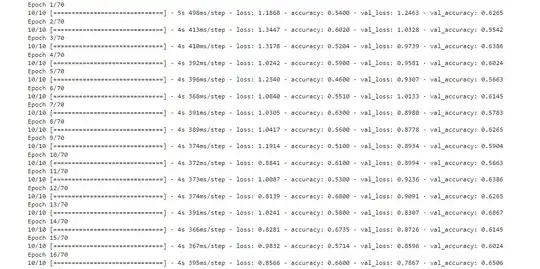

I tried changing the number of epochs, steps_per_epoch, and validation_steps; however, the model accuracy did not improve, as shown in the image above.

I then ran several trials with batch sizes of 32, 64, and 128. For each trial, I tried with and without:

steps_per_epoch=10.

sgd = optimizers.SGD(lr = 0.01, decay = 1e-6, momentum = 0.9, nesterov = True)
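As a rough check on what `decay = 1e-6` does in the SGD line above, the sketch below assumes the time-based rule that Keras optimizers in TF 2.x apply when `decay` is set, namely `lr_t = lr / (1 + decay * iterations)`. The function name `decayed_lr` is hypothetical, and the iteration count assumes all 70 epochs run at 10 steps each, which early stopping may cut short.

```python
def decayed_lr(initial_lr: float, decay: float, iterations: int) -> float:
    """Time-based decay: the rate shrinks as the iteration count grows."""
    return initial_lr / (1.0 + decay * iterations)


# NUM_EPOCHS * steps_per_epoch, taken from the code in the post.
total_iterations = 70 * 10

final_lr = decayed_lr(0.01, 1e-6, total_iterations)
print(f"learning rate after {total_iterations} iterations: {final_lr:.8f}")
```

Under that assumption, the learning rate stays just below 0.01 for the whole run, so the `decay` setting has almost no effect at this number of iterations.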

The best accuracy I achieved was 72.29%, with a batch size of 128, which also gave the most stable training.

Any suggestions, please?