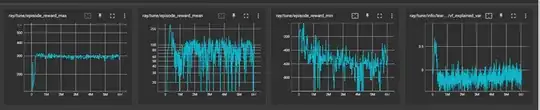

I trained an RL agent (PPO) for 6 million steps to solve the OpenAI Gym LunarLander-v2 environment. Surprisingly, the agent performs best after only 320K steps and gets worse from then on. In the TensorBoard log, the mean reward, the min reward and the explained variance all reach their highest values at 320K training steps.
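For reference, the explained variance logged during PPO training measures how well the value function (critic) predicts the empirical returns: `1 - Var[returns - values] / Var[returns]`. A score of 1.0 is a perfect fit, 0.0 is what always predicting the mean return would score, and negative values are worse than that. The function below is a minimal NumPy sketch of this standard formula. It is not code taken from stable-baselines or RLlib, and the example arrays are illustrative.

```python
import numpy as np


def explained_variance(values: np.ndarray, returns: np.ndarray) -> float:
    """Return 1 - Var[returns - values] / Var[returns].

    1.0: value estimates match the returns exactly.
    0.0: no better than always predicting the mean return.
    < 0: worse than predicting the mean return.
    """
    var_returns = np.var(returns)
    if var_returns == 0:
        # The ratio is undefined when the returns are constant.
        return float("nan")
    return float(1.0 - np.var(returns - values) / var_returns)


returns = np.array([1.0, 2.0, 3.0, 4.0])
print(explained_variance(returns, returns))  # 1.0 (perfect critic)
print(explained_variance(np.full(4, returns.mean()), returns))  # 0.0 (mean-only critic)
```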

I have seen the same behaviour with both stable-baselines and RLlib, and in other environments as well.

Why does this happen? Is this normal behaviour in reinforcement learning, or do I need to adjust some training hyperparameters for the agent to keep improving?
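One hyperparameter change that is often tried when PPO performance peaks and then drifts is to anneal the learning rate toward zero over training instead of keeping it constant. This sketch assumes stable-baselines3. That library accepts a callable for `learning_rate` (and for PPO's `clip_range`). The callable receives `progress_remaining`, which goes from 1.0 at the start of training to 0.0 at the end. The helper name `linear_schedule` and the starting value `3e-4` are illustrative choices, not values from the run described above.

```python
from typing import Callable


def linear_schedule(initial_value: float) -> Callable[[float], float]:
    """Return a schedule that decays linearly from initial_value to 0.

    The returned function expects progress_remaining, which goes from
    1.0 (start of training) to 0.0 (end of training).
    """

    def schedule(progress_remaining: float) -> float:
        return progress_remaining * initial_value

    return schedule


lr = linear_schedule(3e-4)
print(lr(1.0))  # 0.0003 at the start of training
print(lr(0.5))  # half the initial value midway through
print(lr(0.0))  # 0.0 at the end of training
```

With stable-baselines3 this would be passed as `PPO("MlpPolicy", env, learning_rate=linear_schedule(3e-4))`. Whether it actually prevents the drop on LunarLander-v2 has to be checked empirically.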

I would like the agent to keep increasing its min and mean reward until they come close to the max reward. Is that realistic?
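Whether or not the min and mean reward can be pushed higher, a common safeguard against the late-training drop is to evaluate the policy periodically and keep the checkpoint with the best evaluation reward rather than the final one. In stable-baselines3, `EvalCallback` with `best_model_save_path` serves this purpose. The class below is a hypothetical, library-agnostic sketch of that bookkeeping. It ranks evaluations by mean return and breaks ties with the min return. The evaluation returns in the usage lines are made-up illustrative numbers, not results from the run described above.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class BestCheckpointTracker:
    """Hypothetical helper: remembers the training step whose evaluation
    episodes had the highest mean return (ties broken by the higher min)."""

    best_step: Optional[int] = None
    best_mean: float = float("-inf")
    best_min: float = float("-inf")

    def update(self, step: int, episode_returns: List[float]) -> bool:
        """Record one evaluation; return True if it is a new best."""
        m, lo = mean(episode_returns), min(episode_returns)
        # Tuple comparison: higher mean wins, equal means fall back to min.
        if (m, lo) > (self.best_mean, self.best_min):
            self.best_step, self.best_mean, self.best_min = step, m, lo
            return True  # a real training loop would save the weights here
        return False


tracker = BestCheckpointTracker()
tracker.update(100_000, [120.0, 80.0, 150.0])
tracker.update(200_000, [210.0, 180.0, 230.0])
tracker.update(300_000, [190.0, 40.0, 220.0])
print(tracker.best_step)  # 200000
```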