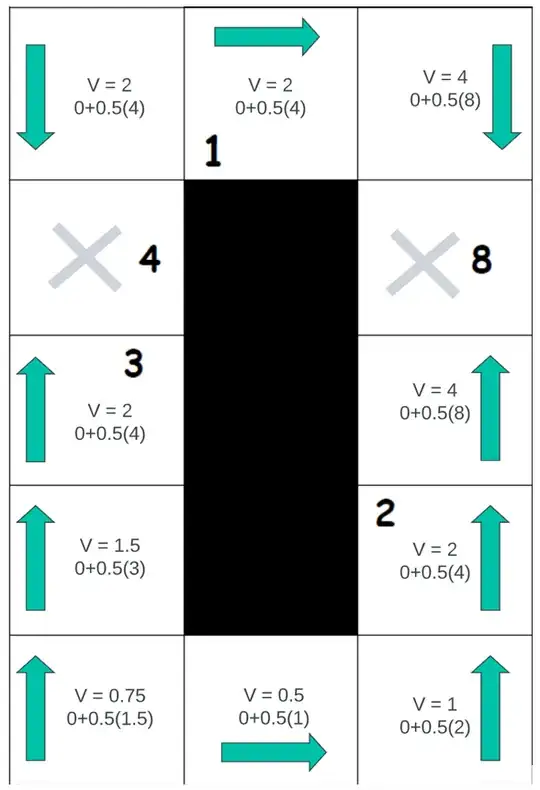

I am practicing the Bellman equation on grid-world examples. In this scenario some grid squares are numbered: in a numbered square the agent can either terminate and collect a reward equal to the number in that square, or decline to exit and simply move on to the next grid square.

Since this is a deterministic grid, I have used the following Bellman equation: $$V(s) = \max_a\left(R(s,a)+\gamma V(s')\right)$$

Here $s'$ is the state reached from $s$ by taking action $a$, $\gamma=0.5$, and the reward for any movement is $0$. I chose $\gamma=0.5$ so that the agent balances long-term and short-term thinking.
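To make the backup concrete, here is a minimal sketch of value iteration with this equation. The layout is a hypothetical three-square corridor (an exit worth $3$, an empty square, an exit worth $4$), not the grid in the image, and the names `exit_rewards` and `value_iteration` are made up for illustration. It also assumes that the exit reward is collected immediately when the exit action is taken, so it is not discounted.

```python
GAMMA = 0.5        # discount factor from the question
MOVE_REWARD = 0.0  # reward for any movement, from the question

# Hypothetical 1-D corridor, NOT the grid in the image:
# a number is an exit reward, None is an empty square.
exit_rewards = [3.0, None, 4.0]


def value_iteration(exit_rewards, gamma=GAMMA, move_reward=MOVE_REWARD, sweeps=50):
    """Repeatedly apply V(s) = max_a (R(s, a) + gamma * V(s'))."""
    n = len(exit_rewards)
    values = [0.0] * n
    for _ in range(sweeps):
        new_values = []
        for s in range(n):
            candidates = []
            # Exit action: collect the reward; the episode ends, so there
            # is no future value to add.
            if exit_rewards[s] is not None:
                candidates.append(exit_rewards[s])
            # Move actions: step left or right, staying inside the corridor.
            for s_next in (s - 1, s + 1):
                if 0 <= s_next < n:
                    candidates.append(move_reward + gamma * values[s_next])
            new_values.append(max(candidates))
        values = new_values
    return values


values = value_iteration(exit_rewards)
print(values)  # [3.0, 2.0, 4.0]
```

In this toy layout, exiting at the $3$ square is worth $3$, while walking two steps to the $4$ square and exiting there is worth only $\gamma^2 \cdot 4 = 1$ from the same starting point. Under these assumptions the comparison at the $3$ square is always $3$ against $\gamma^k \cdot 4$, where $k$ is the number of moves needed to reach the $4$ square.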

I am trying to understand how to determine whether it is better for the agent to terminate at the state numbered $3$ or to continue to the state numbered $4$ to collect the larger reward. I have marked with an X the terminal states where, according to my current calculations, I think the agent should exit.