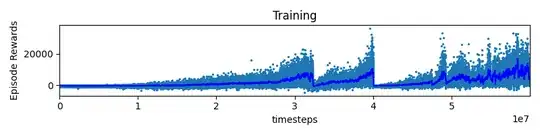

I am training a model using A2C with Stable Baselines 2. When I increased the number of timesteps, I noticed that the episode rewards seem to reset (see attached plot). I don't understand where these sudden decays or resets come from, and I am looking for practical experience or pointers to theory on what these resets could imply.


Could you please add some more information, e.g. which environment you are using, what the reward space for that env is, and what exactly the plot is showing? – pi-tau Aug 14 '23 at 09:31

2 Answers

0

I've seen this when training a deep Q-network (DQN). A few tips that may help you overcome the problem:

- Use a memory mechanism such as a replay buffer. Sometimes the agent forgets what it has learned. A replay buffer reminds the agent of what it saw several episodes ago.

- Something else that worked for me was changing the optimizer. As the DQN paper says, RMSprop is very useful for training neural-network-based agents.
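To make the replay buffer idea concrete, here is a minimal sketch in plain Python. The class name `ReplayBuffer` and its methods are made up for illustration and are not the Stable Baselines API. Note also that replay buffers belong to off-policy methods such as DQN; A2C is on-policy, so this shows the mechanism rather than a drop-in fix.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size FIFO store of past transitions (illustrative sketch)."""

    def __init__(self, capacity, seed=None):
        # deque with maxlen silently drops the oldest transition when full
        self._storage = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, obs, action, reward, next_obs, done):
        self._storage.append((obs, action, reward, next_obs, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal
        # correlation between consecutive transitions.
        batch = self._rng.sample(list(self._storage), batch_size)
        # Transpose a list of transitions into per-field tuples.
        return tuple(zip(*batch))

    def __len__(self):
        return len(self._storage)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(obs=t, action=0, reward=1.0, next_obs=t + 1, done=False)

obs, actions, rewards, next_obs, dones = buffer.sample(batch_size=2)
```

With a capacity of 3, only the three most recent transitions remain, so old experience is eventually dropped; a real buffer would typically hold many thousands of transitions.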

If these tips don't help, please give more information about your agent.

reza karbasi


0

I ran into this and learned that reward normalization is as important as observation normalization.

The y-axis of your plot shows that this env's reward is much higher than 1.0.

Try rescaling your env reward such that it always falls within the range -1 to 1.
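One way to do this is a thin wrapper around the environment. The sketch below assumes the old Gym-style API where `step` returns `(obs, reward, done, info)`. `ScaledRewardEnv`, `ToyEnv`, and the bound of 500 are hypothetical; you would pick the bound from your own reward range. Stable Baselines also provides a `VecNormalize` wrapper with a `norm_reward` option, which normalizes by a running estimate rather than a fixed constant.

```python
class ScaledRewardEnv:
    """Divides rewards by a known bound and clips them to [-1, 1]."""

    def __init__(self, env, max_abs_reward):
        self.env = env
        self.max_abs_reward = float(max_abs_reward)

    def reset(self):
        return self.env.reset()

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        # Keep the unscaled reward around for logging and plotting.
        info = dict(info, raw_reward=reward)
        scaled = reward / self.max_abs_reward
        # Clip in case the assumed bound turns out to be too small.
        scaled = max(-1.0, min(1.0, scaled))
        return obs, scaled, done, info


class ToyEnv:
    """Hypothetical stand-in env whose rewards are in the hundreds."""

    def __init__(self):
        self.t = 0

    def reset(self):
        self.t = 0
        return 0

    def step(self, action):
        self.t += 1
        reward = 250.0 if action == 1 else -400.0
        return self.t, reward, self.t >= 3, {}


env = ScaledRewardEnv(ToyEnv(), max_abs_reward=500.0)
env.reset()
_, r1, _, info1 = env.step(1)
_, r2, _, _ = env.step(0)
```

Keeping `raw_reward` in `info` lets you continue to plot episode rewards in the original units while the agent trains on the scaled values.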

Reward normalization is required because the reward scale affects the scale of the policy loss. If you look at your policy loss, you will notice that it is also much higher than 1.0. A policy loss that high makes training the neural network unstable.
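To see why, here is a rough sketch assuming the standard advantage actor-critic policy loss with an $n$-step advantage estimate (the exact loss in your library may differ in details such as the entropy bonus):

$$
L_\pi(\theta) = -\,\mathbb{E}_t\!\left[\log \pi_\theta(a_t \mid s_t)\,\hat{A}_t\right],
\qquad
\hat{A}_t = \sum_{k=0}^{n-1} \gamma^k r_{t+k} + \gamma^n V(s_{t+n}) - V(s_t).
$$

If every reward is multiplied by a constant $c$, and the value function tracks the scaled returns so that $V \to cV$, then $\hat{A}_t \to c\,\hat{A}_t$ and therefore

$$
\nabla_\theta L_\pi \to c\,\nabla_\theta L_\pi .
$$

Rewards in the hundreds thus act roughly like a learning rate that is hundreds of times larger for the policy head, which is one plausible source of sudden collapses.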

Pyrolistical
