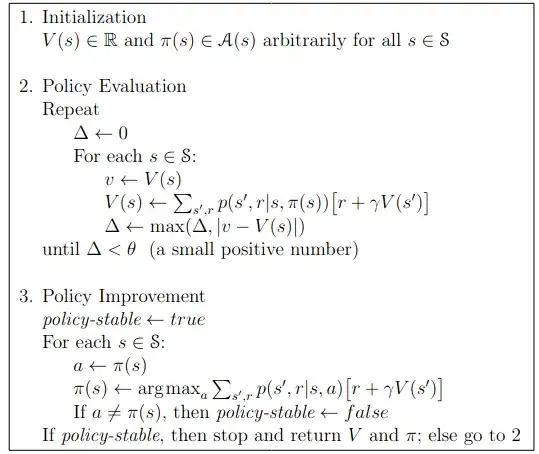

In Sutton and Barto's book (Reinforcement learning: An introduction. MIT press, 2018), the algorithm "Policy Iteration" is:

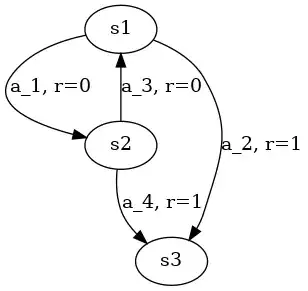

Here, $V(s)$ is initialized arbitrarily, meaning that I can choose any values I want. Moreover, I think nothing is stated about $\gamma$ here, so we can consider undiscounted environments where $\gamma = 1$. Now suppose we use the environment shown in the first figure, at the top of this post.

If I initialize:

- $V(s_1) = 10$

- $V(s_2) = 10$

- $V(s_3) = 0$

- $\pi(s_1) = a_1$

- $\pi(s_2) = a_3$

With this, it appears that the "Policy Evaluation" step has no effect, so the "Policy Improvement" step finds the policy stable and the algorithm stops immediately, outputting a policy whose supposedly optimal actions are $\pi(s_1) = a_1$ and $\pi(s_2) = a_3$. What am I missing?
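To make the concern concrete, here is a minimal sketch of the loop as I understand it. The transition table is a hypothetical stand-in, not a transcription of the figure: I assume deterministic moves, that $a_1$ and $a_3$ cycle between $s_1$ and $s_2$ with reward 0, and that made-up actions `a2` and `a4` lead to the terminal state $s_3$ with reward 5. The function names and the threshold `THETA` are my own choices too.

```python
# Hypothetical deterministic MDP (assumed for illustration, may differ from the figure).
# transitions[state][action] = (next_state, reward); s3 is terminal.
transitions = {
    "s1": {"a1": ("s2", 0.0), "a2": ("s3", 5.0)},
    "s2": {"a3": ("s1", 0.0), "a4": ("s3", 5.0)},
}
GAMMA = 1.0   # undiscounted, as in the question
THETA = 1e-8  # assumed evaluation threshold


def q_value(V, state, action):
    """One-step lookahead: r + gamma * V(s')."""
    next_state, reward = transitions[state][action]
    return reward + GAMMA * V[next_state]


def policy_evaluation(V, policy):
    """In-place sweeps until the largest change is below THETA."""
    while True:
        delta = 0.0
        for s in transitions:
            old_value = V[s]
            V[s] = q_value(V, s, policy[s])
            delta = max(delta, abs(old_value - V[s]))
        if delta < THETA:
            return


def policy_improvement(V, policy):
    """Make the policy greedy w.r.t. V; return True if nothing changed."""
    stable = True
    for s in transitions:
        old_action = policy[s]
        policy[s] = max(transitions[s], key=lambda a: q_value(V, s, a))
        if policy[s] != old_action:
            stable = False
    return stable


def policy_iteration(V, policy):
    """Alternate evaluation and improvement; return the number of rounds."""
    rounds = 0
    while True:
        policy_evaluation(V, policy)
        rounds += 1
        if policy_improvement(V, policy):
            return rounds


# The initialization from the question.
V = {"s1": 10.0, "s2": 10.0, "s3": 0.0}
policy = {"s1": "a1", "s2": "a3"}
rounds = policy_iteration(V, policy)
print(rounds, policy, V)
```

Under these assumptions, $V(s_1) = V(s_2) = 10$ already satisfies the evaluation update for the cycling policy, so the first sweep changes nothing and the greedy step keeps $a_1$ and $a_3$, even though that policy never reaches $s_3$ and never collects any reward.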

EDIT: I made a toy repository to reproduce this, in case you want to tweak the numbers or point out something I misunderstood: https://github.com/Gregwar/policy_iteration_initialization/blob/master/policy_iteration.py