Vanilla policy gradient has a loss function:

$$\mathcal{L}_{\pi_{\theta}}(\theta) = E_{\tau \sim \pi_{\theta}}\left[\sum\limits_{t = 0}^{\infty}\gamma^{t}r_{t}\right]$$

while in TRPO it is:

$$\mathcal{L}_{\pi_{\theta_{old}}}(\theta) = \frac{1}{1 - \gamma}E_{s, a \sim \pi_{\theta_{old}}}\left[\frac{\pi_{\theta}(a|s)}{\pi_{\theta_{old}}(a|s)}A^{\pi_{\theta_{old}}}(s,a)\right]$$

Vanilla policy gradient has some known problems, such as the mismatch between distance in parameter space and distance in policy space, and poor sample efficiency.

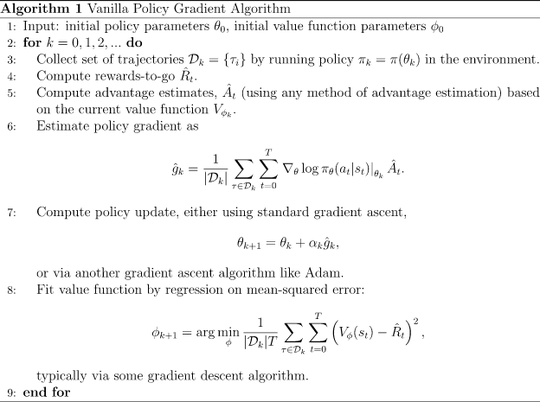

To tackle these problems, TRPO introduces importance sampling. However, when I compared the pseudocode of the two algorithms, I didn't see any obvious evidence that supports this claim. Both first sample multiple trajectories under the current policy. The former then simply uses the estimated gradient of the loss function to update the parameter $\theta$. The latter also uses the estimated gradient, but updates $\theta$ subject to a KL constraint. They look like almost the same procedure, with only a subtle difference in the optimization step.
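The similarity can be checked numerically. What follows is only a minimal sketch under assumptions that are not taken from either pseudocode: a hypothetical softmax policy over three actions in a single state, made-up sampled actions and advantage estimates, the $\frac{1}{1-\gamma}$ factor dropped, and the usual log-probability-weighted estimator standing in for the vanilla policy gradient. It compares the gradient of that estimator with the gradient of the importance-sampled surrogate, both evaluated at $\theta = \theta_{old}$ using central finite differences.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def vpg_objective(theta, actions, advantages):
    # Sample mean of log pi_theta(a) * A: its gradient is the usual
    # vanilla policy gradient estimate.
    probs = softmax(theta)
    terms = [math.log(probs[a]) * adv for a, adv in zip(actions, advantages)]
    return sum(terms) / len(terms)

def surrogate_objective(theta, theta_old, actions, advantages):
    # Sample mean of (pi_theta(a) / pi_theta_old(a)) * A: the
    # importance-sampled surrogate, without the 1 / (1 - gamma) factor.
    probs = softmax(theta)
    probs_old = softmax(theta_old)
    terms = [probs[a] / probs_old[a] * adv for a, adv in zip(actions, advantages)]
    return sum(terms) / len(terms)

def numerical_grad(f, theta, eps=1e-6):
    # Central finite-difference gradient of f at theta.
    grad = []
    for i in range(len(theta)):
        up = list(theta)
        down = list(theta)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

# Hypothetical toy data (illustrative values only, not from any experiment).
theta_old = [0.2, -0.5, 1.0]                    # logits of the old policy
actions = [0, 2, 2, 1, 0, 2]                    # actions sampled under pi_theta_old
advantages = [1.0, -0.5, 2.0, 0.3, -1.2, 0.7]   # advantage estimates

grad_vpg = numerical_grad(
    lambda th: vpg_objective(th, actions, advantages), theta_old)
grad_surr = numerical_grad(
    lambda th: surrogate_objective(th, theta_old, actions, advantages), theta_old)

# Largest componentwise difference between the two gradients at theta_old.
max_gap = max(abs(g1 - g2) for g1, g2 in zip(grad_vpg, grad_surr))
print("VPG gradient:      ", grad_vpg)
print("Surrogate gradient:", grad_surr)
print("Max difference:    ", max_gap)
```

On the same batch the two gradients agree up to finite-difference error, because the ratio equals 1 at $\theta = \theta_{old}$ and so $\nabla_\theta \frac{\pi_\theta}{\pi_{\theta_{old}}} = \nabla_\theta \log \pi_\theta$ there. Under these assumptions the two objectives only start to differ once $\theta$ moves away from $\theta_{old}$, which is where the KL constraint comes in.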

My question is: would it be more accurate to say that importance sampling just increases the stability of the sequential decision-making process, or rather prevents overly aggressive updates, but does not directly improve sample efficiency?

Pseudocode of the two algorithms (source: OpenAI Spinning Up documentation)