I'd like to design a deep learning architecture in which the output of a primary neural network $M_{\theta}$ determines which neural network $N^i_{\alpha}$ in a set of secondary networks $\mathcal{N}$ to use next. For example, $M_{\theta}$ could be a multiclass classifier, where the predicted class determines $N^i_{\alpha}$. The networks may have different dimensions and activation functions. Is there a name for this type of architecture?
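A minimal sketch of the routing described above, in plain Python. It assumes that each secondary network receives the same input x as $M_{\theta}$; it could equally receive M's hidden features. The helper names `dense` and `route` and the toy weights are hypothetical and only illustrate the idea.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def dense(weights: Sequence[Sequence[float]], bias: Sequence[float],
          activation: Callable[[float], float]) -> Layer:
    """One fully connected layer: x -> activation(W x + b), applied elementwise."""
    def layer(x: Vector) -> Vector:
        return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, bias)]
    return layer


def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    return z


def route(x: Vector, primary: Layer, secondaries: Sequence[Layer]) -> Tuple[int, Vector]:
    """Run the primary network M on x, take its predicted class i,
    then apply the i-th secondary network N^i to the same input x."""
    scores = primary(x)
    i = max(range(len(scores)), key=lambda k: scores[k])  # predicted class (argmax)
    return i, secondaries[i](x)


# Hypothetical toy networks: M is a 2-class linear classifier; the two
# secondary networks have different output sizes and activations.
primary = dense([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], identity)
secondaries = [
    dense([[1.0, 1.0]], [0.0], relu),                                          # N^0: 2 -> 1, ReLU
    dense([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.0], math.tanh),  # N^1: 2 -> 3, tanh
]

print(route([2.0, 1.0], primary, secondaries))   # class 0 -> N^0
print(route([-1.0, 2.0], primary, secondaries))  # class 1 -> N^1
```

Only the selected $N^i_{\alpha}$ is evaluated for a given input, so the secondary networks are free to have different output sizes and activations.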

Asked

Active

Viewed 120 times

0

1 Answers

1

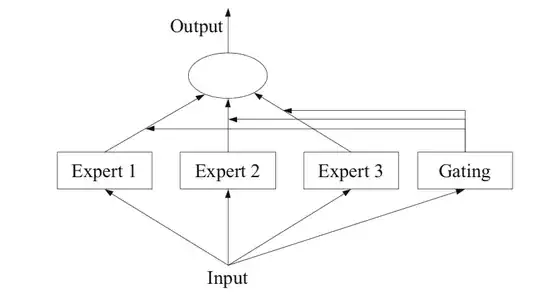

Mixture of Experts might be what you are looking for.

A Mixture of Experts (MoE) model divides a task into subtasks and uses a separate model, called an expert, for each subtask (these correspond to the networks $N^i_{\alpha}$ in your case). It also defines a gating model that decides which expert to use. At inference time, the gating model's output is used either to pool the experts' predictions (a weighted combination) or to select a single expert, which then makes the final prediction.
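A minimal sketch of MoE gating in plain Python. It assumes a linear gate followed by a softmax, and experts that share the same output size, which pooling requires. `linear`, `softmax`, and `moe_forward` are hypothetical helpers. Setting `top1=True` selects the single highest-weighted expert, which corresponds to the hard routing in the question.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Network = Callable[[Vector], Vector]


def linear(weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Network:
    """Affine map x -> W x + b (no activation)."""
    def f(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]
    return f


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate: Network, experts: Sequence[Network],
                top1: bool = False) -> Tuple[Vector, Vector]:
    """Return (output, gate_weights) for one input x.

    Soft mode pools all expert outputs, weighted by the gate; top1 mode
    evaluates only the expert with the largest gate weight."""
    weights = softmax(gate(x))
    if top1:
        i = max(range(len(weights)), key=lambda k: weights[k])
        return experts[i](x), weights
    outputs = [expert(x) for expert in experts]
    dim = len(outputs[0])
    pooled = [sum(weights[k] * outputs[k][d] for k in range(len(experts)))
              for d in range(dim)]
    return pooled, weights


# Hypothetical toy setup: two 2 -> 1 experts and a gate that ignores x,
# so both experts get weight 0.5.
experts = [linear([[1.0, 0.0]], [0.0]), linear([[0.0, 1.0]], [0.0])]
gate = linear([[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])

print(moe_forward([2.0, 4.0], gate, experts))             # soft: 0.5*2 + 0.5*4
print(moe_forward([2.0, 4.0], gate, experts, top1=True))  # hard: first expert on a tie
```

In soft mode, the softmax weights are differentiable, so the gate and the experts can be trained together by backpropagation. A hard argmax selection passes no gradient to the gate through the choice itself. Experts with different output sizes, as in the question, only work with hard selection, because pooling needs a common output shape.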

DKDK