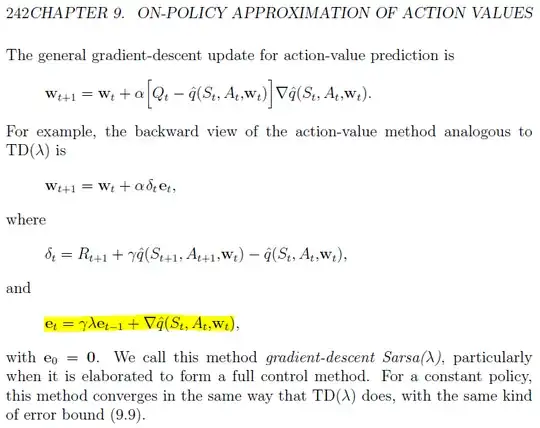

I'm implementing the Watkins' Q(λ) algorithm with function approximation (in 2nd edition of Sutton & Barto). I am very confused about updating the eligibility traces because, at the beginning of chapter 9.3 "Control with Function Approximation", they are updated considering the gradient: $ e_t = \gamma \lambda e_{t-1} + \nabla \widehat{q}(S_t, A_t, w_t) $, as shown below.

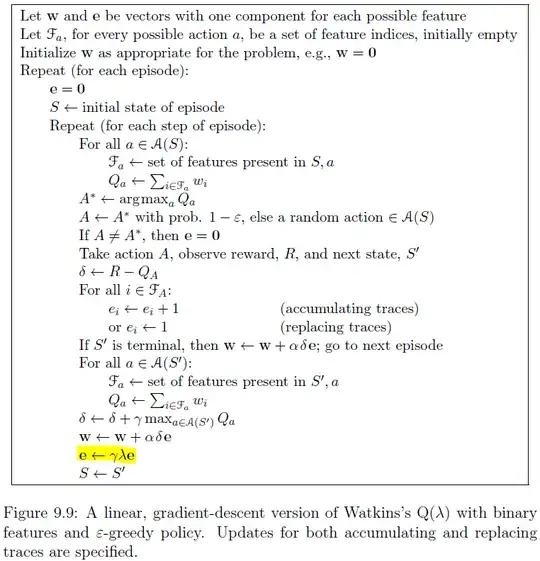

Nevertheless, in Figure 9.9, for the exploitation phase the eligibility traces are updated without the gradient: $ e = \gamma \lambda e $.

Furthermore, by googling, I found that the gradient is simplified with the value of the i-th feature: $ \nabla \widehat{q}(S_t, A_t, w_t) = f_i(S_t, A_t)$.

I thought that, in Figure 9.9, the gradient is not considered because in the next step the eligibility traces are increased by 1 for the active features. So, the +1 can be seen as the value of the gradient, as I found on google, being binary features. But I'm not sure.

So, what is (and why) the right rule to update the eligibility traces?